Paper: arXiv

Authors: Mengxia Yu, De Wang, Qi Shan, Colorado Reed, Alvin Wan

Overview

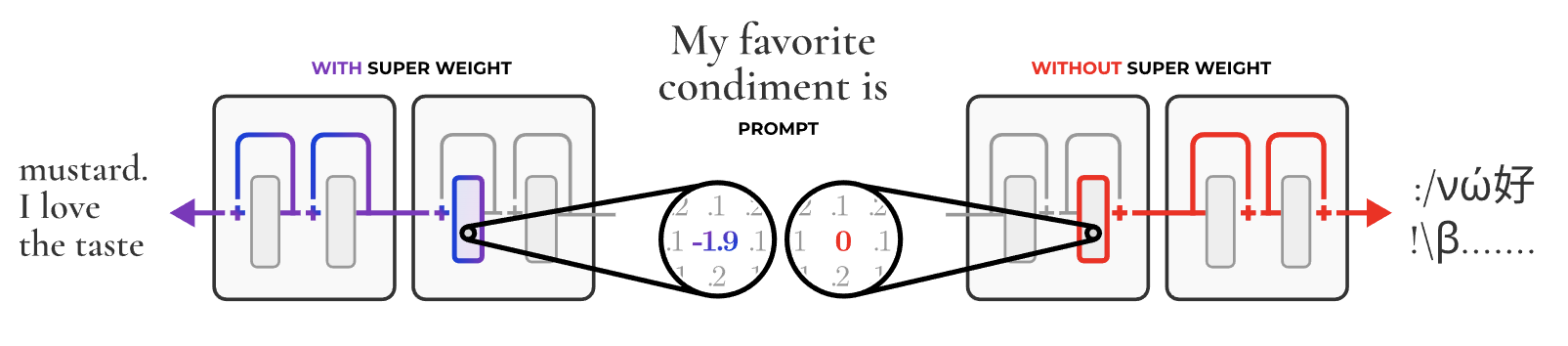

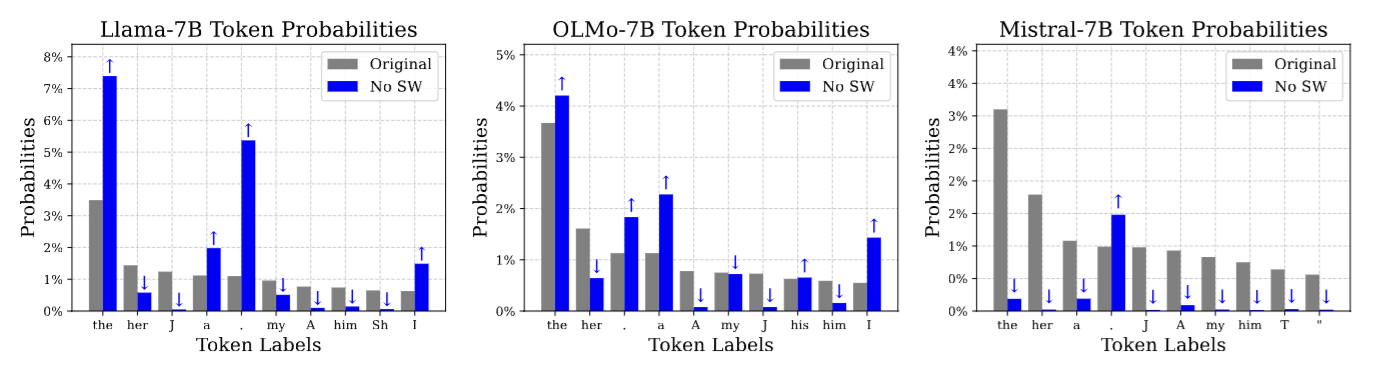

In this paper, the authors discover a tiny number of weights, which they term Super Weights. Each super weight is a single scalar among the billions of parameters in the model. Across the models they test, there are between one and six super weights. What makes these scalars super is that zero-ablating even one of them increases perplexity by orders of magnitude and cuts average task performance by nearly 50%. Removing a single scalar out of billions is enough. The activations immediately after the super weight have very large magnitudes, and the authors call them super activations. They also find that super weights help suppress stop words in the output distribution. On top of all that, scaling the super weight by a factor can also improve performance slightly. Finally, they look at quantization and find that preserving this single super weight while quantizing all other weight outliers is competitive with other quantization techniques.

Identification of Super Weights

How do we identify them amongst billions of parameters?

Super activations

Super activations, first discovered in Sun et al. (2024), are a handful of exceptionally massive activations that persist across many layers and were found to be critical for the model. We can simply plot the activations: the massive outliers are the super activations. Super activations always appear at the same position regardless of the input.
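A minimal sketch of "plot the activations and look for massive outliers", written as an automatic check. It flags entries of one layer's hidden state that are both large in absolute terms and many times the median magnitude. The function name `find_massive_activations` and both thresholds (`abs_floor`, `ratio`) are illustrative assumptions, not values from the paper, and the toy hidden state is made up.

```python
from statistics import median


def find_massive_activations(hidden, abs_floor=100.0, ratio=1000.0):
    """Return (token, channel, value) triples that look like super activations.

    hidden: one layer's hidden state as a list of rows (one row per token),
            each row a list of floats (one per channel).
    abs_floor, ratio: illustrative thresholds (assumptions, not from the paper).
    """
    magnitudes = [abs(v) for row in hidden for v in row]
    med = median(magnitudes)
    hits = []
    for t, row in enumerate(hidden):
        for c, v in enumerate(row):
            # Flag a value only if it is large in absolute terms AND dwarfs the typical magnitude.
            if abs(v) > abs_floor and abs(v) > ratio * med:
                hits.append((t, c, v))
    return hits


# Toy hidden state: 3 tokens x 4 channels, with one huge value in channel 2 of token 0.
toy_hidden = [
    [0.1, -0.2, 2400.0, 0.05],
    [0.3, 0.1, 0.4, -0.1],
    [-0.2, 0.2, 0.3, 0.1],
]
print(find_massive_activations(toy_hidden))  # [(0, 2, 2400.0)]
```

Running the same check on every layer and noting the first layer where a hit appears mirrors the "plot across layers" approach described above.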

Super activations first appear immediately after the super weight. To test whether this is correlation or causation, the authors removed the super weight, and the super activations disappeared.

Super weights cause super activations.

Identifying super weight by activation spikes

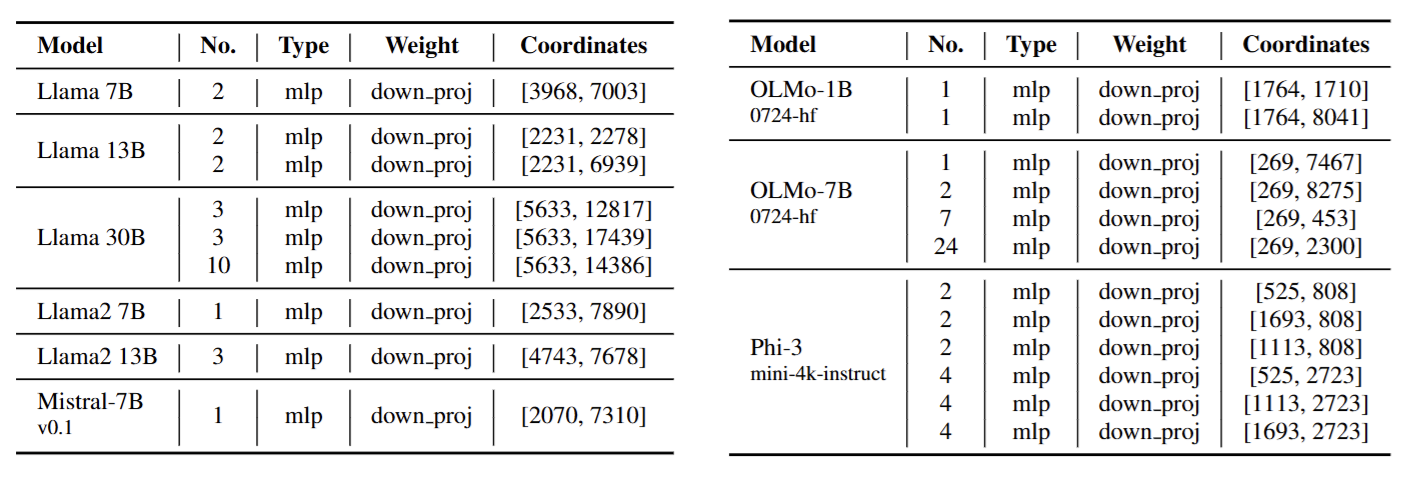

Super weights are always found in the down-projection matrix (`mlp.down_proj`). The authors probably found this by trial and error.

Super weights can be found by detecting spikes in the input and output activation distributions of `mlp.down_proj` across layers.

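Let the weight matrix $W \in \mathbb{R}^{D \times H}$ transform the input $X \in \mathbb{R}^{L \times H}$ into the output $Y \in \mathbb{R}^{L \times D}$. Here, $D$ is the feature dimension ($d_{model}$), $H$ is the hidden dimension, and $L$ is the sequence length. The transformation is $Y = XW^\top$, where each element is calculated as:

$$Y_{ij} = \sum_{k=1}^{H} X_{ik} W_{jk}$$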

A super activation occurs when $Y_{ij}$ becomes unusually large. This happens when both $X_{ik}$ and $W_{jk}$ are outliers, much larger than the other values. In this case, their product dominates the sum, meaning:

$$Y_{ij} \approx X_{ik} W_{jk}$$
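A quick numeric illustration of why one outlier product swamps the sum $Y_{ij} = \sum_k X_{ik} W_{jk}$. The numbers below are made up for illustration: one token's input row and one row of $W$, each with an outlier at the same channel $k = 2$.

```python
# Toy row of X (one token i, H = 4 hidden channels) and one row of W (output channel j).
x_row = [0.5, -1.0, 300.0, 0.8]    # X[i][k], with an outlier at k = 2
w_row = [0.02, 0.01, -2.5, -0.03]  # W[j][k], with an outlier at the same k = 2

full = sum(x * w for x, w in zip(x_row, w_row))  # Y[i][j] = sum_k X[i][k] * W[j][k]
dominant = x_row[2] * w_row[2]                   # the single outlier product X[i][k] * W[j][k]
relative_gap = abs(full - dominant) / abs(full)  # share of Y[i][j] NOT explained by the outlier pair

print(f"Y_ij = {full:.3f}, X_ik * W_jk = {dominant:.3f}, relative gap = {relative_gap:.1e}")
```

All the other terms together contribute only a few thousandths, so $Y_{ij}$ is essentially fixed by the pair $(X_{ik}, W_{jk})$.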

So the large output $Y_{ij}$ is essentially determined by the specific $X_{ik}$ and $W_{jk}$. To find $W_{jk}$, we:

- Find outliers: Plot the input ($X$) and output ($Y$) activations of the `mlp.down_proj` layer and look for extreme values.
- Pinpoint the super weight: For each outlier $Y_{ij}$, take its output channel $j$ as the row and the channel $k$ of the matching input outlier $X_{ik}$ as the column of the corresponding large weight in $W$. This gives us the super weight $W_{jk}$.
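The two steps above can be sketched on a toy down-projection layer as follows. `locate_super_weight` is a hypothetical helper, the matrices are made up, and matrices are plain lists of lists to keep the example dependency-free. In a real model one would presumably capture $X$ and $Y$ for each layer's `mlp.down_proj` (for example with PyTorch forward hooks) and run this per layer; that plumbing is omitted.

```python
def locate_super_weight(X, W):
    """Locate a candidate super weight in a down-projection layer (hypothetical helper).

    X: L x H input activations, W: D x H weight matrix, so Y = X W^T is L x D.
    Returns (j, k, W[j][k]): j is the output channel of the largest |Y| entry,
    k is the input channel of the largest |X| entry on the same token.
    """
    L, H, D = len(X), len(X[0]), len(W)
    # Y[i][j] = sum_k X[i][k] * W[j][k]
    Y = [[sum(X[i][k] * W[j][k] for k in range(H)) for j in range(D)] for i in range(L)]

    # Step 1 (find outliers): the most extreme output activation is the super activation Y[i][j].
    i, j = max(((r, c) for r in range(L) for c in range(D)),
               key=lambda rc: abs(Y[rc[0]][rc[1]]))

    # Step 2 (pinpoint): on that token, the most extreme input channel gives the column k.
    k = max(range(H), key=lambda c: abs(X[i][c]))
    return j, k, W[j][k]


# Toy layer: L = 2 tokens, H = 3 hidden channels, D = 2 output channels.
X = [[0.1, 50.0, -0.2],
     [0.3, 0.2, 0.1]]
W = [[0.01, 0.02, -0.01],
     [0.03, -4.0, 0.02]]
print(locate_super_weight(X, W))  # (1, 1, -4.0)
```

Picking the largest $|X_{ik}|$ on the same token follows the reasoning above that both factors must be outliers; a more careful version could instead pick the $k$ with the largest product $|X_{ik} W_{jk}|$.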

Super weights are found in both instruction-tuned and base pre-trained models, so instruction tuning (including RLHF) does not appear to remove them. I suspect other RL fine-tuning may not interfere with super weights either.

Mechanisms of Super Weights

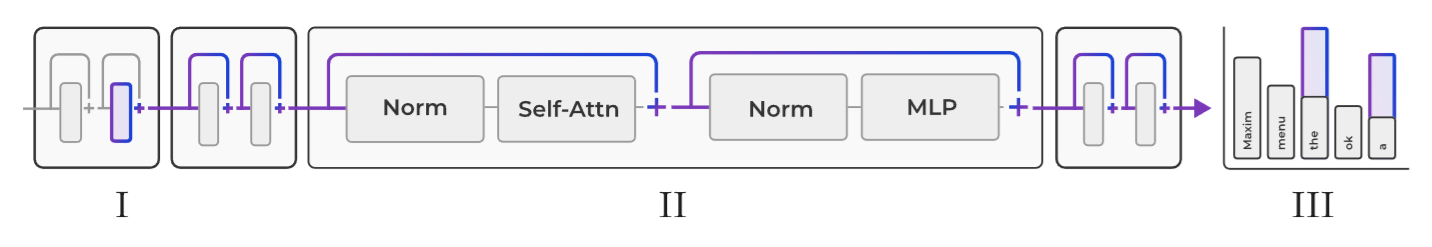

They find that super weights induce super activations, which have lasting effects throughout the forward pass across all layers, and that super weights suppress the likelihood of stop words.
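One simple way to quantify "stop-word likelihood" is the total softmax probability the model assigns to a set of stop-word tokens, compared between the original and the pruned model. The sketch below is an assumption about how such a measurement could look, not the paper's exact metric; `stopword_mass`, the four-token vocabulary, and both logit vectors are invented for illustration.

```python
import math


def stopword_mass(logits, vocab, stopwords):
    """Total softmax probability assigned to stop-word tokens (hypothetical helper).

    logits: list of floats, one per vocabulary entry.
    vocab: list of token strings, aligned with logits.
    stopwords: set of token strings treated as stop words.
    """
    m = max(logits)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return sum(e for e, tok in zip(exps, vocab) if tok in stopwords) / total


vocab = ["the", ".", "cat", "sat"]
stopwords = {"the", "."}
logits_original = [1.0, 1.0, 1.0, 1.0]  # made-up logits standing in for the original model
logits_pruned = [3.0, 3.0, 1.0, 1.0]    # made-up logits standing in for a model with the SW removed
print(stopword_mass(logits_original, vocab, stopwords))
print(stopword_mass(logits_pruned, vocab, stopwords))
```

If super weights suppress stop words, removing one should push this mass up, as in the made-up second vector.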

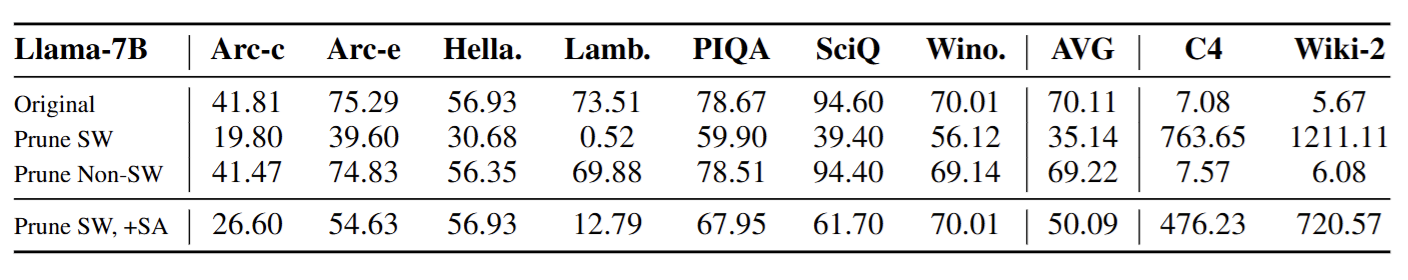

They also conduct an experiment with three conditions: (1) the original model; (2) the super weight removed (Prune SW), i.e., the weight scalar set to zero; and (3) the super weight removed and the super activation restored at the layer where it first appears (Prune SW, +SA).
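The three conditions can be mimicked on a single toy layer to make the interventions concrete. This is only a sketch of the mechanics under simplifying assumptions: one made-up down-projection layer, a super weight assumed to sit at `W[1][1]`, and a super activation at `Y[0][1]`. In a real model, condition (3) would be implemented by overwriting that one activation during the forward pass, and its effect would propagate through all later layers.

```python
import copy


def down_proj(X, W):
    """Y = X W^T for list-of-lists matrices (X: L x H, W: D x H)."""
    return [[sum(x * w for x, w in zip(row, w_row)) for w_row in W] for row in X]


def run_conditions(X, W, j, k, i):
    """Toy version of the three conditions for a super weight at W[j][k]
    whose super activation appears at Y[i][j]. Returns three output matrices."""
    original = down_proj(X, W)               # (1) original model

    pruned_W = copy.deepcopy(W)
    pruned_W[j][k] = 0.0                     # (2) Prune SW: zero the single scalar
    pruned = down_proj(X, pruned_W)

    restored = copy.deepcopy(pruned)         # (3) Prune SW, +SA: restore only the super activation;
    restored[i][j] = original[i][j]          #     every other entry keeps the pruned value
    return original, pruned, restored


X = [[0.1, 50.0, -0.2],
     [0.3, 0.2, 0.1]]
W = [[0.01, 0.02, -0.01],
     [0.03, -4.0, 0.02]]
orig, pruned, restored = run_conditions(X, W, j=1, k=1, i=0)
print(orig[0][1], pruned[0][1], restored[0][1])
```

Comparing condition (3) with condition (2) isolates how much of the super weight's effect flows through the super activation alone.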

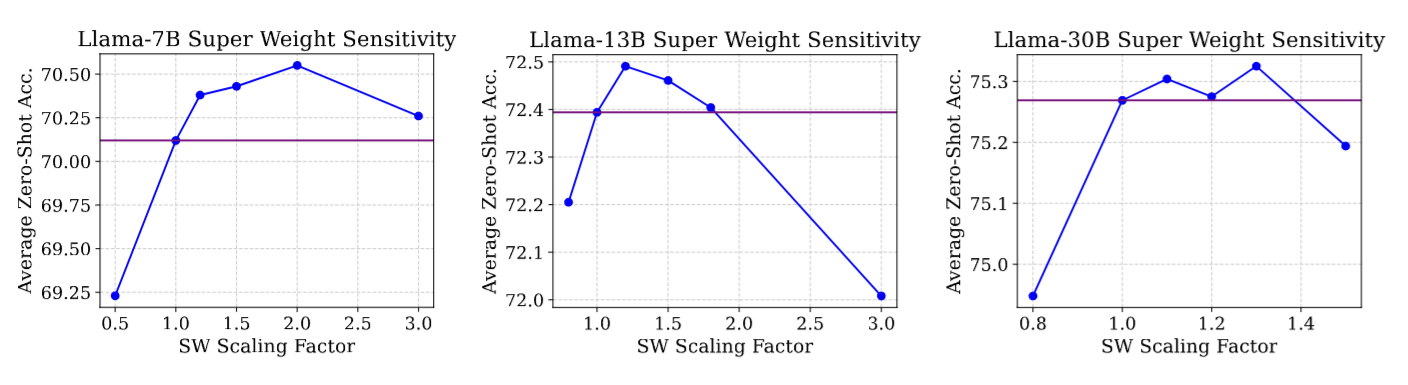

Next up, they scale the super weight.
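Scaling the super weight amounts to multiplying that one scalar by a factor and leaving everything else untouched. A minimal sketch on the same kind of made-up toy layer; `scale_super_weight` is a hypothetical helper, the location `W[1][1]` is assumed, and the factors are arbitrary rather than the ones the paper evaluates.

```python
def scale_super_weight(W, j, k, alpha):
    """Return a copy of W with only the super weight W[j][k] multiplied by alpha."""
    scaled = [row[:] for row in W]  # copy rows so the original matrix is untouched
    scaled[j][k] *= alpha
    return scaled


def down_proj(X, W):
    """Y = X W^T for list-of-lists matrices (X: L x H, W: D x H)."""
    return [[sum(x * w for x, w in zip(row, w_row)) for w_row in W] for row in X]


X = [[0.1, 50.0, -0.2],
     [0.3, 0.2, 0.1]]
W = [[0.01, 0.02, -0.01],
     [0.03, -4.0, 0.02]]
for alpha in (0.0, 1.0, 1.5, 2.0):  # alpha = 0.0 is the same as pruning the super weight
    print(alpha, down_proj(X, scale_super_weight(W, 1, 1, alpha))[0][1])
```

Because the super activation is dominated by the single product $X_{ik} W_{jk}$, it scales almost linearly with the factor.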

Quantization

I’m not covering quantization here, but you can read about it in the paper. As expected, the super weight is very important, and this shows in quantization too.
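For intuition only, here is a minimal sketch of why holding out the super weight helps round-to-nearest (RTN) quantization. It assumes symmetric absmax RTN over one made-up block of weights; the function names, the 4-bit setting, and the block values are my own assumptions, and this is not the paper's quantization procedure. A single huge weight inflates the quantization scale so much that every ordinary weight rounds to zero, while keeping it in full precision lets the remaining weights use a much finer scale.

```python
def rtn_quantize(values, bits=4):
    """Symmetric absmax round-to-nearest quantization; returns dequantized values."""
    qmax = 2 ** (bits - 1) - 1  # e.g. 7 for signed 4-bit
    scale = max(abs(v) for v in values) / qmax
    if scale == 0:
        return list(values)
    return [round(v / scale) * scale for v in values]


def rtn_preserving(values, keep_idx, bits=4):
    """RTN-quantize every entry except values[keep_idx], which stays in full precision."""
    rest = [v for n, v in enumerate(values) if n != keep_idx]
    q_rest = rtn_quantize(rest, bits)
    return q_rest[:keep_idx] + [values[keep_idx]] + q_rest[keep_idx:]


def mse(a, b):
    """Mean squared error between two equal-length lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Made-up weight block: ordinary small weights plus one huge "super weight" at index 3.
block = [0.011, -0.023, 0.017, -4.0, 0.005, -0.009, 0.02, 0.013]
print("plain RTN MSE:      ", mse(block, rtn_quantize(block)))
print("preserve-SW RTN MSE:", mse(block, rtn_preserving(block, keep_idx=3)))
```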

Thoughts

Neural networks are full of surprises. I can imagine this being used as some sort of adversarial attack: a few bit flips would be enough to make the model useless. We should also note that the models tested here are quite old, even though the paper is quite recent. I wonder if humans have some super neurons in their brains too. Time will tell.

It would be nice to analyze these weights at different pre-training checkpoints. My guess is that during the initial stages of pre-training, before the model has a good internal representation of language, its best shot at prediction is stop words: they occur so often that there is a high chance the next token is one. As training continues, the model develops sophisticated circuits for next-token prediction and must move away from the earlier, simple circuit that predicts stop words. This is where the super weight could come in, inhibiting stop-word predictions. Gradient descent does what is easiest, so instead of unlearning the simple stop-word circuit, inhibiting it with a super weight may have been easier. Super weights may just be an artifact of the early stages of pre-training.