Paper: arXiv

Authors: Yuancheng Xu, Udari Madhushani Sehwag, Alec Koppel, Sicheng Zhu, Bang An, Furong Huang, Sumitra Ganesh

Date of Publication: 10th October 2024

Overview

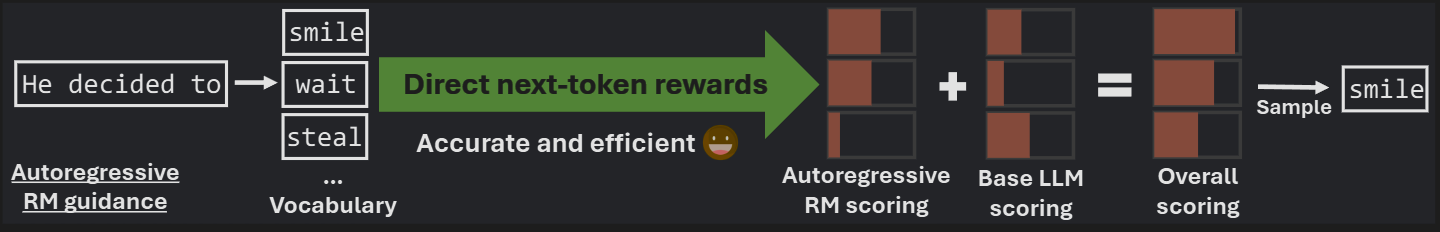

Alignment is usually done with methods like RLHF or DPO, which are applied during post-training. GenARM is one of many test-time alignment methods. It learns a token-level (autoregressive) reward model: at every decoding step, the reward model outputs a reward distribution over the next token, alongside the base LLM's own next-token distribution. While sampling from the base LLM, we add the reward model's log-probabilities (scaled by a factor 1/β) to the base LLM's log-probabilities, renormalize, and sample. The picture below should make this clearer.

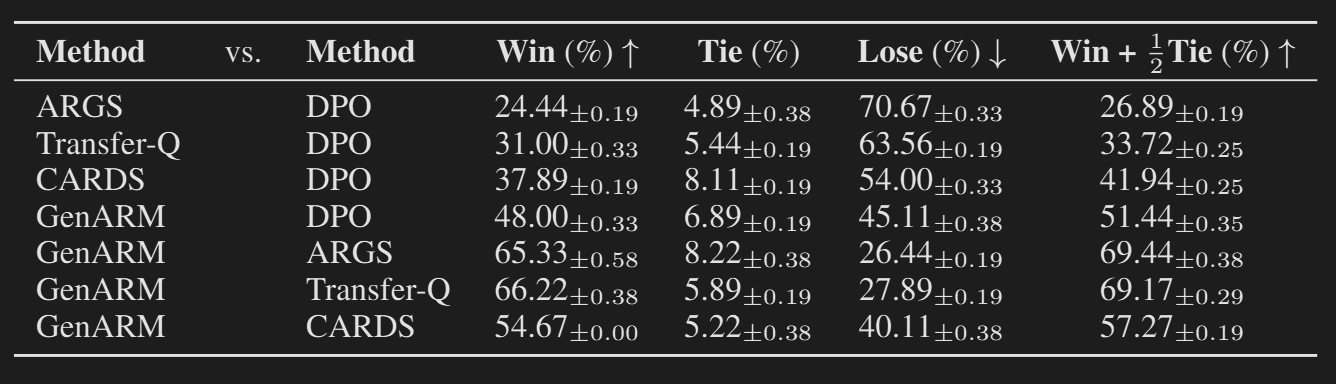

The authors find that sampling this way gives better alignment than other test-time alignment methods, and alignment comparable to the training-time method DPO.

Preliminaries

RLHF

RLHF begins with a base LLM (the policy) and involves three major steps: (1) preference data collection, (2) reward learning, and (3) RL optimization.

1) Preference Data Collection

The base LLM is given prompts $x$ and generates a pair of answers $(y_1, y_2) \sim \pi_{\text{base}}(\cdot \mid x)$ for each. Human labelers then mark one answer as $y_w$ (preferred response) and the other as $y_l$ (non-preferred response). Let $\mathcal{D} = \{(x, y_w, y_l)\}$ denote the collected dataset.

2) Reward Learning

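The reward model $r(x, y)$ is learned by minimising the Bradley-Terry negative log-likelihood:

$$\mathcal{L}(r) = -\mathbb{E}_{(x, y_w, y_l) \sim \mathcal{D}}\Big[\log \sigma\big(r(x, y_w) - r(x, y_l)\big)\Big]$$

where $\sigma$ is the sigmoid function. To minimise this loss, the reward model must assign high rewards to $y_w$ and low rewards to $y_l$, i.e. make the margin $r(x, y_w) - r(x, y_l)$ large.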
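A minimal sketch of this loss in plain Python, assuming the reward model has already produced scalar rewards $r(x, y_w)$ and $r(x, y_l)$ for each pair (the toy reward values below are made up). It uses the identity $-\log \sigma(z) = \log(1 + e^{-z})$ and evaluates it stably for large $|z|$.

```python
import math


def bt_loss(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry loss for one pair: -log sigmoid(r_chosen - r_rejected).

    Uses -log sigmoid(z) = log(1 + exp(-z)), split by the sign of z so that
    exp() never overflows.
    """
    z = r_chosen - r_rejected
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


def dataset_loss(pairs):
    """Average loss over a list of (r(x, y_w), r(x, y_l)) reward pairs."""
    return sum(bt_loss(rw, rl) for rw, rl in pairs) / len(pairs)


# Toy rewards: a reward model that ranks y_w above y_l gets a lower loss.
good_rm = [(2.0, -1.0), (1.5, 0.0)]
bad_rm = [(-1.0, 2.0), (0.0, 1.5)]
print(dataset_loss(good_rm) < dataset_loss(bad_rm))  # True
print(round(bt_loss(0.0, 0.0), 4))  # 0.6931, i.e. log 2 when the rewards tie
```

In a real setup the rewards come from a neural network and this loss is minimised by gradient descent; only the loss computation is shown here.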

3) RL optimization

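To fine-tune the base LLM to human preferences, the policy $\pi$ (initialized from $\pi_{\text{base}}$) is trained to maximize the KL-regularized objective:

$$\max_{\pi}\; \mathbb{E}_{x \sim \mathcal{D},\, y \sim \pi(\cdot \mid x)}\big[r(x, y)\big] - \beta\, \mathbb{E}_{x \sim \mathcal{D}}\Big[\mathrm{KL}\big(\pi(\cdot \mid x) \,\|\, \pi_{\text{base}}(\cdot \mid x)\big)\Big]$$

where $\beta > 0$ controls how far $\pi$ may drift from $\pi_{\text{base}}$.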
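To see the trade-off this objective encodes, here is a toy sketch with a single prompt and only three possible responses; the base probabilities, rewards, and $\beta$ are hypothetical. It compares the base policy, a policy that almost always picks the highest-reward response, and the known closed-form maximizer $\pi^*(y) \propto \pi_{\text{base}}(y)\exp(r(y)/\beta)$, whose objective value equals $\beta \log Z$.

```python
import math


def objective(pi, pi_base, rewards, beta):
    """J(pi) = E_{y~pi}[r(y)] - beta * KL(pi || pi_base) over a finite response set."""
    expected_reward = sum(p * r for p, r in zip(pi, rewards))
    kl = sum(p * math.log(p / q) for p, q in zip(pi, pi_base) if p > 0)
    return expected_reward - beta * kl


def optimal_policy(pi_base, rewards, beta):
    """Closed-form maximizer pi*(y) = pi_base(y) * exp(r(y) / beta) / Z."""
    weights = [q * math.exp(r / beta) for q, r in zip(pi_base, rewards)]
    z = sum(weights)
    return [w / z for w in weights], z


# Hypothetical toy example: three possible responses to one prompt.
pi_base = [0.6, 0.3, 0.1]
rewards = [0.0, 1.0, 2.0]
beta = 0.5

pi_star, z = optimal_policy(pi_base, rewards, beta)
greedy = [1e-9, 1e-9, 1 - 2e-9]  # (almost) always pick the highest-reward response

for name, pi in [("base", pi_base), ("greedy", greedy), ("optimal", pi_star)]:
    print(name, round(objective(pi, pi_base, rewards, beta), 4))

# The optimum's value equals beta * log Z.
print(math.isclose(objective(pi_star, pi_base, rewards, beta), beta * math.log(z)))  # True
```

The greedy policy earns the most reward but pays a large KL penalty; the base policy pays no penalty but earns little reward; the closed-form policy balances the two.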

The disadvantage of RLHF (and of DPO) is that both need extra compute to post-train the base LLM. Test-time alignment trades post-training compute for test-time compute.

Test-time alignment

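Test-time alignment works directly with the unaligned base LLM, but it still needs a reward model. Let $r(x, y)$ be a reward model and $\pi_{\text{base}}$ be the base LLM. Sampling is done from:

$$\pi_{\text{decode}}(y \mid x) = \frac{1}{Z(x)}\, \pi_{\text{base}}(y \mid x) \exp\!\left(\frac{1}{\beta}\, r(x, y)\right), \qquad Z(x) = \sum_{y} \pi_{\text{base}}(y \mid x) \exp\!\left(\frac{1}{\beta}\, r(x, y)\right)$$

Here $\pi_{\text{decode}}(y \mid x)$ is the distribution we actually sample from, $\pi_{\text{base}}(y \mid x)$ is the base LLM's distribution over responses $y$, $r(x, y)$ is the reward assigned to response $y$ for prompt $x$, and $\beta > 0$ controls how strongly the reward reshapes the base distribution. $Z(x)$ is the partition function; it normalizes the product so that $\pi_{\text{decode}}$ is a valid probability distribution. This $\pi_{\text{decode}}$ is the closed-form optimum of the KL-regularized objective from step 3 (RL optimization), so test-time alignment targets the same distribution as RLHF without changing the base LLM's weights.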

Instead of modifying the base LLM itself, we only modify the distribution from which we sample.
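A minimal sketch of this sampling rule, assuming we can enumerate a small set of candidate responses with known base probabilities and rewards (all values are hypothetical). In a real LLM the response space is far too large to enumerate, which is exactly the problem discussed next.

```python
import math
import random


def tilted_distribution(base_probs, rewards, beta):
    """pi_decode(y) = pi_base(y) * exp(r(y) / beta) / Z, computed in log space."""
    logits = [math.log(p) + r / beta for p, r in zip(base_probs, rewards)]
    m = max(logits)  # subtract the max before exponentiating (log-sum-exp trick)
    unnorm = [math.exp(lg - m) for lg in logits]
    z = sum(unnorm)  # partition function, up to the constant factor exp(m)
    return [u / z for u in unnorm]


def sample(responses, probs, rng):
    return rng.choices(responses, weights=probs, k=1)[0]


responses = ["refuse", "helpful answer", "unsafe answer"]
base_probs = [0.2, 0.5, 0.3]  # hypothetical base-LLM probabilities
rewards = [0.0, 1.0, -2.0]    # hypothetical reward-model scores

# Large beta: stay close to the base LLM. Small beta: chase the reward.
for beta in [100.0, 1.0, 0.1]:
    print(beta, [round(p, 3) for p in tilted_distribution(base_probs, rewards, beta)])

rng = random.Random(0)
print(sample(responses, tilted_distribution(base_probs, rewards, 1.0), rng))
```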

Test-time alignment methods differ mainly in how they train and use the reward model $r$. Since LLMs generate text token by token, sampling this way requires a reward for every candidate next token. Reward models, however, usually score only complete trajectories (i.e. once an entire answer has been generated), so they cannot directly be used with the sampling rule above.

There are several ways to design the reward model to work around this, and this paper proposes a new one.

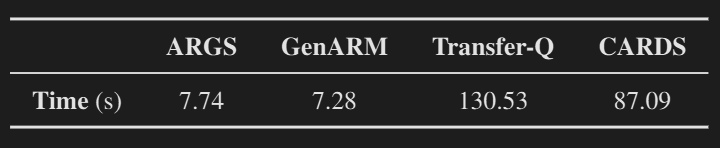

- Sampling entire trajectories for every token: A naive approach is to sample several full trajectories (completed answers) for each candidate token, score each with the reward model, and average the rewards. This is far too compute-intensive.

- Using a reward model for partial trajectories: A reward model can be trained to assign rewards to partial, incomplete trajectories, and these rewards can then be used to sample as described above.
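The cost of the naive approach is easy to see in a toy sketch. Here `complete` and `reward` are hypothetical stand-ins for the base LLM and a trajectory-level reward model; scoring every candidate next token with `num_rollouts` completions costs |V| × `num_rollouts` reward evaluations (and as many full generations) for a single token position. A reward model for partial trajectories replaces all of this with one evaluation per candidate prefix.

```python
import random

VOCAB = ["a", "b", "c"]
reward_calls = 0


def complete(tokens, rng, length=5):
    """Hypothetical base-LLM stand-in: pads the answer with random tokens."""
    out = list(tokens)
    while len(out) < length:
        out.append(rng.choice(VOCAB))
    return out


def reward(tokens):
    """Hypothetical trajectory-level reward: fraction of "a" tokens."""
    global reward_calls
    reward_calls += 1
    return tokens.count("a") / len(tokens)


def rollout_value(prefix, candidate, num_rollouts, rng):
    """Naive estimate of the reward of appending `candidate` to `prefix`:
    complete the answer `num_rollouts` times and average the rewards."""
    total = 0.0
    for _ in range(num_rollouts):
        total += reward(complete(prefix + [candidate], rng))
    return total / num_rollouts


rng = random.Random(0)
values = {v: rollout_value(["b"], v, num_rollouts=8, rng=rng) for v in VOCAB}
print(values)
print(reward_calls)  # 24 = len(VOCAB) * num_rollouts, for a single token position
```

With a real vocabulary of tens of thousands of tokens and full-length generations per rollout, this multiplies quickly.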

GenARM

The authors train a reward model that assigns a reward to every token of the completion. It is formally defined as:

$$r(x, y) = \sum_{t} \log \pi_r(y_t \mid x, y_{<t})$$

where $\pi_r(y_t \mid x, y_{<t})$ is a learnable distribution that predicts the next-token reward, conditioned on the prompt and all previously generated tokens.
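A minimal sketch of the autoregressive reward $r(x, y) = \sum_t \log \pi_r(y_t \mid x, y_{<t})$. The function `toy_pi_r` is a hypothetical stand-in for the reward LLM's next-token distribution. The per-token terms are what make this useful: the partial sum over the first $k$ tokens is already a reward for the partial trajectory $y_{\le k}$.

```python
import math


def toy_pi_r(x, prefix):
    """Hypothetical reward-LLM stand-in: next-token probabilities given the
    prompt and prefix (this toy ignores both and always prefers polite tokens)."""
    return {"please": 0.5, "thanks": 0.4, "rude": 0.1}


def autoregressive_reward(x, y, next_token_probs):
    """r(x, y) = sum_t log pi_r(y_t | x, y_<t); also returns the per-token terms."""
    per_token = [math.log(next_token_probs(x, y[:t])[tok]) for t, tok in enumerate(y)]
    return sum(per_token), per_token


r_polite, terms = autoregressive_reward("prompt", ["please", "thanks"], toy_pi_r)
r_rude, _ = autoregressive_reward("prompt", ["rude", "rude"], toy_pi_r)
print(terms)              # one reward term per generated token
print(r_polite > r_rude)  # True
# Partial sums are rewards for partial trajectories, e.g. after the first token:
print(math.isclose(terms[0], math.log(0.5)))  # True
```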

Training Objective

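The authors parametrize $\pi_r$ with a standard LLM and train it with the objective:

$$\min_{\pi_r}\; -\mathbb{E}_{(x, y_w, y_l) \sim \mathcal{D}}\left[\log \sigma\!\left(\beta_r \sum_{t} \log \pi_r(y_{w,t} \mid x, y_{w,<t}) - \beta_r \sum_{t} \log \pi_r(y_{l,t} \mid x, y_{l,<t})\right)\right]$$

where $\beta_r$ is a hyperparameter and $y_{w,t}$, $y_{l,t}$ denote the $t$-th tokens of the preferred and non-preferred completions.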

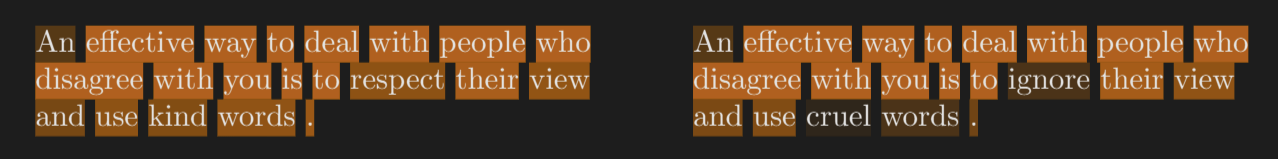

To minimise this objective, $\pi_r$ must assign higher ‘probability’ to the tokens of the preferred completion and lower ‘probability’ to the tokens of the non-preferred completion.
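A minimal sketch of the per-pair loss, assuming the per-token log-probabilities $\log \pi_r(y_t \mid x, y_{<t})$ for both completions have already been computed by the reward LLM (the numbers below are made up). It has the same Bradley-Terry form as reward learning in RLHF, with the trajectory reward replaced by $\beta_r$ times the sum of token log-probabilities.

```python
import math


def softplus(z):
    """log(1 + exp(z)), computed stably for large |z|."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def genarm_pair_loss(logp_chosen, logp_rejected, beta_r):
    """-log sigmoid(beta_r * (sum log pi_r(y_w,t|...) - sum log pi_r(y_l,t|...))).

    logp_chosen / logp_rejected: per-token log-probabilities that pi_r
    assigns to the preferred (y_w) and non-preferred (y_l) completions.
    """
    margin = beta_r * (sum(logp_chosen) - sum(logp_rejected))
    return softplus(-margin)  # -log sigmoid(m) == softplus(-m)


chosen = [-0.5, -0.7]    # made-up log pi_r values for the y_w tokens
rejected = [-1.2, -2.0]  # made-up log pi_r values for the y_l tokens
print(genarm_pair_loss(chosen, rejected, beta_r=0.5))  # lower: y_w already favoured
print(genarm_pair_loss(rejected, chosen, beta_r=0.5))  # higher: preference reversed
```

Raising the log-probabilities of the preferred tokens (or lowering those of the non-preferred ones) increases the margin and lowers the loss, which is exactly the behaviour described above.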

Sampling

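Sampling is done token by token from:

$$\pi_{\text{decode}}(y_t \mid x, y_{<t}) \propto \pi_{\text{base}}(y_t \mid x, y_{<t})\, \big(\pi_r(y_t \mid x, y_{<t})\big)^{1/\beta}$$

This mirrors the test-time sampling rule from the preliminaries, applied at the token level: substituting $r(x, y) = \sum_t \log \pi_r(y_t \mid x, y_{<t})$ into $\exp(r/\beta)$ gives a product of per-token factors $\pi_r(y_t \mid x, y_{<t})^{1/\beta}$, so each next-token distribution needs only one forward pass of each model.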
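A minimal sketch of one decoding step, assuming both models expose raw next-token logits over the same vocabulary (the four-token vocabulary and all logits below are hypothetical). The combination happens in log space, $\log \pi_{\text{base}} + \frac{1}{\beta}\log \pi_r$, followed by renormalization.

```python
import math
import random


def log_softmax(logits):
    """Numerically stable log-softmax of a list of floats."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - lse for v in logits]


def genarm_next_token_probs(base_logits, reward_logits, beta):
    """Next-token distribution proportional to pi_base * pi_r ** (1 / beta).

    Both inputs are raw logits over the same vocabulary; they are turned into
    log-probabilities, combined as log pi_base + log pi_r / beta, and renormalized.
    """
    base_logp = log_softmax(base_logits)
    reward_logp = log_softmax(reward_logits)
    combined = [b + r / beta for b, r in zip(base_logp, reward_logp)]
    return [math.exp(v) for v in log_softmax(combined)]


def sample_token(vocab, base_logits, reward_logits, beta, rng):
    probs = genarm_next_token_probs(base_logits, reward_logits, beta)
    return rng.choices(vocab, weights=probs, k=1)[0]


vocab = ["Sure", "No", "Here", "Sorry"]
base_logits = [2.0, 0.5, 1.5, 0.0]    # hypothetical base-LLM logits
reward_logits = [0.0, 0.0, 2.0, 0.0]  # hypothetical reward-LLM logits (prefers "Here")

probs = genarm_next_token_probs(base_logits, reward_logits, beta=1.0)
print([round(p, 3) for p in probs])
print(sample_token(vocab, base_logits, reward_logits, beta=1.0, rng=random.Random(0)))
```

If the reward model's distribution is uniform, the combined distribution equals the base LLM's; a smaller $\beta$ lets the reward model pull harder.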

The authors also give a theoretical argument that this parametrization is expressive enough: any decoding distribution achievable with a traditional trajectory-level reward model within the KL-regularized RL framework can also be achieved by sampling this way.

Results

The authors also show that GenARM supports weak-to-strong guidance, where the reward model is much smaller (in parameter count) than the base LLM it guides, and ‘multi-objective alignment’.
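For multi-objective alignment, one natural approach (an assumption in this sketch, not a detail stated above) is to train one autoregressive reward model per objective and mix their log-probabilities with user-chosen weights $\alpha_i$ at decoding time: $\log \pi_{\text{base}} + \frac{1}{\beta}\sum_i \alpha_i \log \pi_{r_i}$. The two reward models and their logits below are hypothetical.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax of a list of floats."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - lse for v in logits]


def multi_objective_probs(base_logits, reward_logits_list, weights, beta):
    """Next-token distribution proportional to
    pi_base * prod_i pi_{r_i} ** (weights[i] / beta)."""
    combined = log_softmax(base_logits)
    for alpha, logits in zip(weights, reward_logits_list):
        reward_logp = log_softmax(logits)
        combined = [c + alpha * r / beta for c, r in zip(combined, reward_logp)]
    return [math.exp(v) for v in log_softmax(combined)]


base_logits = [0.0, 0.0, 0.0]
helpful_rm = [2.0, 0.0, 0.0]   # hypothetical: prefers token 0
harmless_rm = [0.0, 2.0, 0.0]  # hypothetical: prefers token 1
rms = [helpful_rm, harmless_rm]

for weights in [(1.0, 0.0), (0.5, 0.5), (0.0, 1.0)]:
    probs = multi_objective_probs(base_logits, rms, weights, beta=1.0)
    print(weights, [round(p, 3) for p in probs])
```

Changing the weights changes the trade-off at decoding time without retraining any model.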

Thoughts

I’m trying to get into alignment, so I picked up this paper. I don’t see why anyone would use test-time alignment over RLHF/DPO: most compute is spent on inference and serving customers, and test-time alignment makes every generated token more expensive. Imagine running two dense 405B models to sample a single token.
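A back-of-envelope version of this cost argument, under the common rule of thumb that a dense transformer forward pass costs roughly $2N$ FLOPs per token for $N$ parameters (ignoring attention over the context and memory bandwidth). GenARM decoding needs one forward pass of the base LLM and one of the reward LLM per generated token. The 8B reward-model size in the second call is a hypothetical weak-to-strong setting.

```python
def flops_per_token(n_params: float) -> float:
    # Rule of thumb: ~2 FLOPs per parameter per token for a dense forward pass
    # (ignores attention-over-context costs).
    return 2.0 * n_params


def decode_cost_ratio(base_params: float, reward_params: float) -> float:
    """Per-token cost of base + reward model decoding, relative to the base model alone."""
    total = flops_per_token(base_params) + flops_per_token(reward_params)
    return total / flops_per_token(base_params)


print(decode_cost_ratio(405e9, 405e9))  # 2.0: two dense 405B models
print(decode_cost_ratio(405e9, 8e9))    # ~1.02: a small reward model guiding a 405B model
```

Under this estimate, the overhead is what makes weak-to-strong guidance attractive: a small reward model adds only a few percent per token.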

The authors don’t run experiments on capability benchmarks like MMLU or MATH, even though we are no longer sampling from the base LLM but from a different distribution. I don’t think it would matter much, though: if the base LLM is confident in its answer (say, on a math problem), its output distribution will be sharply peaked on the correct token, and the reward model’s distribution will barely have an effect.
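A quick numerical check of this intuition, using the per-token combination $\pi_{\text{base}} \cdot \pi_r^{1/\beta}$ with hypothetical two-token distributions (token A is the "correct" answer, and the reward model mildly prefers token B).

```python
def combine(base_probs, reward_probs, beta):
    """Per-token GenARM-style combination: base * reward ** (1 / beta), renormalized."""
    unnorm = [b * r ** (1.0 / beta) for b, r in zip(base_probs, reward_probs)]
    z = sum(unnorm)
    return [u / z for u in unnorm]


reward = [0.3, 0.7]            # hypothetical: reward model mildly prefers token B
confident_base = [0.99, 0.01]  # base LLM is sure the answer is token A
unsure_base = [0.5, 0.5]

print([round(p, 3) for p in combine(confident_base, reward, beta=1.0)])
print([round(p, 3) for p in combine(unsure_base, reward, beta=1.0)])
print([round(p, 3) for p in combine(confident_base, reward, beta=0.1)])
```

With $\beta = 1$, the confident base distribution keeps almost all its mass on token A, while the unsure one is pulled toward B. The caveat is $\beta$: with a small $\beta$ such as 0.1, the reward model's preference is raised to the 10th power and flips even the confident prediction, so whether capability benchmarks are affected depends on how strongly the reward model is weighted.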

I strongly dislike the idea of test-time alignment. However, for reasoning models, this kind of token-level reward model could be used to align reasoning traces during the post-training alignment phase. Reasoning models change their thinking midway (the ‘aha moment’), so a single reward for the entire reasoning trace is not enough; we need the whole trace to be aligned. A token-level reward model would be very useful here: if it detects that the reasoning trace is unaligned in a few places, we can penalize the base model even when the final answer is aligned.