Paper: arXiv

Authors: Bidipta Sarkar, Warren Xia, C. Karen Liu, Dorsa Sadigh

Date of Publication: 9th February 2025

Overview

The authors use reinforcement learning to teach language models to play Among Us via self-play. They construct a simplified Among Us environment: the world is a grid in which each cell represents a room, agents can move between adjacent rooms, and there is one imposter among several crewmates. Everything else follows the actual game. Among Us is a team-based zero-sum game in which reward arrives only at the end of the game (episode). The space of actions available to crewmates is vast, and even non-optimal actions can still lead to a win, so the reward signal on its own is too sparse for effective training. The authors therefore introduce a listening loss and a speaking loss that encourage models to listen, speak and ‘reason’ during the discussion phase and to vote accurately.

The Setup

Role Assignment

At the start of the game, one agent is randomly assigned as the imposter and the other agents are assigned as crewmates. Each crewmate is randomly assigned tasks. Throughout the paper, the authors restrict themselves to games with a single imposter.

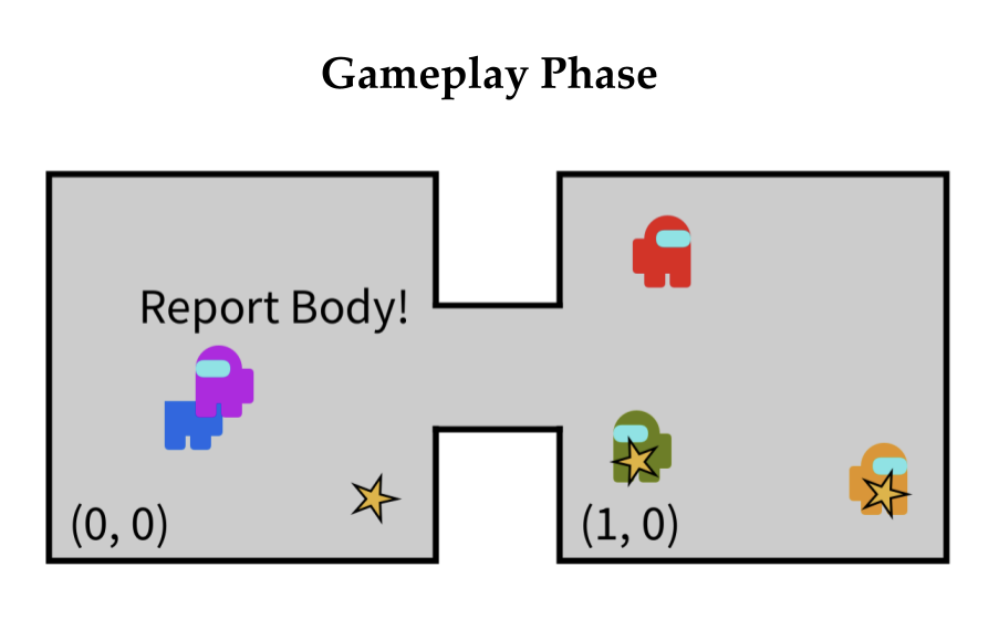

Gameplay Phase

The world consists of a grid of rooms. During the gameplay phase, players (or agents; the terms are used interchangeably) can move to an adjacent room by taking a movement action such as "go north" (any cardinal direction), or they can simply stay put by taking the action "wait".

All players (crewmates and imposters alike) receive an observation at each timestep describing everything present in their current room at that timestep.

Players can complete a task in the room they are in by taking the action "do task". While doing a task, however, they cannot observe the room for a set number of timesteps, so they will not notice if an imposter kills a crewmate in that room in the meantime. All tasks are indistinguishable from one another.

Imposters can kill a crewmate by choosing to kill a player who is in the same room. After a kill, they must wait out a fixed cooldown of several timesteps before they can kill again.

Crewmates can report a dead body (a player killed by the imposter) by taking a report action on a corpse present in the same room.

This is fairly standard Among Us gameplay, except that all tasks are identical and players can only move to an adjacent room.
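A minimal sketch of how the legal actions in this simplified world might be enumerated. Everything here is an assumption for illustration: rooms are (x, y) cells of a width-by-height grid, "go north" increases y, and the "kill"/"report" strings and the `legal_actions` helper are hypothetical (only "go ...", "wait" and "do task" appear in the example observation quoted later). Kill cooldowns and task-induced blindness are left out.

```python
from typing import Dict, List, Tuple

Room = Tuple[int, int]  # (x, y) grid cell; assumed coordinate convention

# Assumed orientation: north/south change y, east/west change x.
DIRECTIONS: Dict[str, Tuple[int, int]] = {
    "north": (0, 1),
    "south": (0, -1),
    "east": (1, 0),
    "west": (-1, 0),
}


def legal_actions(room: Room, width: int, height: int, has_task: bool,
                  is_imposter: bool, crewmates_here: List[str],
                  bodies_here: List[str]) -> List[str]:
    """Hypothetical helper: list the text actions open to one player in `room`."""
    x, y = room
    actions = []
    for name, (dx, dy) in DIRECTIONS.items():
        nx, ny = x + dx, y + dy
        if 0 <= nx < width and 0 <= ny < height:  # only adjacent rooms on the grid
            actions.append(f"go {name}")
    actions.append("wait")
    if has_task:  # only crewmates are assigned tasks
        actions.append("do task")
    if is_imposter:
        actions.extend(f"kill {p}" for p in crewmates_here)
    else:
        actions.extend(f"report {b}" for b in bodies_here)
    return actions


print(legal_actions((0, 1), width=1, height=3, has_task=True,
                    is_imposter=False, crewmates_here=[], bodies_here=[]))
# ['go north', 'go south', 'wait', 'do task']
```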

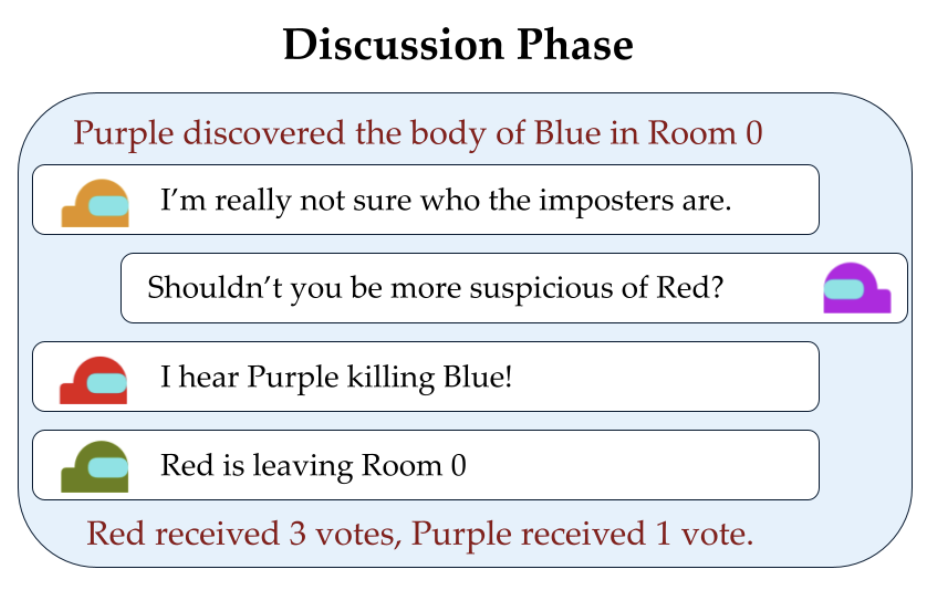

Discussion Phase

During the discussion phase, the game cycles through all players twice in a random order. On their turn, each player speaks by producing a natural-language message.

In the voting phase that follows the discussion, each player can vote to eject a player j. The player with the most votes is ejected (simply removed from the game) and the game continues.
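The voting rule can be sketched as a simple tally. The summary does not say what happens on a tie, so ejecting no one on a tie is an assumption, as are the `tally_votes` name and the voter-to-target dictionary used as input.

```python
from collections import Counter
from typing import Dict, Optional


def tally_votes(votes: Dict[str, str]) -> Optional[str]:
    """Hypothetical helper: `votes` maps each voter to the player they vote to eject.

    Returns the player with the most votes, or None if nobody voted or the top
    count is tied (tie handling is an assumption, not from the paper).
    """
    if not votes:
        return None
    ranked = Counter(votes.values()).most_common()
    top_player, top_count = ranked[0]
    if len(ranked) > 1 and ranked[1][1] == top_count:
        return None
    return top_player


print(tally_votes({"Red": "Green", "Blue": "Green", "Green": "Red"}))  # Green
```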

The game ends when there are no imposters left or when the number of imposters is greater than or equal to the number of crewmates.

Reward Structure

Reward is based on whether crewmates or imposters win. If all tasks are completed or the imposter is ejected, the crewmates win with a reward of +1. However, if the number of imposters is ever greater than or equal to the number of crewmates, the imposters win, resulting in a crewmate reward of -1.
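The end-of-game check and terminal reward described above fit in a few lines. This is a sketch with hypothetical helper names; because the game is zero-sum, the imposter's reward is taken to be the negation of the crewmates'. Checking the crewmate win conditions first is an assumption for the unlikely case where both conditions hold at once.

```python
from typing import Optional


def crewmate_reward(num_imposters: int, num_crewmates: int,
                    all_tasks_done: bool) -> Optional[int]:
    """Return the crewmates' terminal reward, or None while the game continues."""
    if all_tasks_done or num_imposters == 0:  # tasks finished or imposter ejected
        return 1
    if num_imposters >= num_crewmates:  # imposters reach parity with crewmates
        return -1
    return None


def imposter_reward(crew_reward: Optional[int]) -> Optional[int]:
    """Zero-sum: the imposter receives the negation of the crewmate reward."""
    return None if crew_reward is None else -crew_reward


print(crewmate_reward(1, 1, all_tasks_done=False))  # -1
```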

The reward signal is therefore weak: crewmates can take sub-optimal actions and still end up winning, and the game outcome alone is not informative enough to reinforce high-quality discussion between players during the discussion phase.

Training LLM Crewmates in Among Us ඞ

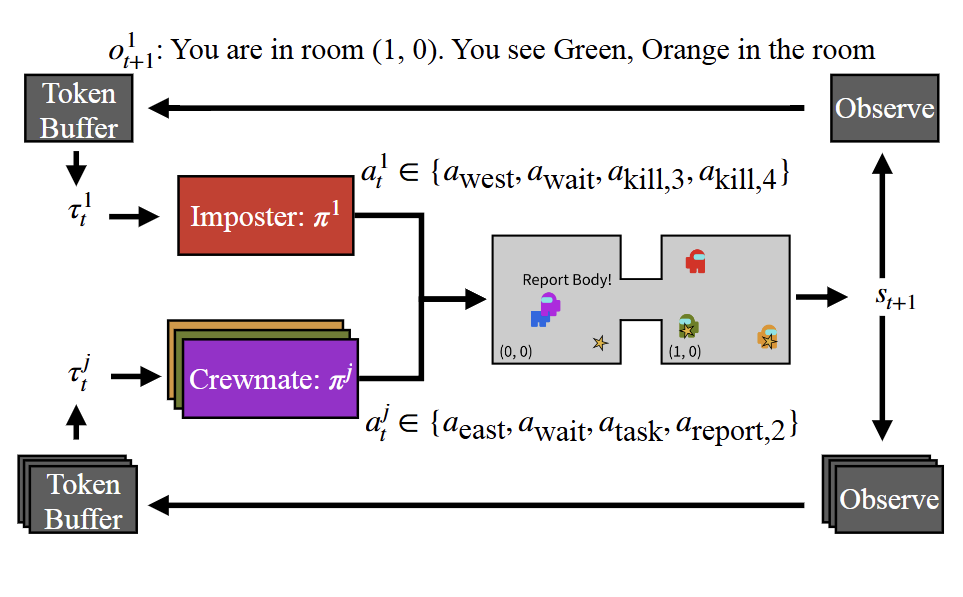

Let $\tau_t^i = (o_0^i, a_0^i, o_1^i, a_1^i, \ldots, o_t^i)$ be the trajectory of player $i$ up to time $t$, where $o_t^i$ and $a_t^i$ represent the observation at timestep $t$ and the action taken by the player at timestep $t$. By modelling actions and observations as natural language, the authors can use an LLM as the policy.

$\pi_i(a \mid \tau_t^i)$ is the policy of agent $i$: it defines a probability distribution over all possible actions $a$ given the trajectory $\tau_t^i$.
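One way to turn an LLM into such a policy is to render the trajectory as text and score each legal action string with the model, renormalizing over the legal set. This is a hedged sketch, not the paper's interface: the "Observation:/Action:" prompt layout, the renormalization over legal actions, and `logprob_fn` (a stand-in for the RWKV model's log-likelihood of a continuation) are all assumptions.

```python
import math
from typing import Callable, List, Tuple


def format_trajectory(steps: List[Tuple[str, str]], current_obs: str) -> str:
    """Render past (observation, action) pairs plus the newest observation as one prompt."""
    lines = []
    for obs, act in steps:
        lines.append(f"Observation: {obs}")
        lines.append(f"Action: {act}")
    lines.append(f"Observation: {current_obs}")
    lines.append("Action:")  # the model continues from here
    return "\n".join(lines)


def action_distribution(prompt: str, legal: List[str],
                        logprob_fn: Callable[[str, str], float]) -> List[float]:
    """Softmax over the model's log-likelihood of each legal action string."""
    scores = [logprob_fn(prompt, " " + a) for a in legal]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def toy_logprob(prompt: str, continuation: str) -> float:
    """Toy stand-in for the language model: shorter continuations are more likely."""
    return -float(len(continuation))


prompt = format_trajectory([("You are in room (0, 1).", "wait")], "You are in room (0, 1).")
probs = action_distribution(prompt, ["wait", "do task"], toy_logprob)
print([round(p, 3) for p in probs])  # [0.953, 0.047]
```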

The authors use a pre-trained RWKV language model as the base policy for both crewmates and imposters.

RL in Among Us

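The plain RL loss that the authors use is:

$$\mathcal{L}_{\text{RL}}(\pi_i) = -\mathbb{E}_{\tau \sim \pi}\Big[\sum_{t} \gamma^t r_t^i\Big] + \beta\, \mathbb{E}_{\tau \sim \pi}\Big[\sum_{t} D_{\text{KL}}\big(\pi_i(\cdot \mid \tau_t^i) \,\|\, \pi_{\text{RWKV}}(\cdot \mid \tau_t^i)\big)\Big]$$

Here $\pi_{\text{RWKV}}$ is the base policy of the pre-trained RWKV language model and $\pi_i$ is the policy of the agent. The soft KL divergence term, weighted by $\beta$, keeps the model from ‘wandering away’ from the base pre-trained RWKV. The authors report that without the KL penalty, the models started discussing in cryptic languages instead of English. Since they wanted the discussions to stay in English, they used this soft KL penalty to discourage the policy's output distribution from drifting too far from that of the base RWKV model.

The first term is the standard RL loss with discount factor $\gamma$. The agent receives a reward $r_t^i$ of +1 at the end of the game if it won and -1 if it lost.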
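To make the two terms concrete, here is a sketch that evaluates a single-episode surrogate for this loss from per-step log-probabilities. Assumptions: the policy term is a REINFORCE-style estimate that weights each action's log-probability by its discounted return-to-go (dropping the extra $\gamma^t$ factor, as is common in practice), and the KL term uses the simple sampled estimate $\log \pi_i - \log \pi_{\text{RWKV}}$ at the actions taken. The paper's actual optimizer may differ, and in practice these lists would be tensors so the surrogate can be differentiated.

```python
from typing import List


def discounted_returns(rewards: List[float], gamma: float) -> List[float]:
    """Return-to-go G_t = r_t + gamma * G_{t+1}, computed backwards."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def rl_surrogate(logp: List[float], logp_base: List[float],
                 rewards: List[float], gamma: float, beta: float) -> float:
    """REINFORCE-style policy term plus a sampled KL(pi_i || pi_RWKV) penalty."""
    returns = discounted_returns(rewards, gamma)
    policy_term = -sum(lp * g for lp, g in zip(logp, returns))
    # Sampled KL estimate: log pi_i(a_t) - log pi_RWKV(a_t) at the actions actually taken.
    kl_term = sum(lp - lb for lp, lb in zip(logp, logp_base))
    return policy_term + beta * kl_term


# Sparse reward: +1 only at the final step of a won episode.
print(discounted_returns([0.0, 0.0, 1.0], gamma=0.5))  # [0.25, 0.5, 1.0]
```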

Enhancing Communication of Crewmates

The reward in the above loss is too sparse to train the policy properly. How can the loss be designed so that players have a fruitful discussion during the discussion phase?

Listening Loss

During the discussion phase, after each message is sent in the ‘chat’, every crewmate is asked to predict who the imposter is. The prediction is not posted to the ‘chat’; it happens in the background. Each crewmate is trained with a supervised loss that rewards assigning high probability to the true imposter. In this way, crewmates are pushed to listen to the messages in the discussion phase, reason about them and predict correctly (otherwise they are penalized by the loss).

$$\mathcal{L}_L(\pi_i, \tau_t^i) = -\log \pi_i(a^{*} \mid \tau_t^i)$$

where $a^{*}$ is the action representing choosing the correct imposter $j^{*}$.

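Adding the listening loss directly to the RL loss gives a policy that minimizes:

$$\mathcal{L}_{\text{RL+L}}(\pi_i) = \mathcal{L}_{\text{RL}}(\pi_i) + \lambda_L\, \mathbb{E}_{\tau \sim \pi}\Big[\sum_{t} \mathcal{L}_L(\pi_i, \tau_t^i)\Big]$$

where $\lambda_L$ is the hyperparameter controlling the strength of the listening loss, and $\mathcal{L}_L$ is non-zero only during the discussion phase, when the crewmates are asked to predict the imposter.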
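A minimal sketch of the listening loss for one prediction, together with the combined objective (listening terms summed over prediction steps). It assumes each crewmate's guess is a softmax over scores for the candidate players; in the paper the probability comes from the language model itself, and the score dictionary and function names here are hypothetical.

```python
import math
from typing import Dict, List


def listening_loss(scores: Dict[str, float], imposter: str) -> float:
    """-log pi(a* | tau): cross-entropy of the true imposter under a softmax over candidates."""
    m = max(scores.values())  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(s - m) for s in scores.values()))
    return log_z - scores[imposter]


def rl_plus_listening(rl_loss: float, listening_terms: List[float], lambda_l: float) -> float:
    """RL loss plus lambda_L times the listening losses from discussion-phase predictions."""
    return rl_loss + lambda_l * sum(listening_terms)


# A uniform guess over two suspects costs log 2; a confident correct guess costs almost nothing.
print(round(listening_loss({"Green": 0.0, "Red": 0.0}, "Green"), 4))  # 0.6931
```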

Speaking Loss

How do we introduce a loss which would encourage the agents to speak and give out correct information?

Let $B_t$ be the sum of all living crewmates' beliefs:

$$B_t = \sum_{k \in C_t} \pi_k(a^{*} \mid \tau_t^k)$$

where $a^{*}$ represents voting out the correct imposter and $C_t$ is the set of crewmates alive at the current timestep $t$. Let $t'$ be the previous belief-querying timestep.

The reward for the crewmate who just finished speaking is:

$$r_t^{s} = B_t - B_{t'}$$

The reward is therefore higher when the message increases the sum of beliefs. The speaking agent is incentivized to give correct information so that the other crewmates' predictions improve, which earns it a high reward.
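The belief sum and speaking reward are simple arithmetic once each living crewmate's probability of voting out the true imposter is available. A sketch with hypothetical names, where those probabilities are passed in as a dictionary:

```python
from typing import Dict, Iterable


def belief_sum(p_correct: Dict[str, float], alive_crewmates: Iterable[str]) -> float:
    """B_t: sum of each living crewmate's probability of voting out the true imposter."""
    return sum(p_correct[k] for k in alive_crewmates)


def speaking_reward(b_now: float, b_prev: float) -> float:
    """r_t^s = B_t - B_{t'}: positive when the message raised belief in the true imposter."""
    return b_now - b_prev


alive = ["Red", "Blue"]
before = belief_sum({"Red": 0.25, "Blue": 0.25}, alive)  # B_{t'} = 0.5
after = belief_sum({"Red": 0.5, "Blue": 0.75}, alive)    # B_t = 1.25
print(speaking_reward(after, before))  # 0.75
```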

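The speaking loss is the negative expected discounted sum of these speaking rewards:

$$\mathcal{L}_S(\pi_i) = -\mathbb{E}_{\tau \sim \pi}\Big[\sum_{t \in T_i^{s}} \gamma^t r_t^{s}\Big]$$

where $T_i^{s}$ denotes the timesteps at which crewmate $i$ has just finished speaking.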

Loss for Imposter

How do we train the imposter?

There is no listening loss for the imposter, since it already knows who the imposter is and has nothing to deduce during discussion. The speaking loss for the imposter is the negative of the speaking loss for crewmates: if the crewmates' belief drops after the imposter speaks, it did a good job of deceiving them.
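Putting the speaking reward and the imposter's sign flip together, a REINFORCE-style surrogate for the speaking loss might look like the sketch below. Assumptions: each message is treated as a one-step decision rewarded only by the belief change it causes, discounting is ignored, and the function name and inputs are hypothetical.

```python
from typing import List


def speaking_surrogate(logp_msgs: List[float], b_before: List[float],
                       b_after: List[float], is_imposter: bool) -> float:
    """Weight each message's log-probability by the belief change it caused.

    Crewmates are rewarded for raising belief in the true imposter; the imposter
    gets the negated reward, so it is trained to lower that belief.
    """
    sign = -1.0 if is_imposter else 1.0
    rewards = [sign * (after - before) for before, after in zip(b_before, b_after)]
    # Minimizing this increases the log-probability of high-reward messages.
    return -sum(r * lp for r, lp in zip(rewards, logp_msgs))


logp = [-1.0, -2.0]
print(speaking_surrogate(logp, [0.5, 1.0], [1.0, 1.5], is_imposter=False))  # 1.5
print(speaking_surrogate(logp, [0.5, 1.0], [1.0, 1.5], is_imposter=True))   # -1.5
```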

World Modeling loss

Throughout the game, the LLM predicts action tokens for every action it takes, as described in the sections above, and it never needs to output free-form natural language during the gameplay phase. As a result, the LLMs lost the ability to speak English and kept outputting action tokens during the discussion phase.

The authors found that adding a loss in which the agent predicts the next observation helped keep the discussion in English. All observations are in natural language, so learning to predict them kept the models fluent in English during the discussion phase.

An example observation is:

You are in room (0, 1). You see Player Green leaving to room 2. You have the following tasks in this room: Task 2.

You can perform any of the following actions: go north; wait; do task; go south; wait

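The world modeling loss is:

$$\mathcal{L}_{\text{WM}}(\pi_i) = -\mathbb{E}_{\tau \sim \pi}\Big[\sum_{t} \log \pi_i(o_{t+1}^i \mid \tau_t^i, a_t^i)\Big]$$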
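At the token level, this amounts to a next-token cross-entropy restricted to observation tokens. The sketch below assumes per-token log-probabilities and a mask marking which tokens belong to observations; the masking scheme and the `world_model_loss` name are assumptions, and the loss is summed over tokens to match the equation (averaging per token is a common alternative).

```python
from typing import List


def world_model_loss(token_logprobs: List[float], is_obs_token: List[bool]) -> float:
    """Negative log-likelihood of the observation tokens only; action tokens are skipped."""
    if len(token_logprobs) != len(is_obs_token):
        raise ValueError("each token needs exactly one mask entry")
    return -sum(lp for lp, is_obs in zip(token_logprobs, is_obs_token) if is_obs)


# Tokens 0 and 2 belong to the next observation, token 1 to an action.
print(world_model_loss([-1.0, -0.5, -2.0], [True, False, True]))  # 3.0
```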

RL modeling structure of the game.

Results

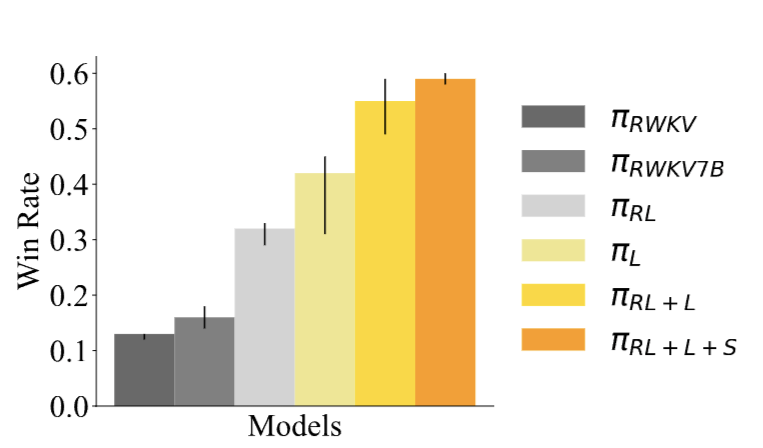

The following graph shows the win rate of crewmates. Among the compared models are the base RWKV 1.5B model and the base RWKV 7B model, neither of which received any training on Among Us.

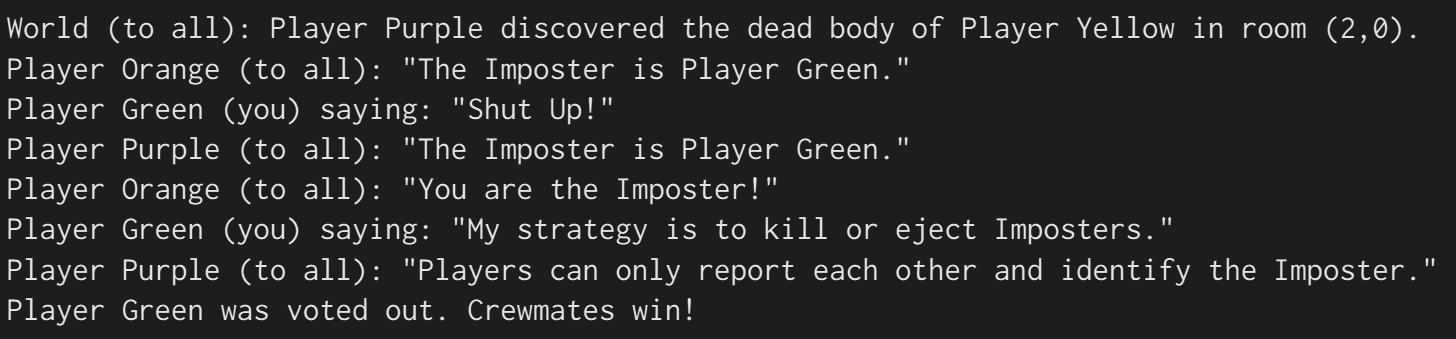

An example discussion phase (Green is the imposter in this game).