Paper: arxiv

Authors: Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun

Date of Publication: 16th March 2016

This paper is on Ilya Sutskever's list of 30 papers to read, which is where I found it. It was authored by the famous Kaiming He.

Deep Residual Networks

Deep residual units are defined as:

$$
y_l = h(x_l) + \mathcal{F}(x_l, \mathcal{W}_l), \qquad x_{l+1} = f(y_l)
$$

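where $x_l$ and $x_{l+1}$ are the input and output of the $l$-th unit, $\mathcal{F}$ is a residual function, $h$ is the shortcut mapping, and $f$ is the activation applied after the addition. If $h(x_l) = x_l$ is an identity mapping and $f$ is ReLU, this is the classic residual block from the original ResNet paper.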
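As a minimal sketch (not the authors' code), one residual unit can be written in plain Python on lists of floats. The helper names `residual_unit` and `toy_residual` are hypothetical, and the toy residual function simply scales its input; in a real network $\mathcal{F}$ would be a stack of convolution, BN, and ReLU layers.

```python
from typing import Callable, List

Vector = List[float]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU, the default after-addition activation f."""
    return [max(0.0, a) for a in v]


def identity(v: Vector) -> Vector:
    """Identity mapping, the default shortcut h."""
    return list(v)


def residual_unit(
    x: Vector,
    residual_fn: Callable[[Vector], Vector],
    h: Callable[[Vector], Vector] = identity,
    f: Callable[[Vector], Vector] = relu,
) -> Vector:
    """One residual unit: y_l = h(x_l) + F(x_l, W_l), then x_{l+1} = f(y_l)."""
    shortcut = h(x)
    residual = residual_fn(x)
    y = [s + r for s, r in zip(shortcut, residual)]
    return f(y)


def toy_residual(v: Vector) -> Vector:
    """Hypothetical residual function: multiply every feature by -2."""
    return [-2.0 * a for a in v]


print(residual_unit([1.0, -0.5], toy_residual))  # [0.0, 0.5]
```

Passing `f=identity` turns the same function into the unit analysed in the next section, where both $h$ and $f$ are identities.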

Analysis of Deep Residual Networks

The ReLU in the above image is $f$. Notice that if $f$ were also an identity function (note that $h$ is already an identity, as defined in the ResNet paper), then $x_{l+1} \equiv y_l$, and substituting the definition of $y_l$ from above gives:

$$
x_{l+1} = x_l + \mathcal{F}(x_l, \mathcal{W}_l)
$$

Applying this recursively, for any deeper unit $L$ and any shallower unit $l$ (so $L > l$), we have:

$$
x_L = x_l + \sum_{i=l}^{L-1} \mathcal{F}(x_i, \mathcal{W}_i)
$$

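This form exhibits some nice properties. The feature $x_L$ of any deep unit $L$ is the sum of the outputs of all preceding residual functions plus $x_0$, i.e. $x_L = x_0 + \sum_{i=0}^{L-1} \mathcal{F}(x_i, \mathcal{W}_i)$. This is in contrast to a plain network, where $x_L$ is a series of matrix-vector products, $x_L = \prod_{i=0}^{L-1} W_i x_0$ (ignoring activation functions and normalization).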
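The unrolled form can be checked numerically. This is a sketch under a simplifying assumption: scalar features and hypothetical residual functions $\mathcal{F}_i(x) = w_i \tanh(x)$ with random weights $w_i$. Running the units one by one with identity $h$ and $f$ should give the same $x_L$ as adding all residual outputs to $x_l$ at once.

```python
import math
import random

random.seed(0)

# Hypothetical residual functions F_i(x) = w_i * tanh(x), one per unit.
weights = [random.uniform(-1.0, 1.0) for _ in range(10)]


def residual(i: int, x: float) -> float:
    return weights[i] * math.tanh(x)


def forward(x0: float) -> list:
    """Run every unit with h and f both identity: x_{i+1} = x_i + F_i(x_i)."""
    xs = [x0]
    for i in range(len(weights)):
        xs.append(xs[-1] + residual(i, xs[-1]))
    return xs


xs = forward(0.3)
l, L = 2, 10
# x_L = x_l + sum_{i=l}^{L-1} F_i(x_i); only the summation order differs,
# so the two values agree up to floating-point rounding.
unrolled = xs[l] + sum(residual(i, xs[i]) for i in range(l, L))
print(abs(xs[L] - unrolled) < 1e-12)  # True
```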

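Let $\mathcal{E}$ be the loss function. During backpropagation, the chain rule gives:

$$
\frac{\partial \mathcal{E}}{\partial x_l} = \frac{\partial \mathcal{E}}{\partial x_L}\frac{\partial x_L}{\partial x_l} = \frac{\partial \mathcal{E}}{\partial x_L}\left(1 + \frac{\partial}{\partial x_l}\sum_{i=l}^{L-1}\mathcal{F}(x_i, \mathcal{W}_i)\right)
$$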

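This gradient consists of two additive terms. The term $\frac{\partial \mathcal{E}}{\partial x_L}$ propagates information directly from layer $L$ to layer $l$ without passing through any intermediate weight layers, while the term $\frac{\partial \mathcal{E}}{\partial x_L}\frac{\partial}{\partial x_l}\sum_{i=l}^{L-1}\mathcal{F}(x_i, \mathcal{W}_i)$ propagates through the weight layers in between.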

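The direct term $\frac{\partial \mathcal{E}}{\partial x_L}$ also makes it unlikely that the gradient $\frac{\partial \mathcal{E}}{\partial x_l}$ vanishes, even when the weights in the middle layers are arbitrarily small, because $\frac{\partial}{\partial x_l}\sum_{i=l}^{L-1}\mathcal{F}$ is in general not equal to $-1$ for all samples in a mini-batch.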
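A small numerical sketch of this claim, assuming scalar units with hypothetical residual functions $\mathcal{F}_i(x) = w_i \tanh(x)$: the gradient $\partial x_L / \partial x_0$ is accumulated with the chain rule for a residual stack and for a plain stack that uses the same tiny weights. This is an illustration under those assumptions, not an experiment from the paper.

```python
import math


def grad_factor(weights, x0, residual=True):
    """Return dx_L/dx_0 for scalar units with F_i(x) = w_i * tanh(x).

    residual=True : x_{i+1} = x_i + w_i * tanh(x_i)   (identity h and f)
    residual=False: x_{i+1} = w_i * tanh(x_i)         (plain network)
    """
    x, grad = x0, 1.0
    for w in weights:
        local = w * (1.0 - math.tanh(x) ** 2)  # dF_i/dx_i at the current x
        if residual:
            grad *= 1.0 + local  # the "1" comes from the identity shortcut
            x = x + w * math.tanh(x)
        else:
            grad *= local
            x = w * math.tanh(x)
    return grad


tiny = [1e-3] * 50  # arbitrarily small weights in 50 units
print(grad_factor(tiny, 0.5, residual=True))   # stays close to 1
print(grad_factor(tiny, 0.5, residual=False))  # collapses towards 0
```

With a residual stack each local factor is $1 + \partial \mathcal{F}_i / \partial x_i$, so small weights leave the gradient near 1; the plain stack multiplies 50 factors no larger than $10^{-3}$.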

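Now suppose the shortcut is a scaled identity instead, $h(x_l) = \lambda_l x_l$ (with $f$ still an identity). Then:

$$
x_{l+1} = \lambda_l x_l + \mathcal{F}(x_l, \mathcal{W}_l)
$$

Applying this recursively gives

$$
x_L = \left(\prod_{i=l}^{L-1}\lambda_i\right) x_l + \sum_{i=l}^{L-1}\hat{\mathcal{F}}(x_i, \mathcal{W}_i)
$$

where $\hat{\mathcal{F}}$ absorbs the scalars into the residual functions.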

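The backpropagation equation then becomes:

$$
\frac{\partial \mathcal{E}}{\partial x_l} = \frac{\partial \mathcal{E}}{\partial x_L}\left(\prod_{i=l}^{L-1}\lambda_i + \frac{\partial}{\partial x_l}\sum_{i=l}^{L-1}\hat{\mathcal{F}}(x_i, \mathcal{W}_i)\right)
$$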

If $\lambda_i > 1$ for all $i$, the factor $\prod_{i=l}^{L-1}\lambda_i$ becomes exponentially large in a very deep network; if $\lambda_i < 1$ for all $i$, it becomes exponentially small. We would then face exploding and vanishing gradients, respectively.
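To get a feel for the numbers, here is a sketch that assumes the same scale $\lambda$ at every shortcut, so the shortcut factor is simply the product of the per-unit scales $\lambda_i$, i.e. $\lambda$ raised to the number of units. The helper name `shortcut_factor` is hypothetical.

```python
def shortcut_factor(lam: float, num_units: int) -> float:
    """Product of lambda_i over num_units units, with every lambda_i = lam."""
    return lam ** num_units


for lam in (0.9, 1.0, 1.1):
    print(f"lambda={lam}: factor over 100 units = {shortcut_factor(lam, 100):.3g}")
```

Only $\lambda = 1$ keeps the factor at exactly 1; a 10% deviation in either direction already shrinks or inflates it by roughly four orders of magnitude over 100 units.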

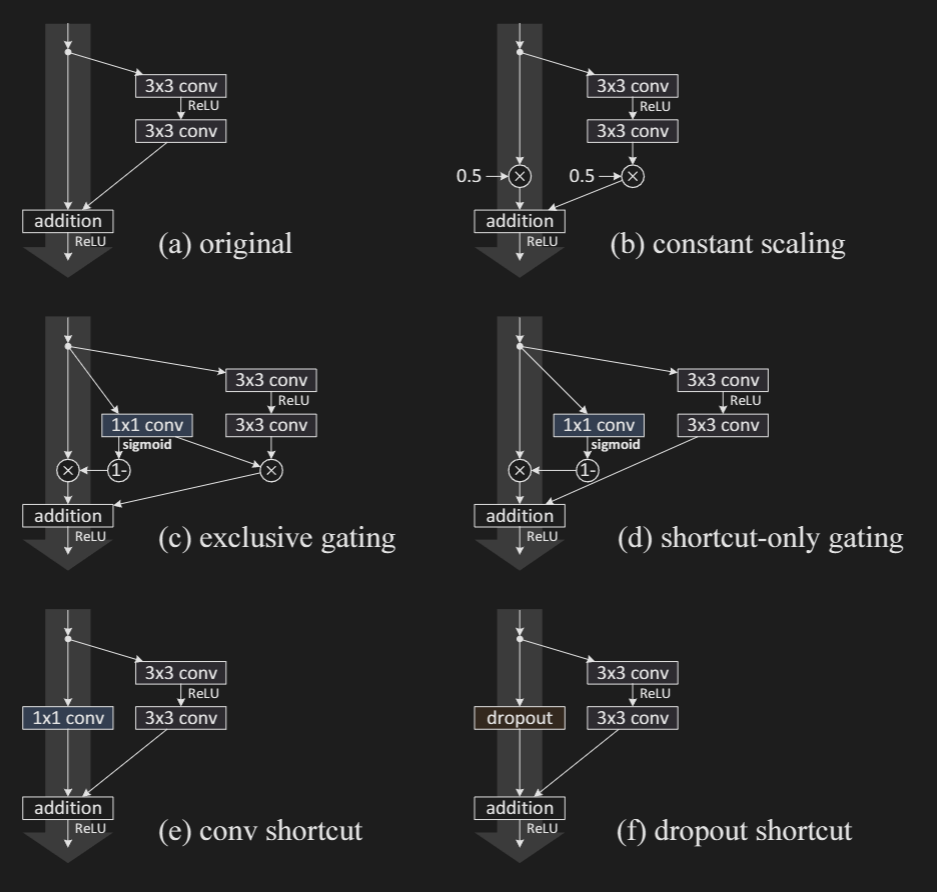

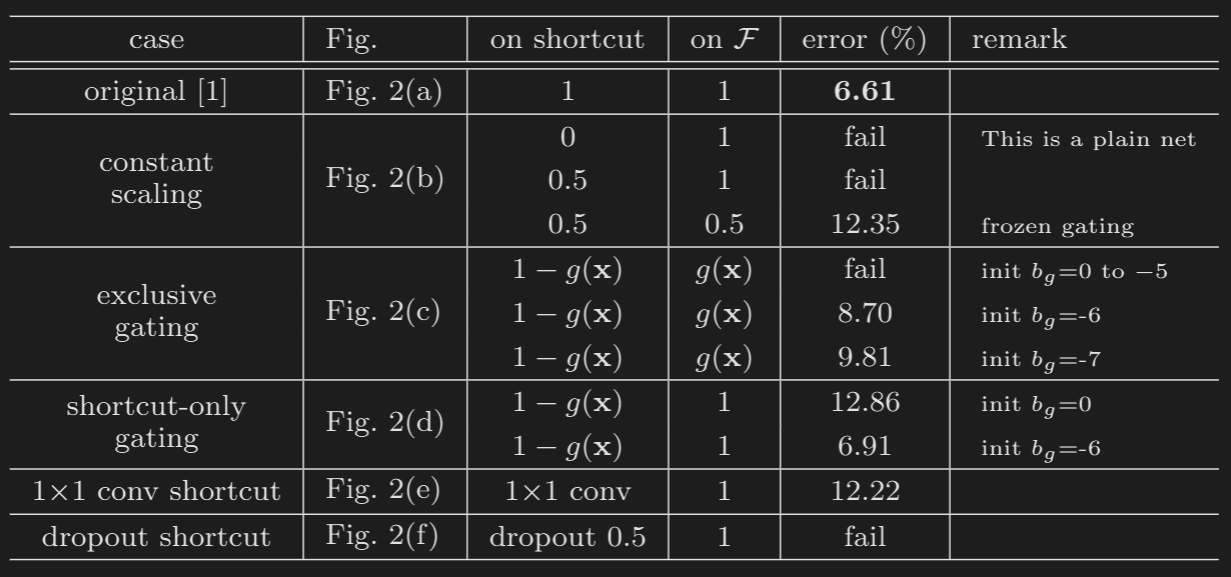

Experiments on Skip Connections

Constant Scaling:

In the case of constant scaling, we simply multiply the shortcut by a constant, $h(x_l) = \lambda x_l$ (the paper uses $\lambda = 0.5$), as described above. The results are in the above table.

Exclusive Gating: We consider a gating function $g(x) = \sigma(W_g x + b_g)$, where $\sigma$ is a sigmoid. As seen in the above figure (c), the $\mathcal{F}$ path is scaled by $g(x)$ and the shortcut path is scaled by $1 - g(x)$.

When $1 - g(x)$ approaches $1$, the gated shortcut connection is closer to identity, which helps information propagation; but in this case $g(x)$ approaches $0$ and suppresses the function $\mathcal{F}$.

Shortcut-only gating: In this case the $\mathcal{F}$ path is not scaled, and only the shortcut path is scaled by $1 - g(x)$, where $g(x) = \sigma(W_g x + b_g)$. The initial value of $b_g$ is crucial here. When $b_g$ is initialized to $0$, the initial expectation of $1 - g(x)$ is $0.5$ (the expectation of $W_g x + b_g$ is $0$, and $\sigma(0) = 0.5$). The network converges to a poor result of 12.86% error.

But if $b_g$ is initialized to a very negative value ($b_g = -6$ in this case), $1 - g(x)$ is much closer to $1$, and the shortcut is close to an identity mapping. The result, 6.91% error, is close to the baseline ResNet-110 (6.61%).
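A rough sketch of why the bias initialization matters. It assumes that at initialization $W_g x \approx 0$, so $g(x) \approx \sigma(b_g)$, and it treats the shortcut scale as the same in every unit; ResNet-110 on CIFAR has $3 \times 18 = 54$ residual units. The names `shortcut_gate` and `NUM_UNITS` are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def shortcut_gate(b_g: float) -> float:
    """Shortcut scale 1 - g(x), assuming W_g x ~ 0 so that g(x) ~ sigmoid(b_g)."""
    return 1.0 - sigmoid(b_g)


NUM_UNITS = 54  # ResNet-110 on CIFAR: 3 stages x 18 residual units

for b_g in (0.0, -6.0):
    per_unit = shortcut_gate(b_g)
    print(f"b_g={b_g:+.0f}: per-unit scale {per_unit:.4f}, "
          f"product over {NUM_UNITS} units {per_unit ** NUM_UNITS:.3g}")
```

With $b_g = 0$ the shortcut behaves like constant scaling by $0.5$, whose product over 54 units is vanishingly small; with $b_g = -6$ the product stays close to 1.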

Dropout: Dropout on the shortcut (with ratio 0.5) statistically imposes a scale $\lambda$ with an expectation of $0.5$. As with constant scaling, this impedes signal propagation, and the network fails to learn.

Experiments on Activation Functions

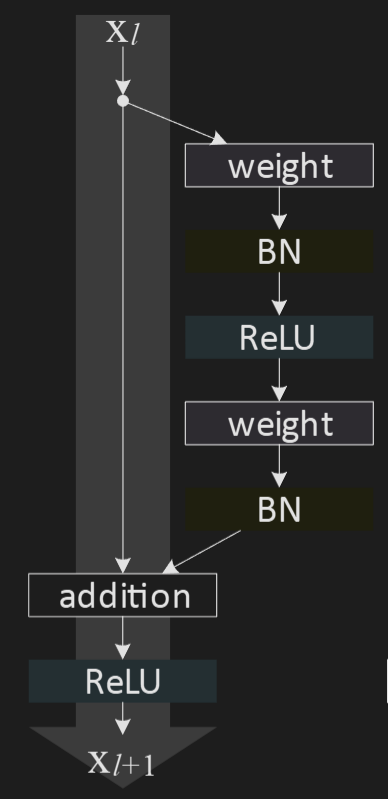

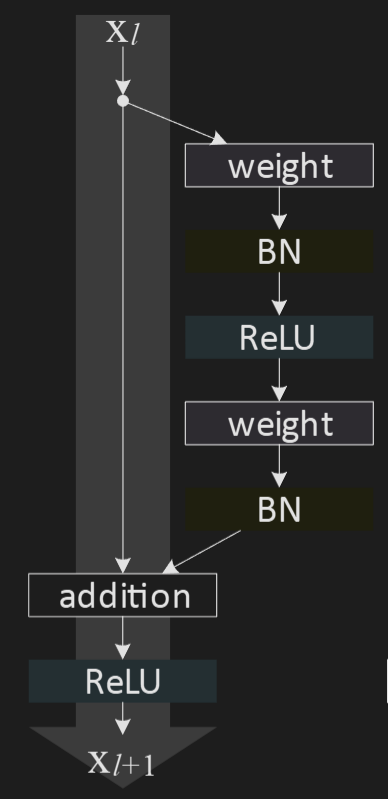

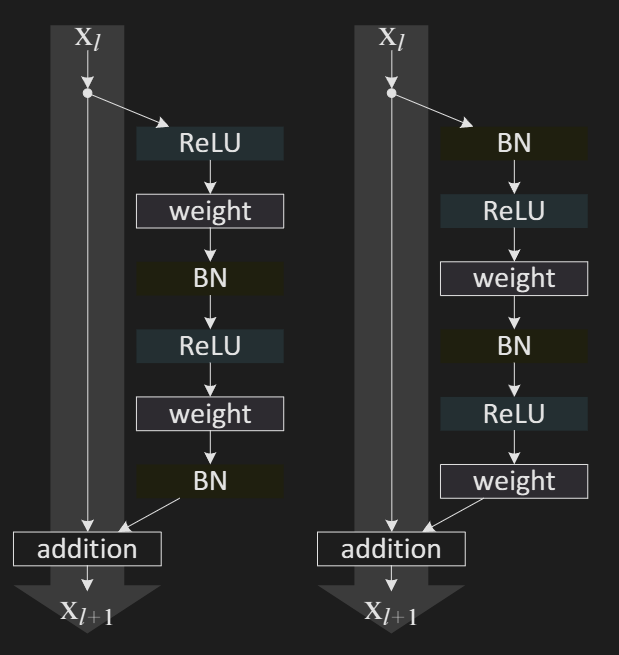

Throughout this section, the original ResNet block, as presented in the original paper, serves as the baseline.

As theorised (and as seen in the earlier experiments), we also need $f$ to be an identity mapping. Before doing that, the authors perform a few more experiments.

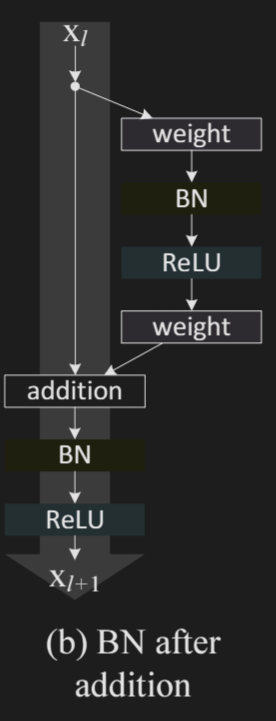

BN after addition

The authors place a BN layer after the addition, as can be seen in the image. The resulting model performs worse than the baseline, because BN alters the signal that passes through the shortcut and impedes information propagation.

ReLU before addition

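Placing a ReLU on the output of $\mathcal{F}$, before the addition, makes the residual non-negative. As a result, the forward signal can only increase monotonically through the layers, whereas a residual function should ideally be able to take negative values too. This hurts performance.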
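A minimal sketch of the monotonicity argument, assuming a single scalar feature and random hypothetical pre-ReLU residual outputs: with an identity shortcut and a ReLU applied to $\mathcal{F}$ before the addition, every unit can only add a non-negative amount.

```python
import random

random.seed(1)


def relu(a: float) -> float:
    return max(0.0, a)


# Hypothetical pre-ReLU residual outputs for one feature across 20 units.
pre_activations = [random.gauss(0.0, 1.0) for _ in range(20)]

x = 0.0
trajectory = [x]
for r in pre_activations:
    x = x + relu(r)  # ReLU applied to F's output before the addition
    trajectory.append(x)

# The signal never decreases from one unit to the next.
print(all(b >= a for a, b in zip(trajectory, trajectory[1:])))  # True
```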

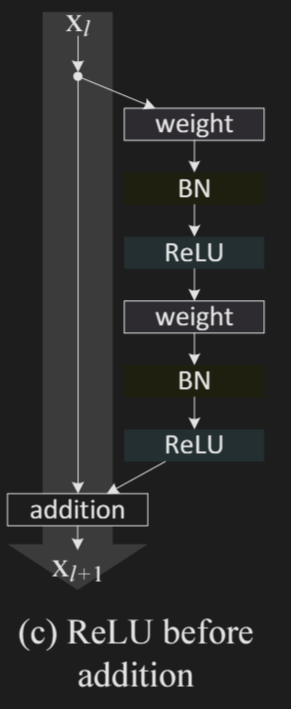

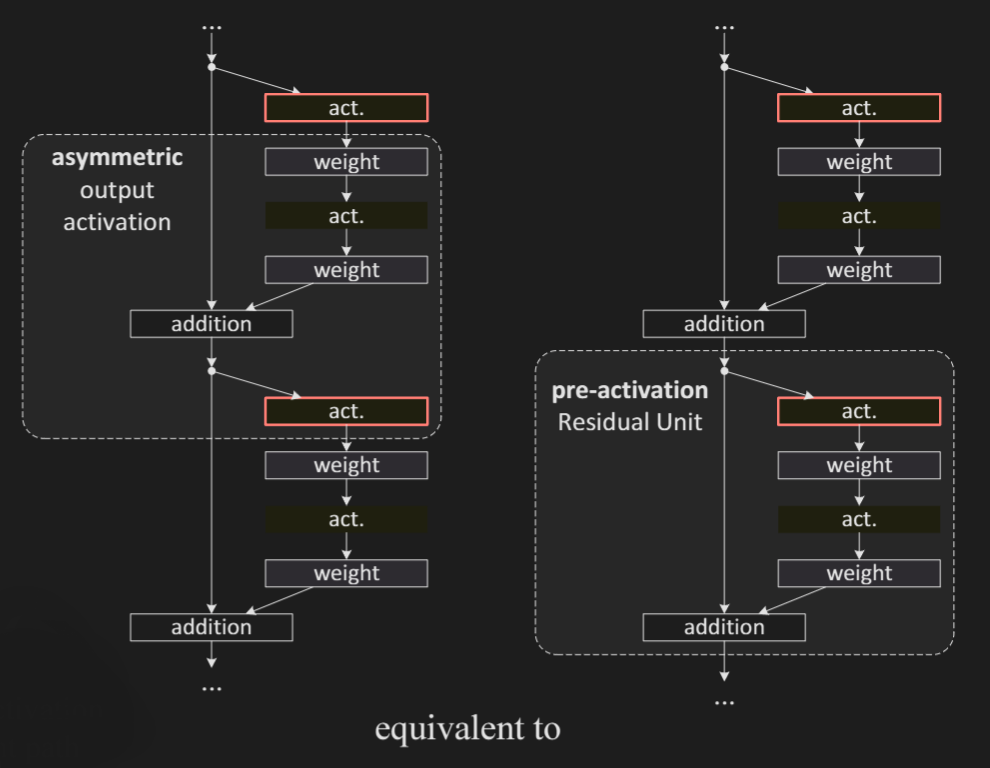

Post-activation vs pre-activation

The original architecture applies an activation function after adding the shortcut to the output of $\mathcal{F}$. From the gradient flow derived above, we can see that having a ReLU after the addition is not optimal, since it breaks the pure identity path between units.

So, instead of applying the activation to the merged signal (and hence to the skip connection too), the authors apply it asymmetrically, only inside $\mathcal{F}$, as a pre-activation of the weight layers. The skip connection is then just an identity with no activation in its way, and the gradient flow derived above holds.

The authors experiment with two such designs: (i) ReLU-only pre-activation, and (ii) full pre-activation, where BN and ReLU are both adopted before the weight layers. Results show that adopting both BN and ReLU leads to better performance.
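The difference in layer ordering can be sketched with toy stand-ins. Assumptions, all hypothetical: a batch holds one scalar feature per sample, BN has no learnable scale or shift, and each weight layer is a multiplication by a scalar instead of a convolution. Setting the second weight `w2` to zero switches the residual branch off, which shows the key property: the full pre-activation unit then passes its input through unchanged, while the original unit still clips it with the after-addition ReLU.

```python
import statistics
from typing import List

Batch = List[float]  # one scalar feature per sample, for illustration only


def bn(batch: Batch, eps: float = 1e-5) -> Batch:
    """Batch normalization without learnable scale/shift (simplification)."""
    mean = statistics.fmean(batch)
    var = statistics.pvariance(batch, mu=mean)
    return [(a - mean) / (var + eps) ** 0.5 for a in batch]


def relu(batch: Batch) -> Batch:
    return [max(0.0, a) for a in batch]


def weight(w: float, batch: Batch) -> Batch:
    """Stand-in for a conv layer: multiply by a scalar weight."""
    return [w * a for a in batch]


def add(a: Batch, b: Batch) -> Batch:
    return [p + q for p, q in zip(a, b)]


def original_unit(x: Batch, w1: float, w2: float) -> Batch:
    """Post-activation: weight, BN, ReLU, weight, BN; add shortcut; then ReLU."""
    r = bn(weight(w2, relu(bn(weight(w1, x)))))
    return relu(add(x, r))


def preact_unit(x: Batch, w1: float, w2: float) -> Batch:
    """Full pre-activation: F = BN, ReLU, weight, BN, ReLU, weight; plain add."""
    r = weight(w2, relu(bn(weight(w1, relu(bn(x))))))
    return add(x, r)  # nothing is applied after the addition


x = [-1.0, 0.5, 2.0]
print(preact_unit(x, 0.7, 0.0))    # [-1.0, 0.5, 2.0]: identity path intact
print(original_unit(x, 0.7, 0.0))  # [0.0, 0.5, 2.0]: ReLU clips the shortcut
```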

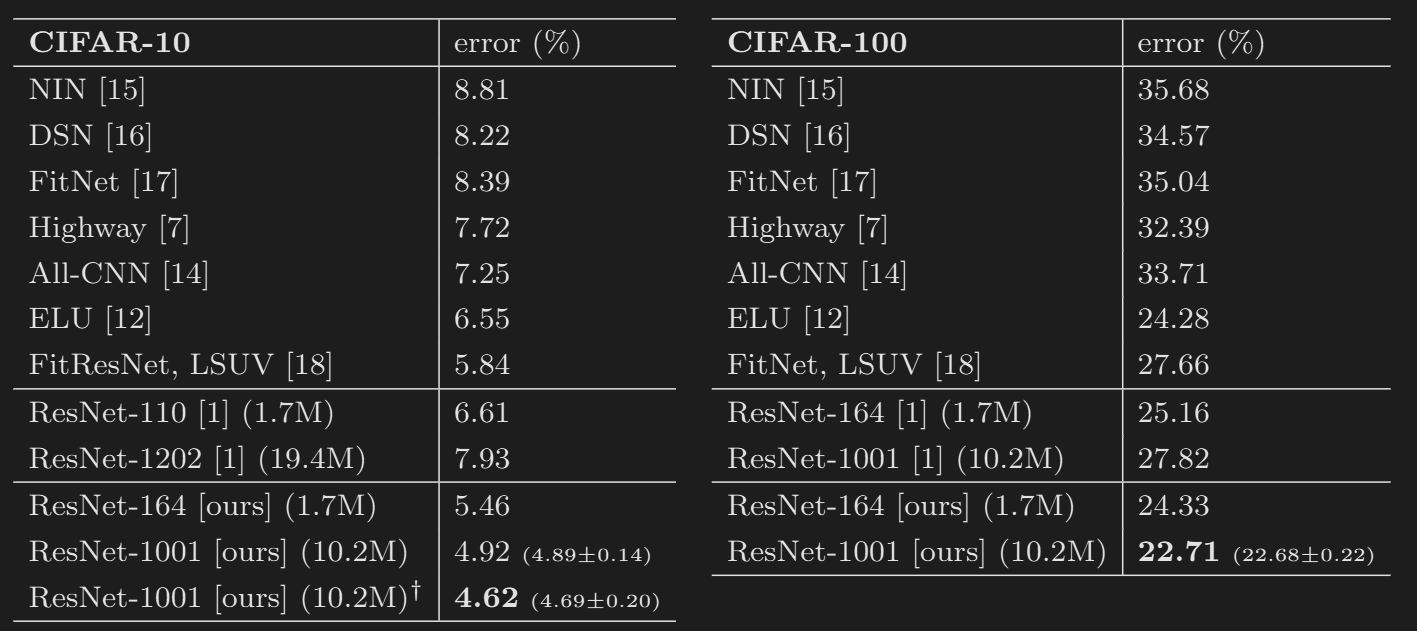

The newly proposed modification beats the original ResNet architecture.

The authors manage to train a ResNet-1001 with this modification. The new variant is easier to train.

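They also claim that it improves regularization: the model reaches a slightly higher training loss at convergence but a lower test error. The regularization is attributed to BN's regularization effect. In the original design, although BN normalizes the signal, its output is immediately added to the shortcut, so the merged signal fed to the next weight layer is not normalized. In the pre-activation design, the inputs to all weight layers are normalized, which fixes that issue.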