Paper: arXiv

Authors: Jiaming Ji, Boyuan Chen, Hantao Lou, Donghai Hong, Borong Zhang, Xuehai Pan, Juntao Dai, Tianyi Qiu, Yaodong Yang

Date of Publication: 4th February 2024

Overview

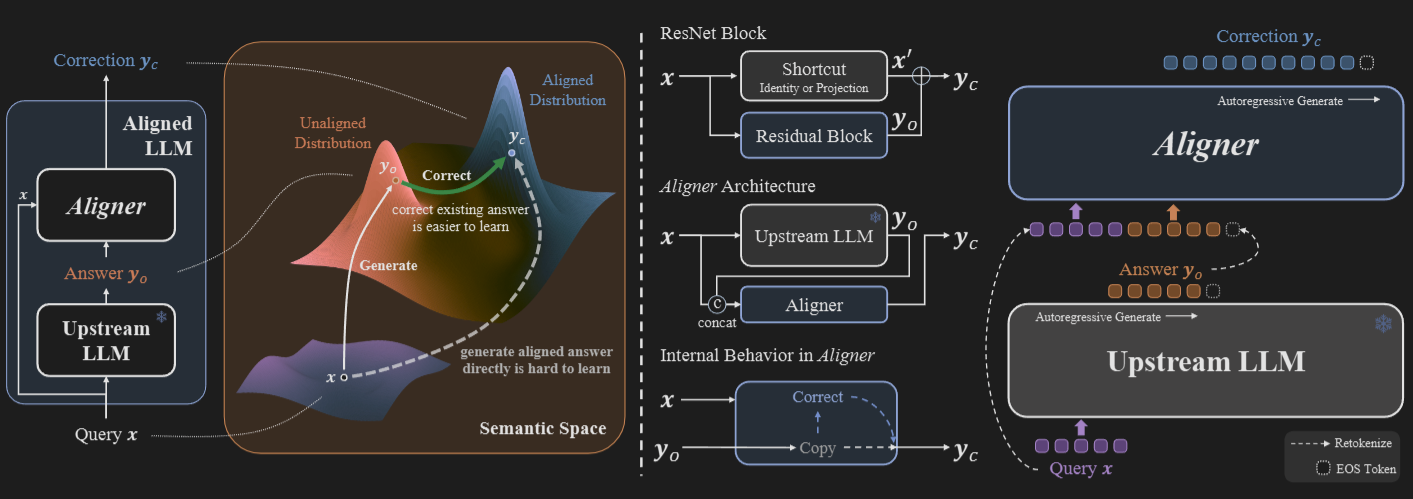

LLMs are being developed and deployed rapidly, and aligning each one via RLHF/DPO costs a lot of time and compute. To address this, the authors introduce Aligner, a decoder-only transformer that is trained once on an RLHF/DPO-style preference dataset. The trained aligner can then align any LLM without access to its parameters, so it also works on models that are only available through an API.

The trained aligner takes the user query and the base model's output as input. Conditioned on both, it predicts an aligned answer to the query. The authors find that the aligner increases the base model's Helpfulness, Harmlessness and Honesty. It also reduces hallucinations and improves performance.

Aligner

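Let the dataset be $\mathcal{M} = \{(x^{(i)}, y_o^{(i)}, y_c^{(i)})\}_{i=1}^{N}$, where $x$ is the user query, $y_o$ is the original answer, and $y_c$ is the corrected answer according to established principles.

Aligner is a seq2seq model $\mu_\phi(y_c \mid y_o, x)$, parameterized by $\phi$, that redistributes the preliminary answers $y_o$ to the aligned answers $y_c$.

Let $\pi_\theta(y_o \mid x)$ be the base LLM that we need to align, and let $\pi'(y_c \mid x)$ be the combined model (the aligner stacked on top of the base LLM). Then, summing over every answer $y_k$ the base model could produce,

$$\pi'(y_c \mid x) = \sum_{y_k} \mu_\phi(y_c \mid y_k, x)\, \pi_\theta(y_k \mid x).$$

Every term in the sum is non-negative, so keeping only the term for the observed answer $y_o$ gives a lower bound:

$$\pi'(y_c \mid x) \ge \mu_\phi(y_c \mid y_o, x)\, \pi_\theta(y_o \mid x).$$

So,

$$-\log \pi'(y_c \mid x) \le -\log \mu_\phi(y_c \mid y_o, x) - \log \pi_\theta(y_o \mid x).$$

By the maximum likelihood principle, we must minimise $-\mathbb{E}_{\mathcal{M}}[\log \pi'(y_c \mid x)]$, which, by the inequality above, is upper-bounded by

$$-\mathbb{E}_{\mathcal{M}}\left[\log \mu_\phi(y_c \mid y_o, x)\right] - \mathbb{E}_{\mathcal{M}}\left[\log \pi_\theta(y_o \mid x)\right].$$

The term $-\mathbb{E}_{\mathcal{M}}[\log \pi_\theta(y_o \mid x)]$ does not depend on the aligner's parameters $\phi$. It belongs to our base LLM, whose parameters we cannot modify anyway.

So, the training objective is

$$\mathcal{L}_{\text{Aligner}}(\phi, \mathcal{M}) = -\mathbb{E}_{\mathcal{M}}\left[\log \mu_\phi(y_c \mid y_o, x)\right].$$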
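The following toy numerical check makes the bound concrete for a single query. It uses made-up distributions: a base model `pi_theta` over three hypothetical drafts, and an aligner `mu_phi` that gives the probability of producing the corrected answer y_c after seeing each draft. The sketch sums over drafts to get the combined model's probability. It then confirms that keeping only the observed draft's term gives a lower bound, and evaluates the aligner-only loss term. The numbers are illustrative and do not come from the paper.

```python
import math

# Made-up numbers for a single query x (illustrative only, not from the paper).
# pi_theta[y_k] = pi_theta(y_k | x): the base model's probability of each draft.
pi_theta = {"draft_a": 0.5, "draft_b": 0.3, "draft_c": 0.2}

# mu_phi[y_k] = mu_phi(y_c | y_k, x): the aligner's probability of producing the
# corrected answer y_c when it is shown draft y_k.
mu_phi = {"draft_a": 0.9, "draft_b": 0.6, "draft_c": 0.1}


def combined_prob(mu: dict, pi: dict) -> float:
    """pi'(y_c | x) = sum over drafts y_k of mu_phi(y_c | y_k, x) * pi_theta(y_k | x)."""
    return sum(mu[y_k] * pi[y_k] for y_k in pi)


y_o = "draft_a"  # the draft recorded in the dataset triple (x, y_o, y_c)

marginal = combined_prob(mu_phi, pi_theta)
lower_bound = mu_phi[y_o] * pi_theta[y_o]  # keep only the observed draft's term

nll_combined = -math.log(marginal)
nll_upper = -math.log(mu_phi[y_o]) - math.log(pi_theta[y_o])

# Only the first term of nll_upper depends on the aligner's parameters phi,
# so it is the quantity the aligner is trained to minimise.
aligner_loss = -math.log(mu_phi[y_o])

print(f"pi'(y_c|x) = {marginal:.3f} >= {lower_bound:.3f}")
print(f"-log pi' = {nll_combined:.3f} <= {nll_upper:.3f}")
print(f"aligner loss term = {aligner_loss:.3f}")
```

In real training, `mu_phi(y_c | y_o, x)` is a product of per-token probabilities from the aligner. The loss is the token-level negative log-likelihood of y_c, averaged over the dataset.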

Aligner takes the user query and the base LLM's answer as input and outputs the aligned answer. It learns to correct the unaligned answer rather than generate an aligned answer from scratch. This is reminiscent of ResNets, which learn a residual on top of an identity mapping. As a result, such an aligner needs fewer parameters than the base LLMs it corrects and is very efficient to train. Train once, plug-and-play on any model.
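The plug-and-play idea fits in a few lines of Python. This minimal sketch assumes both models are plain text-in/text-out callables. `aligned_generate`, the prompt template, and the stand-in models are hypothetical illustrations, not the authors' code or prompt format. The key point is that the aligner only ever sees the base model's text, so the same aligner can sit on top of any base model, including one behind an API.

```python
from typing import Callable

# Any text-in/text-out model: a local LLM, an API client, etc.
Generator = Callable[[str], str]

# Hypothetical prompt template; the paper's actual format may differ.
ALIGNER_TEMPLATE = (
    "Edit the following question-answer pair to make it more helpful and harmless.\n"
    "Question: {query}\nAnswer: {answer}\nCorrected answer:"
)


def aligned_generate(query: str, base_model: Generator, aligner: Generator) -> str:
    """Stack an aligner on top of an arbitrary base model.

    Only the base model's text output is used, so its weights can stay hidden.
    """
    draft = base_model(query)  # y_o ~ pi_theta(. | x)
    prompt = ALIGNER_TEMPLATE.format(query=query, answer=draft)
    return aligner(prompt)  # y_c ~ mu_phi(. | y_o, x)


# Hypothetical stand-ins for real models, for illustration only.
def base_model_a(q: str) -> str:
    return "Unplug it, wait 30 seconds, and plug it back in."


def base_model_b(q: str) -> str:
    return "Just buy a new one."


def toy_aligner(prompt: str) -> str:
    # A real aligner is a trained LM; this toy one just tags the draft it was shown.
    draft = prompt.split("Answer: ", 1)[1].split("\nCorrected answer:", 1)[0]
    return f"[corrected] {draft}"


# The same aligner is reused unchanged across different base models.
for base in (base_model_a, base_model_b):
    print(aligned_generate("How do I reset my router?", base, toy_aligner))
```

Swapping `base_model_a` for `base_model_b` needs no change to the aligner. That is what "train once, plug-and-play" buys.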

One important point to note is that the query and the base LLM's answer must be retokenized before they are passed to the aligner. Since the aligner works with any model, it naturally comes with its own tokenizer.
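Two toy tokenizers with incompatible vocabularies show why retokenization is needed. `CharTokenizer` and `WordTokenizer` are hypothetical stand-ins; real models would typically use different subword vocabularies. The sketch decodes the base model's token ids back to text with the base tokenizer. It then re-encodes the query and answer with the aligner's tokenizer, because the base model's ids are meaningless to the aligner.

```python
from typing import List


class CharTokenizer:
    """Hypothetical stand-in for the base model's tokenizer: one id per character."""

    def encode(self, text: str) -> List[int]:
        return [ord(ch) for ch in text]

    def decode(self, ids: List[int]) -> str:
        return "".join(chr(i) for i in ids)


class WordTokenizer:
    """Hypothetical stand-in for the aligner's tokenizer: one id per whitespace word.

    Toy behaviour: the vocabulary grows on the fly as new words are seen.
    """

    def __init__(self) -> None:
        self.vocab: dict = {}
        self.inv: List[str] = []

    def encode(self, text: str) -> List[int]:
        ids = []
        for word in text.split():
            if word not in self.vocab:
                self.vocab[word] = len(self.inv)
                self.inv.append(word)
            ids.append(self.vocab[word])
        return ids

    def decode(self, ids: List[int]) -> str:
        return " ".join(self.inv[i] for i in ids)


base_tok, aligner_tok = CharTokenizer(), WordTokenizer()

query = "Is the sky green?"
base_output_ids = base_tok.encode("Yes it is.")  # ids the base model emitted

# Go through text: decode with the base tokenizer, re-encode with the aligner's.
answer_text = base_tok.decode(base_output_ids)
aligner_input_ids = aligner_tok.encode(query) + aligner_tok.encode(answer_text)
print(aligner_input_ids)
```

If the base model is only reachable through an API, you receive text directly and the decode step disappears. The re-encoding with the aligner's tokenizer is still required.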

In the "warm-up" stage of its training, Aligner is first made to learn the identity function: it is trained on (query, unaligned answer, unaligned answer) triples. Once it has learned the identity, it is trained on (query, unaligned answer, aligned answer) triples.
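The two-stage curriculum comes down to changing the training target. This minimal sketch assumes dataset triples `(x, y_o, y_c)`. `Example`, `two_stage_curriculum`, and the `n_warmup` size are hypothetical, and the paper's actual warm-up data and schedule may differ.

```python
from dataclasses import dataclass
from typing import List, Tuple

Triple = Tuple[str, str, str]  # (x, y_o, y_c)


@dataclass(frozen=True)
class Example:
    query: str   # x
    draft: str   # y_o, the base model's unaligned answer (aligner input)
    target: str  # what the aligner is trained to output


def two_stage_curriculum(data: List[Triple], n_warmup: int) -> List[List[Example]]:
    """Return [warm-up stage, correction stage] training sets."""
    # Stage 1 (identity): target == draft, so the aligner learns to copy.
    warmup = [Example(x, y_o, y_o) for x, y_o, _ in data[:n_warmup]]
    # Stage 2 (correction): target is the corrected answer y_c.
    correction = [Example(x, y_o, y_c) for x, y_o, y_c in data]
    return [warmup, correction]


data = [
    ("What is 2 + 2?", "5", "2 + 2 = 4."),
    ("What is the capital of France?", "Lyon.", "Paris."),
]
stage1, stage2 = two_stage_curriculum(data, n_warmup=1)
print(stage1)
print(stage2)
```

Starting from the identity map means the aligner's default behaviour is to leave an answer alone. The correction stage then only has to learn the edits.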

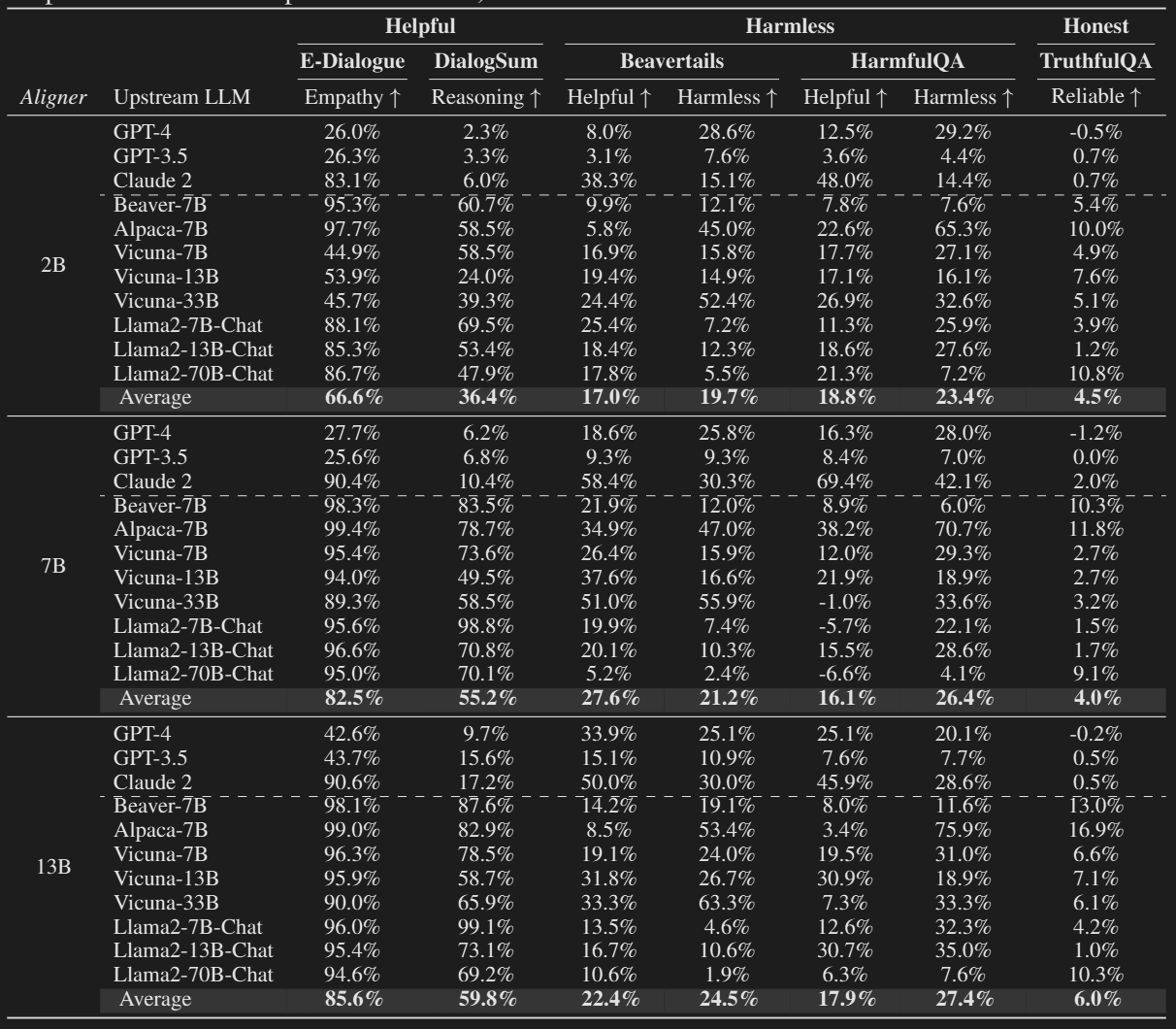

They use Gemma-2B and Llama2 (7B, 13B) models as aligners.

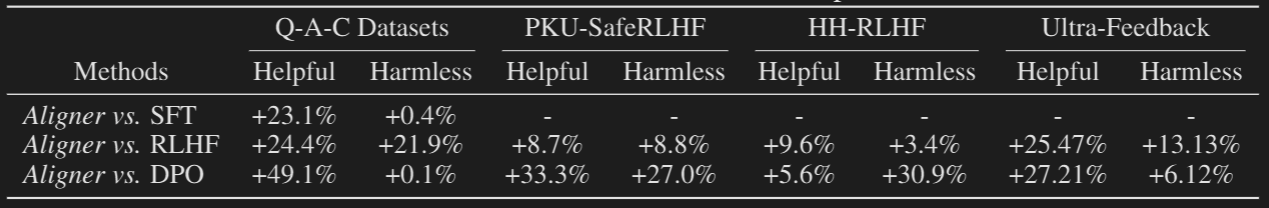

Aligners do pretty well even on LLMs that have already undergone RLHF/DPO.

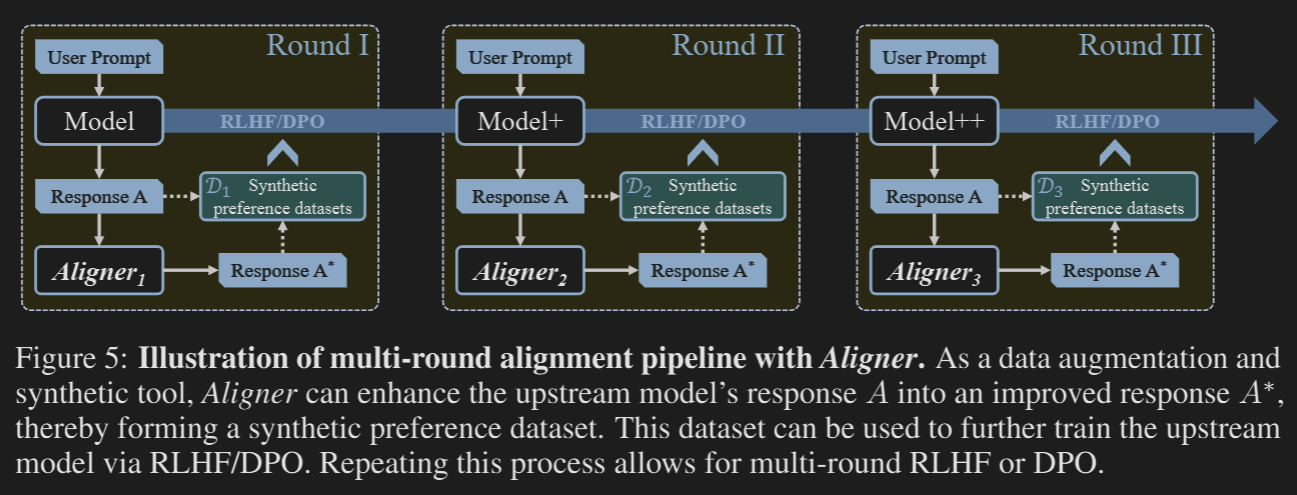

Multi-round RLHF training via Aligner

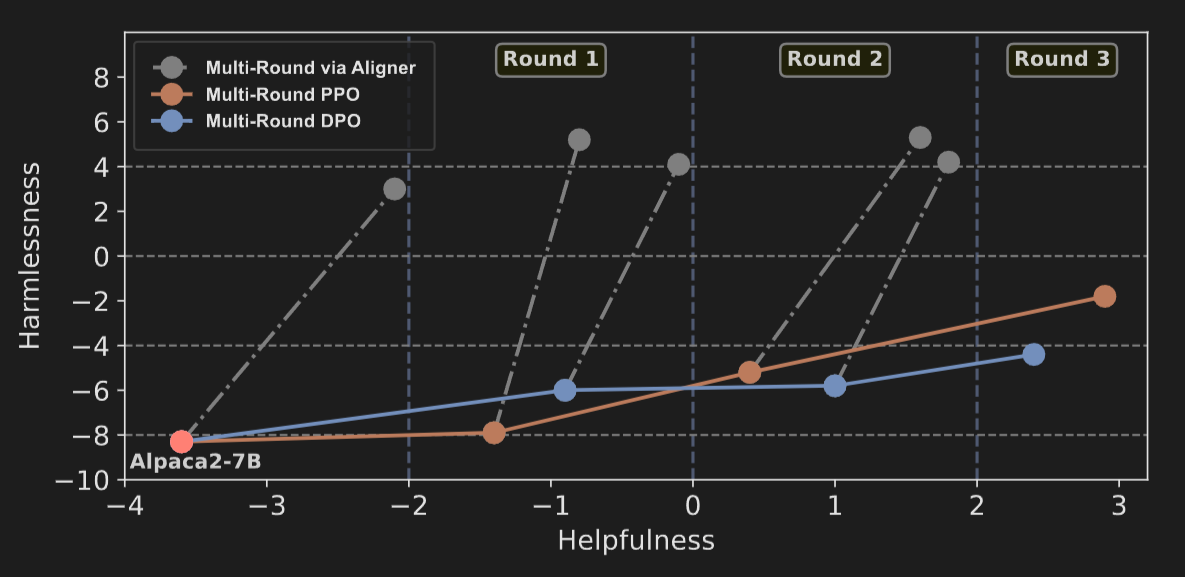

Typical multi-round RLHF may suffer from reward collapse. The preference dataset used to train the reward model can drift away from the distribution of the current base model (the policy), which leads to poor rewards. This error accumulates over multiple rounds and causes significant deviations in the final model.

Aligner can be used to rectify this issue.

Aligner acts as a synthetic data generator. It provides an efficient way to construct preference datasets on the fly, which prevents reward collapse.
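Each round of this data generation can be sketched as follows. The sketch assumes the aligner's correction y_c is the preferred ("chosen") answer and the current policy's draft y_o is the rejected one. The following are all assumptions for illustration:

- the function names;
- the `prompt`/`chosen`/`rejected` dict format, borrowed from common DPO tooling conventions;
- the filter that drops unchanged pairs;
- `toy_train_step`, a stand-in for a real DPO or reward-model-plus-PPO update.

Because the drafts are regenerated from the current policy every round, the preference data tracks the policy instead of going stale.

```python
from typing import Callable, Dict, List

Policy = Callable[[str], str]
Aligner = Callable[[str, str], str]  # (x, y_o) -> y_c


def build_preference_pairs(prompts: List[str], policy: Policy,
                           aligner: Aligner) -> List[Dict[str, str]]:
    """One round of synthetic preference data (assumes y_c is preferred to y_o)."""
    pairs = []
    for x in prompts:
        y_o = policy(x)        # drawn from the *current* policy, so on-distribution
        y_c = aligner(x, y_o)  # the aligner's correction
        if y_c != y_o:         # an identical pair carries no preference signal
            pairs.append({"prompt": x, "chosen": y_c, "rejected": y_o})
    return pairs


def toy_train_step(policy: Policy, pairs: List[Dict[str, str]]) -> Policy:
    # Hypothetical stand-in for a DPO/RLHF update: simply adopt the chosen answers.
    learned = {p["prompt"]: p["chosen"] for p in pairs}
    return lambda x: learned.get(x, policy(x))


def multi_round(prompts: List[str], policy: Policy, aligner: Aligner,
                rounds: int = 3) -> Policy:
    for r in range(rounds):
        pairs = build_preference_pairs(prompts, policy, aligner)  # fresh data each round
        print(f"round {r}: {len(pairs)} preference pairs")
        policy = toy_train_step(policy, pairs)
    return policy


def initial_policy(x: str) -> str:
    return "idk"


def toy_aligner(x: str, y_o: str) -> str:
    return f"A careful answer to: {x}"


prompts = ["Why is the sky blue?", "How do vaccines work?"]
final_policy = multi_round(prompts, initial_policy, toy_aligner)
print(final_policy(prompts[0]))
```

In this toy run, the first round yields a pair for every prompt. Later rounds yield none once the policy already produces what the aligner would write.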

In the appendix, the authors also examine inference speed, answer length, performance on tasks like MMLU and MATH, and much more. They provide many details, so replicating this paper should be straightforward.

Thoughts

I liked the paper. It's a really simple idea, but it works well regardless. I don't see this being used much in practice, though. In my opinion, DPO/RLHF are just better, since we get an end-to-end model and much faster inference. I also don't like that the query and the generated answer have to be re-tokenized before being passed to the aligner. Having a single tokenizer already causes so many issues. As the saying goes, "tokenization is the root of all evil".