Paper: arXiv

Authors: Yide Shentu, Philipp Wu, Aravind Rajeswaran, Pieter Abbeel

Date of Publication: 8th May 2024

Overview

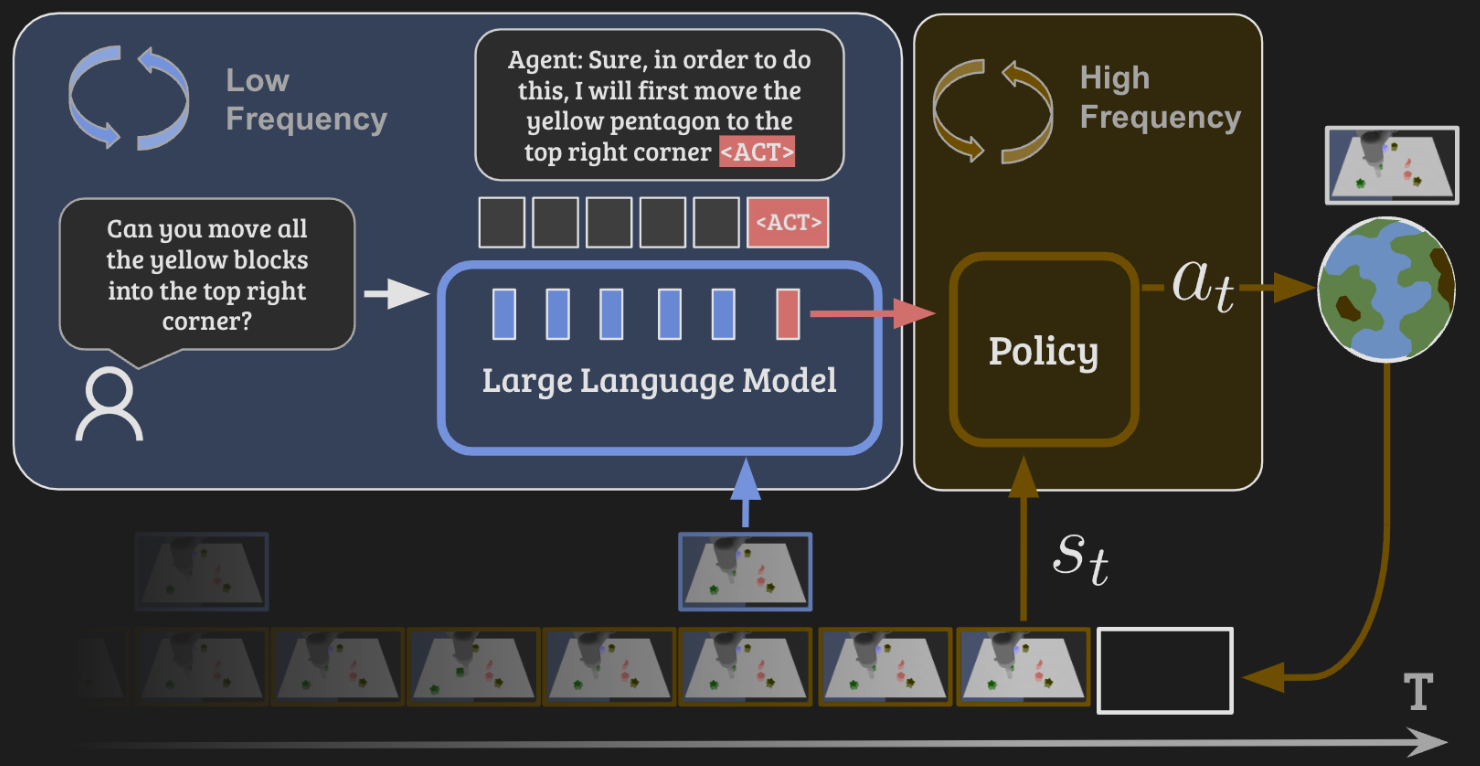

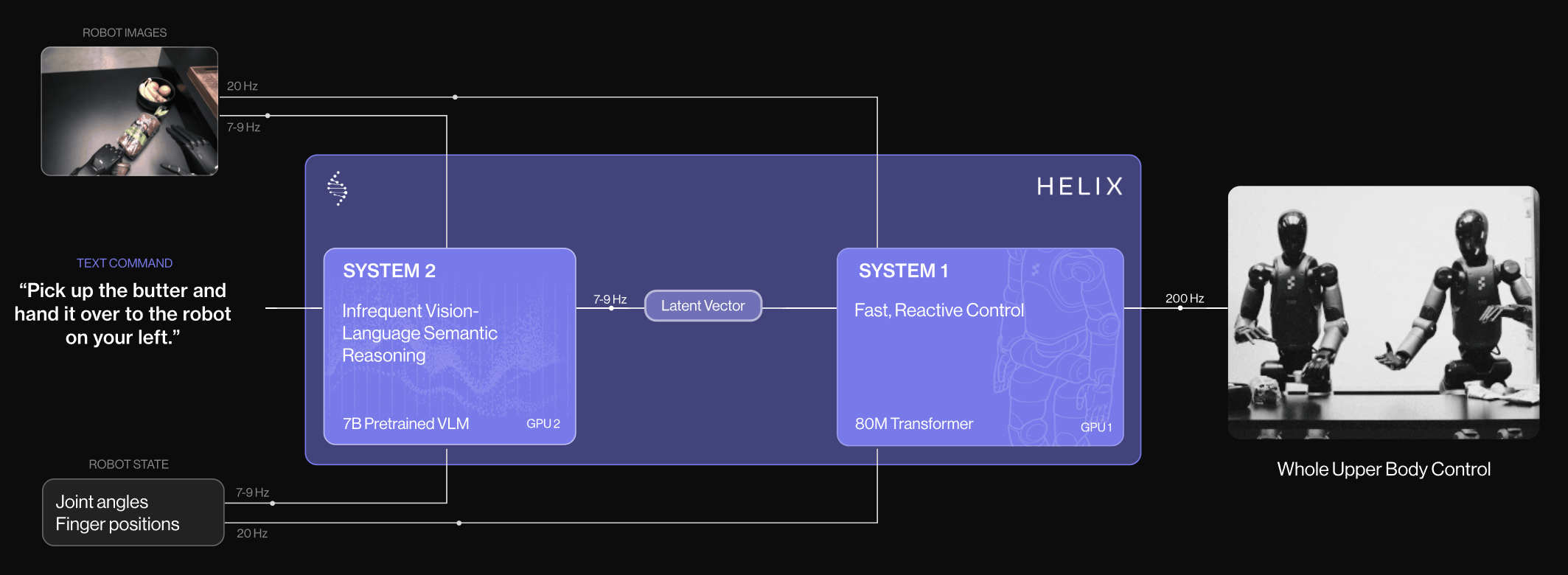

This paper breakdown was prompted by Figure's recently demoed Helix AI, which controls Figure's robots with a very similar method. In this framework, a multimodal LLM and a policy work together to complete tasks in the world. The LLM is prompted with the task in natural language along with a picture of the environment. It reasons through and breaks down the goal, and finally predicts an `<ACT>` token. The authors take the last-layer activations of this token and feed them to a policy. Conditioned on this embedding, the policy takes actions in the environment. The policy is fast and runs at a high frequency, whereas the LLM is expensive and is only used to reason through the task and predict the `<ACT>` token.
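The split between a slow reasoner and a fast controller can be sketched as a two-rate control loop. This is a minimal illustration under stated assumptions, not the authors' or Figure's code: `llm`, `policy`, `step_env` and the fixed `replan_every` schedule are hypothetical stand-ins, and a real system may run the LLM asynchronously instead of on a fixed step schedule.

```python
from typing import Callable, List

Latent = List[float]
Observation = List[float]
Action = List[float]


def run_episode(
    llm: Callable[[str, Observation], Latent],
    policy: Callable[[Observation, Latent], Action],
    step_env: Callable[[Action], Observation],
    instruction: str,
    first_obs: Observation,
    num_steps: int,
    replan_every: int,
) -> List[Action]:
    """Hypothetical two-rate loop: the slow LLM refreshes the latent only
    every `replan_every` steps, while the fast policy acts on every step."""
    obs = first_obs
    latent: Latent = []
    actions: List[Action] = []
    for t in range(num_steps):
        if t % replan_every == 0:
            # Expensive call: reason over instruction + current observation and
            # return the embedding that plays the role of the <ACT> token.
            latent = llm(instruction, obs)
        # Cheap call: map (observation, latent) to a low-level action.
        action = policy(obs, latent)
        actions.append(action)
        obs = step_env(action)
    return actions
```

With `num_steps=10` and `replan_every=5`, the LLM is called twice while the policy is called ten times, which is the whole point of the design: the costly model sets the intent, the cheap model keeps the robot moving.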

Methods

Let us first look at the methods commonly used to make an AI agent solve tasks in an environment:

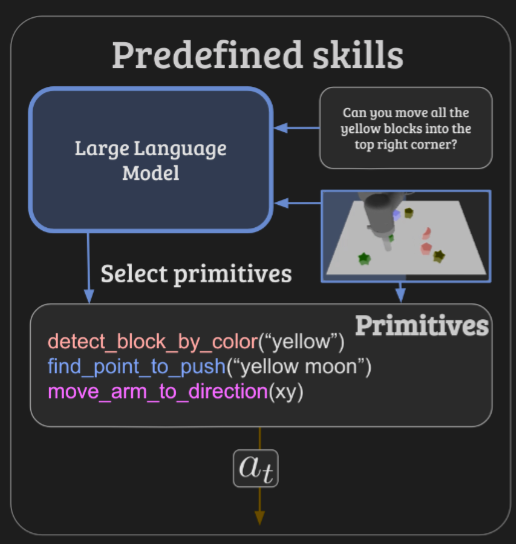

LLMs Leveraging Predefined Skills

A multimodal LLM on its own can be prompted with the task and given a few functions/APIs to call. The LLM reasons through the task and calls the required functions to complete it. But for an LLM to take a particular action, that action needs semantics that make linguistic sense, and many actions are difficult to describe in language. The LLM must also first be given an extensive, predetermined vocabulary of functions to call. It cannot generalise to tasks outside that vocabulary, and designing the vocabulary is difficult and labour-intensive.
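To make the "fixed vocabulary" limitation concrete, here is a minimal sketch of how an LLM's text output might be dispatched to hand-written skills. The skill names, the `name(arg)` call format and the helper functions are all hypothetical; real systems use richer tool-calling formats, but the constraint is the same: only pre-registered skills can run.

```python
from typing import Callable, Dict, Tuple

# Hypothetical, hand-designed skill vocabulary exposed to the LLM.
SKILLS: Dict[str, Callable[[str], str]] = {
    "pick": lambda obj: f"picked {obj}",
    "place": lambda obj: f"placed {obj}",
}


def parse_call(text: str) -> Tuple[str, str]:
    """Parse an LLM output such as 'pick(red_block)' into ('pick', 'red_block')."""
    name, _, rest = text.strip().partition("(")
    if not rest.endswith(")"):
        raise ValueError(f"malformed call: {text!r}")
    return name.strip(), rest[:-1].strip()


def execute(llm_output: str) -> str:
    """Run the skill named in the LLM output, if it exists in the vocabulary."""
    name, arg = parse_call(llm_output)
    if name not in SKILLS:
        # The LLM can only act through skills someone wrote in advance.
        raise KeyError(f"no skill named {name!r} in the vocabulary")
    return SKILLS[name](arg)
```

A request like `execute("dance(song)")` fails with a `KeyError`: no amount of reasoning by the LLM helps if nobody wrote a `dance` skill.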

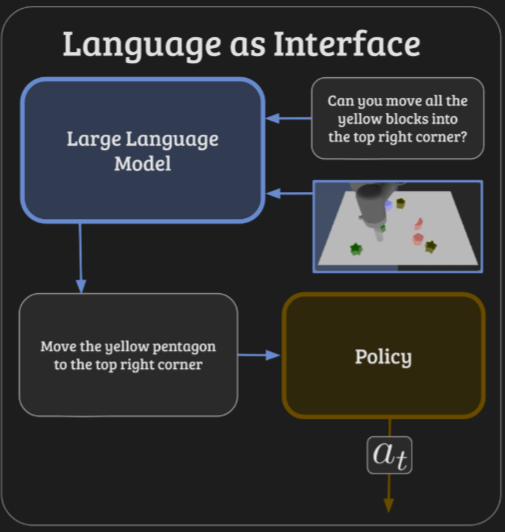

Language as Interface

This class of methods conditions the policy on the natural-language output of the LLM. The LLM takes the task from the user and breaks it down into simple low-level subtasks, and a policy conditioned on the LLM's output takes actions in the environment. But not all high-level tasks decompose easily into low-level steps expressed in natural language. Imagine writing step-by-step instructions for a robot to dance to a song.
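The language-as-interface pipeline can be sketched as follows. Everything here is hypothetical: `toy_text_embedding` is a hashed bag-of-words stand-in for a frozen text encoder such as CLIP, and `decompose` and `policy` stand in for the LLM planner and the language-conditioned policy. The point the sketch illustrates is that the policy only ever receives embeddings of plain-text subtasks, so anything the text cannot express never reaches it.

```python
import hashlib
from typing import Callable, List


def toy_text_embedding(text: str, dim: int = 8) -> List[float]:
    """Toy stand-in for a frozen text encoder: a hashed bag of words."""
    vec = [0.0] * dim
    for word in text.lower().split():
        idx = int(hashlib.md5(word.encode()).hexdigest(), 16) % dim
        vec[idx] += 1.0
    return vec


def language_interface(
    decompose: Callable[[str], List[str]],
    policy: Callable[[List[float]], str],
    task: str,
) -> List[str]:
    """The LLM emits plain-text subtasks; the policy sees only their embeddings."""
    outputs = []
    for subtask in decompose(task):
        outputs.append(policy(toy_text_embedding(subtask)))
    return outputs
```

Swapping the toy encoder for a real one changes the quality of the embeddings, not the bottleneck: the information passed down is still limited to what the subtask strings say.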

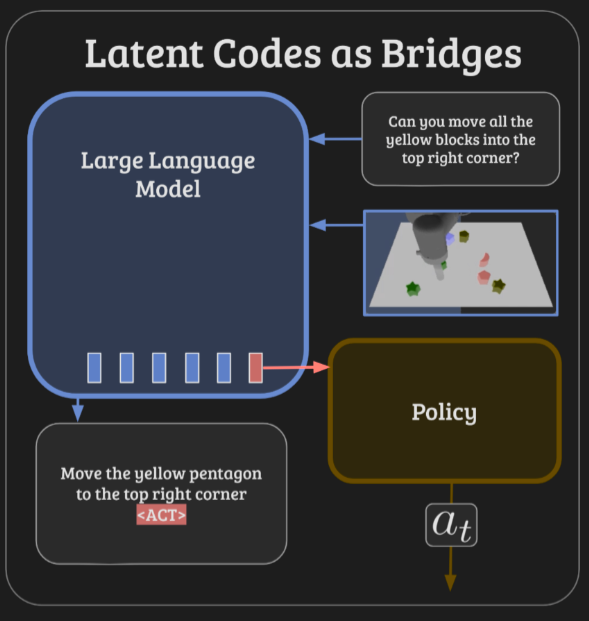

Latent Codes as a Bridge (LCB)

In this paper, the LLM takes a high-level description of the task as input, reasons through it and breaks it down into simple low-level tasks. But instead of feeding its natural-language output to the policy, the LLM is trained to predict the `<ACT>` token. The last-layer embedding of this token (its hidden state in the residual stream) is projected to the dimension of the policy's latent space and concatenated with the observations from the environment. The policy then takes the required actions.
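The bridge described above (find the `<ACT>` position, take its last-layer hidden state, project it, concatenate it with the observation) can be sketched in plain Python. This is an illustration under assumptions, not the authors' implementation: `ACT_TOKEN_ID` is a hypothetical id for the added token, the projection is assumed to be a single linear layer `z = W h + b`, and the hidden states are passed in as plain lists rather than model tensors.

```python
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]

ACT_TOKEN_ID = 32000  # hypothetical vocabulary id of the added <ACT> token


def act_embedding(token_ids: Sequence[int], last_hidden: Sequence[Vector]) -> Vector:
    """Return the last-layer hidden state at the final <ACT> position."""
    positions = [i for i, tok in enumerate(token_ids) if tok == ACT_TOKEN_ID]
    if not positions:
        raise ValueError("the LLM output contains no <ACT> token")
    return list(last_hidden[positions[-1]])


def project(h: Vector, weight: Matrix, bias: Vector) -> Vector:
    """Assumed linear projection z = W h + b into the policy's latent size."""
    return [sum(w * x for w, x in zip(row, h)) + b for row, b in zip(weight, bias)]


def policy_input(
    token_ids: Sequence[int],
    last_hidden: Sequence[Vector],
    weight: Matrix,
    bias: Vector,
    observation: Sequence[float],
) -> Vector:
    """Concatenate the projected <ACT> latent with the current observation."""
    z = project(act_embedding(token_ids, last_hidden), weight, bias)
    return z + list(observation)
```

Because the gradient of the policy loss can flow back through `project` into the LLM's hidden state, this latent can carry information that was never spelled out in words, which is what lets LCB avoid the language bottleneck.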

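The whole system is end-to-end trainable, and it avoids the limitations of the methods above: the policy is conditioned on a learned latent rather than on a fixed skill vocabulary or on natural-language instructions.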

Architecture

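Let the pretrained LLM be $f_\phi$ and the policy be $\pi_\theta$, parameterized by $\phi$ and $\theta$ respectively.

The authors use LLaVA as their pre-trained multimodal LLM. $f_\phi$ takes a text instruction $x_{\text{txt}}$ and an image $x_{\text{img}}$ as input, and outputs a text response $x_{\text{ans}}$ that contains the `<ACT>` token.

The last-layer embedding of `<ACT>`, $h_{\text{ACT}}$, is projected into $\pi_\theta$'s latent space to obtain $z_{\text{ACT}}$. $\pi_\theta$ takes $z_{\text{ACT}}$ and $o_t$ (the observation at time $t$) as input.

They optimize the following loss:

$$\mathcal{L} = \mathcal{L}_{\text{policy}} + \mathcal{L}_{\text{LM}} + \mathcal{L}_{\text{CLIP}}$$

$\mathcal{L}_{\text{policy}}$ depends on the pre-trained policy and can, in principle, be any loss suited to it.

$\mathcal{L}_{\text{LM}}$ is the standard autoregressive next-token prediction loss.

$\mathcal{L}_{\text{CLIP}}$ penalises the distance between $z_{\text{ACT}}$ and $e_{\text{CLIP}}$, the CLIP embedding of the LLM's output text. Keeping the predicted embedding close to this text embedding acts as a regulariser.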
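A combined objective with a policy term, a next-token term and a CLIP regulariser can be sketched as below. The concrete choices are assumptions for illustration only: mean squared error stands in for the policy loss (which really depends on the pre-trained policy), the regulariser is taken to be cosine distance, and the weights `lm_weight` and `clip_weight` are hypothetical knobs that default to a plain sum.

```python
import math
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Stand-in policy loss; the real one depends on the pre-trained policy."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def next_token_nll(logits: Sequence[Sequence[float]], targets: Sequence[int]) -> float:
    """Mean autoregressive cross-entropy: logits[i] scores the token targets[i]."""
    total = 0.0
    for row, tgt in zip(logits, targets):
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_z = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_z - row[tgt]
    return total / len(targets)


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Assumed regulariser: 1 - cos(a, b), zero when the vectors are aligned."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def total_loss(
    pred_action: Sequence[float],
    true_action: Sequence[float],
    logits: Sequence[Sequence[float]],
    targets: Sequence[int],
    z_act: Sequence[float],
    clip_emb: Sequence[float],
    lm_weight: float = 1.0,
    clip_weight: float = 1.0,
) -> float:
    """Hypothetical weighted sum of the three terms."""
    return (
        mse(pred_action, true_action)
        + lm_weight * next_token_nll(logits, targets)
        + clip_weight * cosine_distance(z_act, clip_emb)
    )
```

A cosine distance ignores the scale of `z_act`, which is one reasonable way to keep it "in the neighbourhood" of the text embedding without forcing an exact match; an L2 penalty would be an equally plausible alternative.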

Results

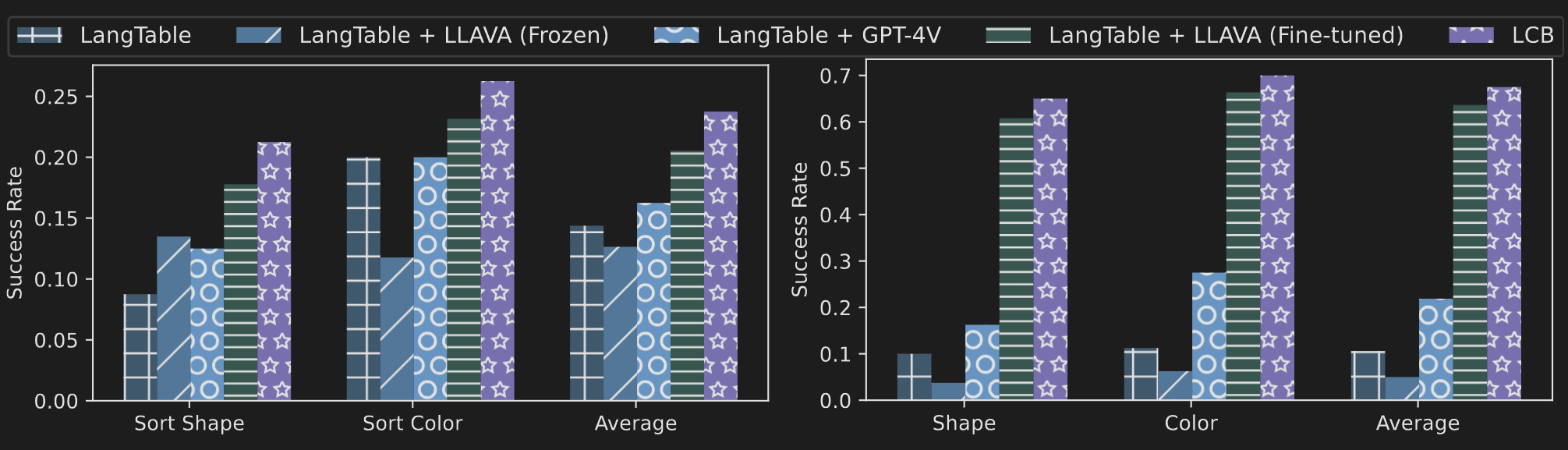

They evaluate LCB primarily on two benchmarks: Language Table and CALVIN.

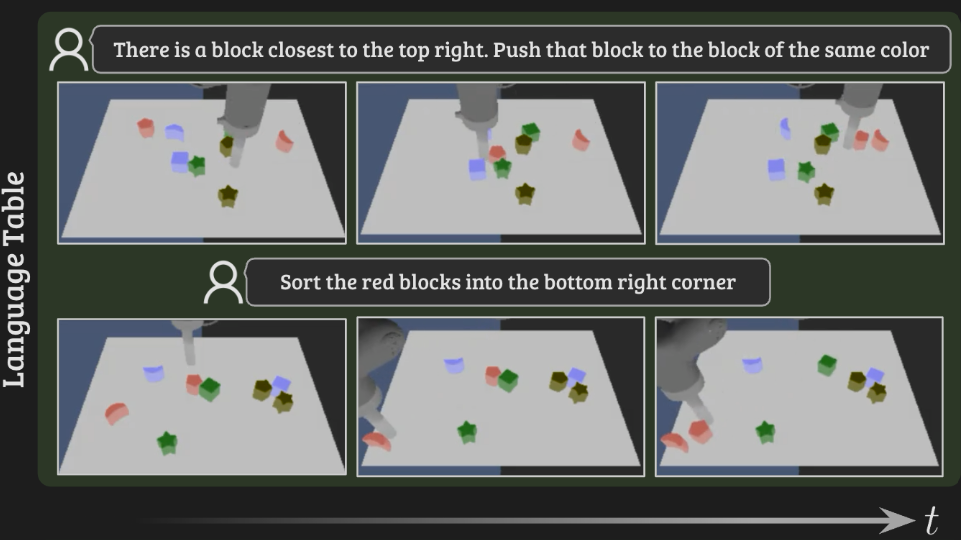

Evaluation on Language Table

Language Table offers a simulated tabletop environment for executing language-conditioned manipulation tasks. The environment features a flat surface populated with blocks of various colours and shapes, alongside a robot with a 2D action space. Language Table provides observations in the form of the robot end-effector position and third-person camera images.

A pre-trained policy for Language Table is already available. The authors use it as their base policy and fine-tune it.

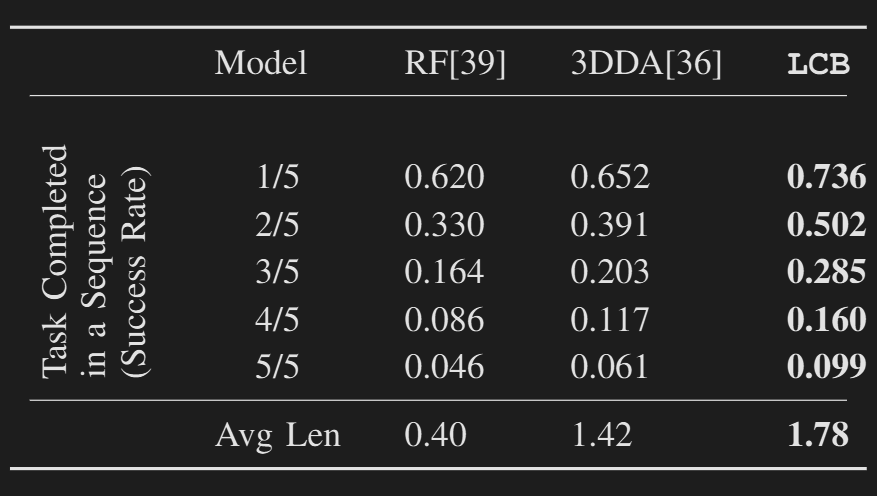

Evaluation on CALVIN

CALVIN is another language-conditioned manipulation benchmark, but it focuses on long-horizon tasks.

Thoughts

I think the loss function can be improved a little. They could have added this term:

I think an explicit extra loss term focusing on the `<ACT>` token should help the model learn a bit faster.

Pretty nice paper though.

If you haven’t seen Figure’s Helix AI yet, it is worth a look.

It is literally the same architecture. I don’t think the two robots in the demo are communicating; the same neural network is simply running separately on each of them. The model can generalise to such small collaborative tasks without any explicit communication, but it should falter on tasks that require explicit communication and extensive collaboration. Maybe soon they’ll run another small LLM on the output of the big LLM to summarise it and speak out the necessary thoughts.