Paper: arXiv

Code: GitHub

Authors: Clément Bonnet, Matthew V Macfarlane

Date of Publication: 13th November 2024

Overview

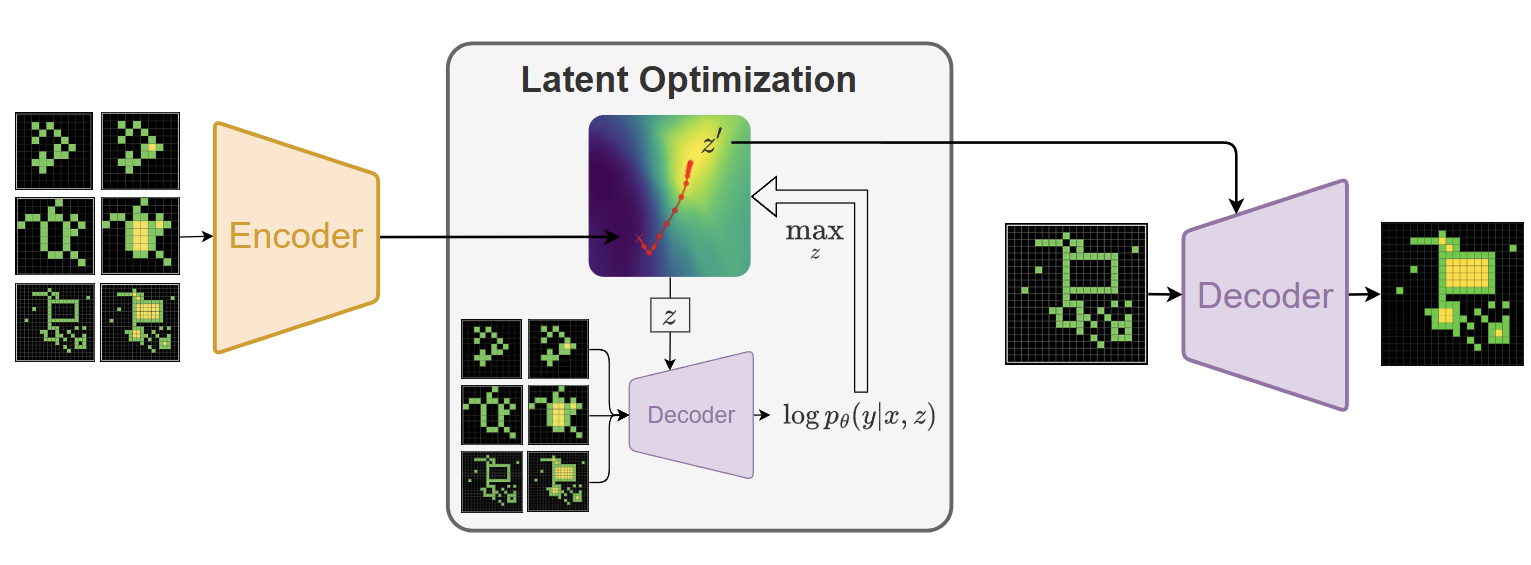

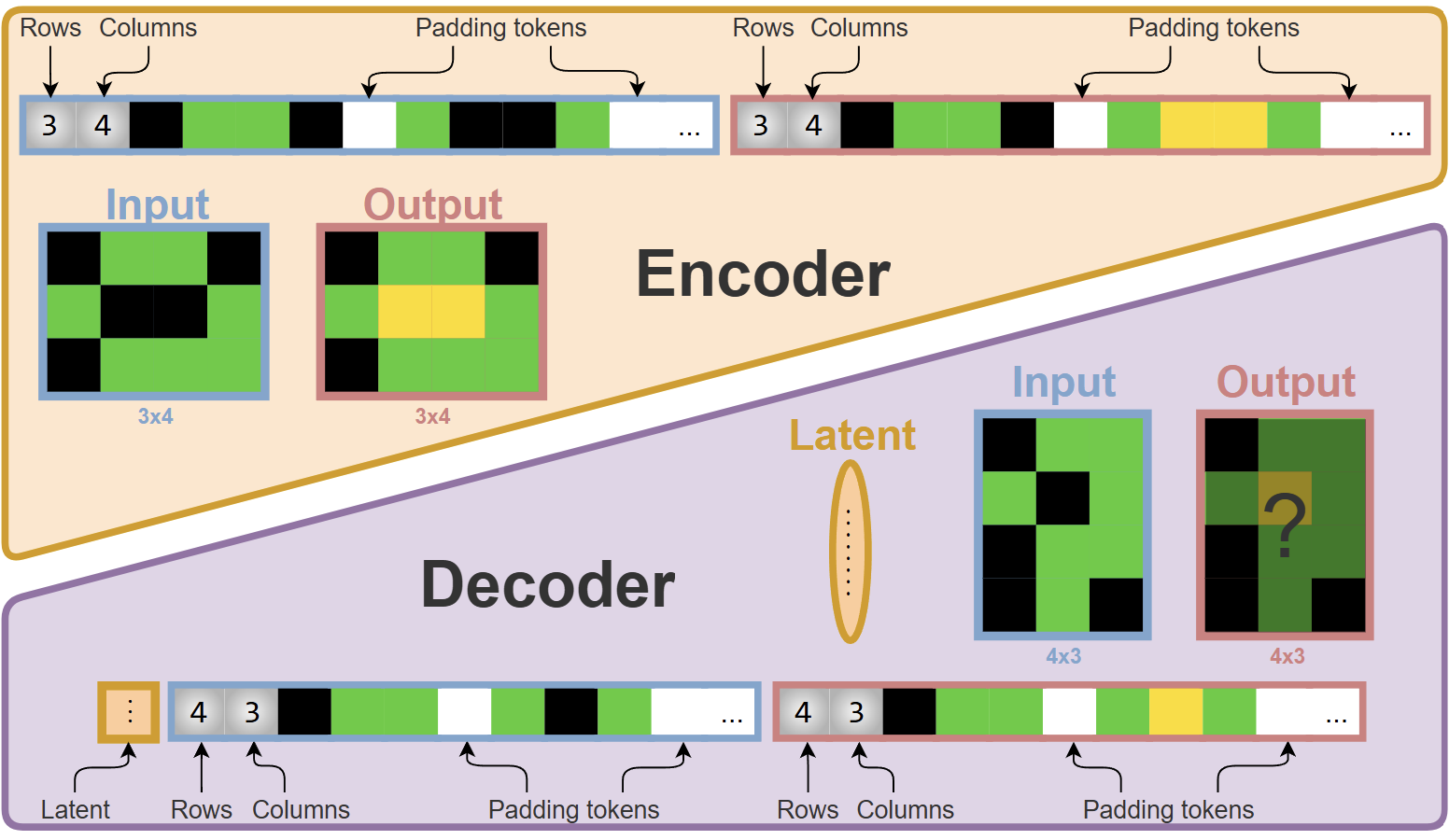

In this paper authors use program synthesis using Deep Learning to tackle ARC-AGI. Given a set of inputs and outputs, program synthesis aims to create/generate a program (or function) that transforms the input to the output. Given input and output pairs, authors use an encoder to map them into a latent space. The latent space is the distribution of all programs that explain the transformation form input to the output. Authors then sample from the latent space, and pass the latent variable to the decoder. The decoder takes input the new test input along with the latent vector. Decoder auto-regressively generates the required test output.

Authors also use few methods to search through the latent space to use the most optimal latent variable possible too.

Background

Program Synthesis

Let be the set of few input/output pairs (the task to be solved)

A program is considered to solve the task associated with if it satisfies:

Let represent the true function that generated the Input-Output pairs. In this paper authors explore the problem where we are given a task , along with an additional input :

Goal is not to explain the generating function but to find a program that generalises to the new additional input . ARC-AGI falls exactly in this category.

Variational Auto-Encoders

In the framework of VQ-VAE, given i.i.d samples from a dataset, we try to infer , where represents the latent variable. We try to find the latent variable that explains the input samples. Latent variable is usually in a much lower dimension than the input. Since is generally intractable, we approximate it using a neural network, . We maximize the evidence lower bound (ELBO):

By maximising the lower-bound, the log-likelihood increases. The first term in ELBO captures the likelihood of reconstructing from the latent variable . The KL divergence term regularizes to be close to the prior distribution (usually Gaussian). So given an input , using the neural network we predict , and using a decoder we reconstruct the input . We train it by maximising the ELBO as shown above. is a prior distribution chosen for .

Latent Program Network (LPN)

In this paper authors introduce Latent Program Network, an algorithm that trains a neural network end-to-end to take a specification of input-output pairs and generate output of newly given input.

Latent Program Inference

LPN consists of an Encoder and a Decoder which play a similar role as in VAE.

Encoder

The encoder is trained to predict the latent variable given the set of I/O pairs. It maps an input-output pair to a distribution in the latent space . The distribution in the latent space represents all possible programs that could explain the transformation of input to the output. The encoder is trained to learn an abstract representation of programs in a continuous latent space.

In practice, they use a multivariate Gaussian distribution to model the latent space. Encoder predicts the mean and a diagonal covariance matrix of the Gaussian distribution to sample the latent variable from.

Decoder

The decoder is responsible for mapping the latent variable sampled from the distribution predicted by the encoder, and the given input to an output distribution of all possible outputs , . Even though the mapping between I/O pairs is deterministic, they use a probabilistic framework so that it is compatible with maximum likelihood learning.

Conditioning the decoder on different points of the latent space leads to different outputs being generated.

Latent Optimization

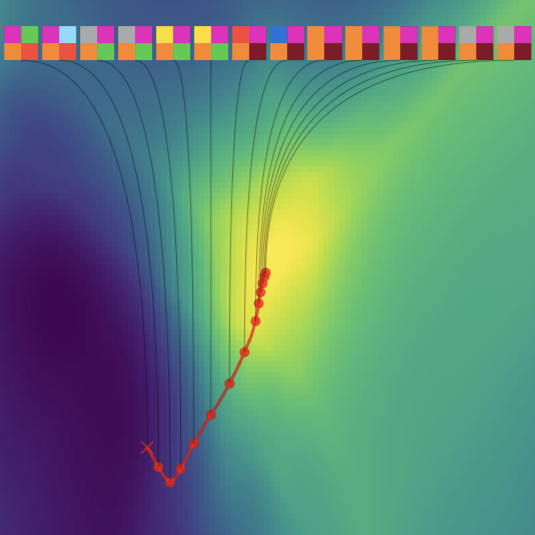

But there is a problem that needs to be addressed. Usually the latent variable predicted by the encoder is just not good enough. It may not encode the correct high level details necessary to explain the I/O transformation. Especially if the task is very novel, the encoder may fail at producing the right latent program, which, fed to the decoder, would generate the wrong output. Therefore, authors include a stage wherein they optimize the latent variable . Starting from the encoder’s prediction , they carry out a search to find a better latent . This is analogous to system 1/system 2 thinking. Encoder first generating an initial guess is analogous to system 1 thinking, while the search process to find a better is analogous to system 2 thinking.

Search Methods for Latent Optimization

Given input-output pairs , the search process attempts to find that satisfies:

This means we need to search for the latent that would most likely make the decoder generate the right outputs given the corresponding inputs. By finding a latent that can explain all the input-output pairs, the latent solution to the optimization problem is more likely to generalise to a new input-output pair.

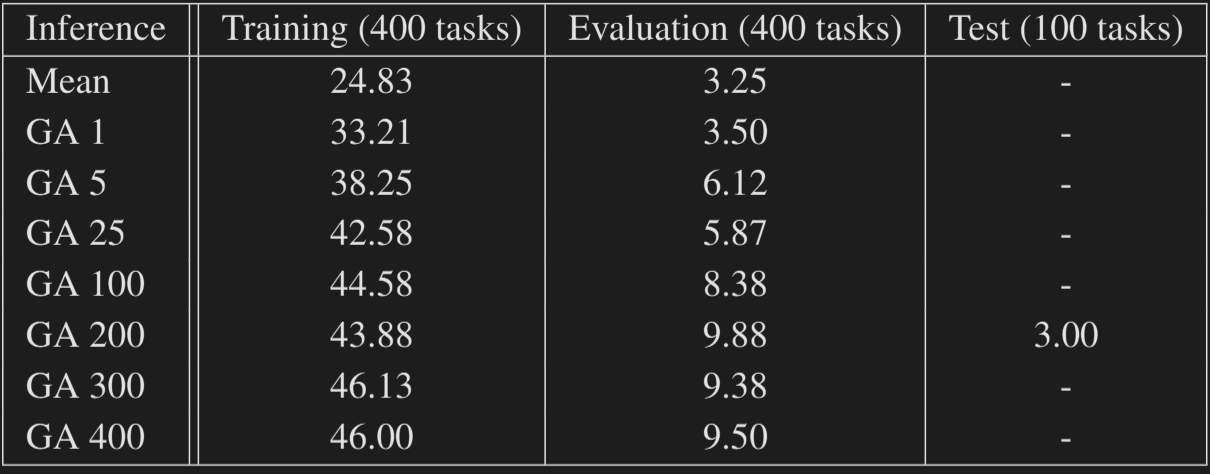

Authors use two methods of search, namely Random Search and Gradient Ascent

Random Search

Authors sample latent variables from either or and select the latent that gives the highest log-likelihood of the input-output pairs according to the decoder.

Random search asymptotically converges to the true maximum likelihood latent. However, the efficiency of random search decreases as the dimensions of the latent space increase. But it can still be used when the decoder is non-differentiable.

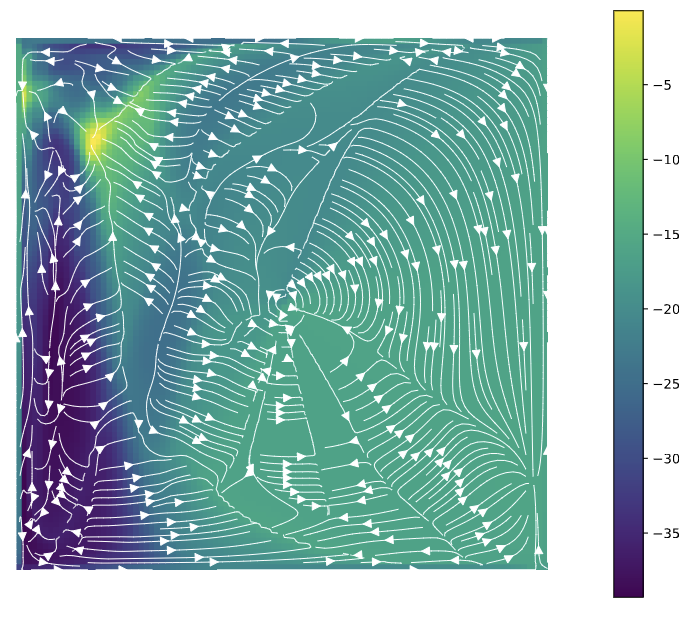

Gradient Ascent

Since the decoder is a differentiable neural network, it’s log-likelihood, is also differentiable with respect to . So we can use gradient-ascent to search through the that maximises the log-likelihood.

In practice, they use the best found latent in the search process, which may not always be the last latent .

Gradient field of the decoder log-likelihood

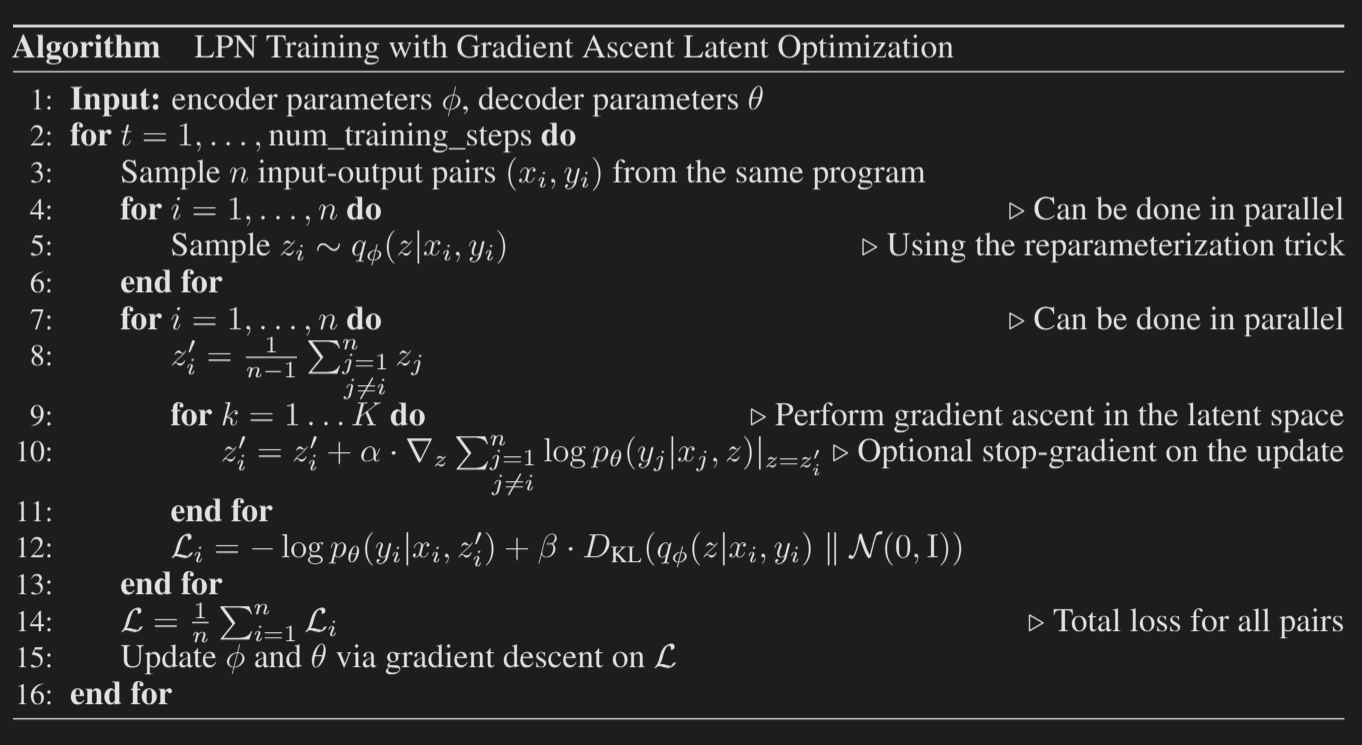

Training

To train an LPN system end-to-end, authors assume to have a dataset like ARC-AGI, consisting of input-output pairs generated by the same program. To simulate the test-conditions of predicting a new input, authors design the training procedure to reconstruct each of the outputs from their inputs and all the other input-output pairs . Authors do not use while predicting . Every other input-output pair along with is used.

When constructing , authors first sample latents from the encoder for all . Then they aggregate them by computing their mean, , then they perform gradient-ascent on to obtain . Finally, they compute negative log-likelihood of the right output using corresponding input and the refined latent . That is, they use cross-entropy loss of the decoder logits using the label .

Losses used are:

They use the standard re-parameterization trick while training.

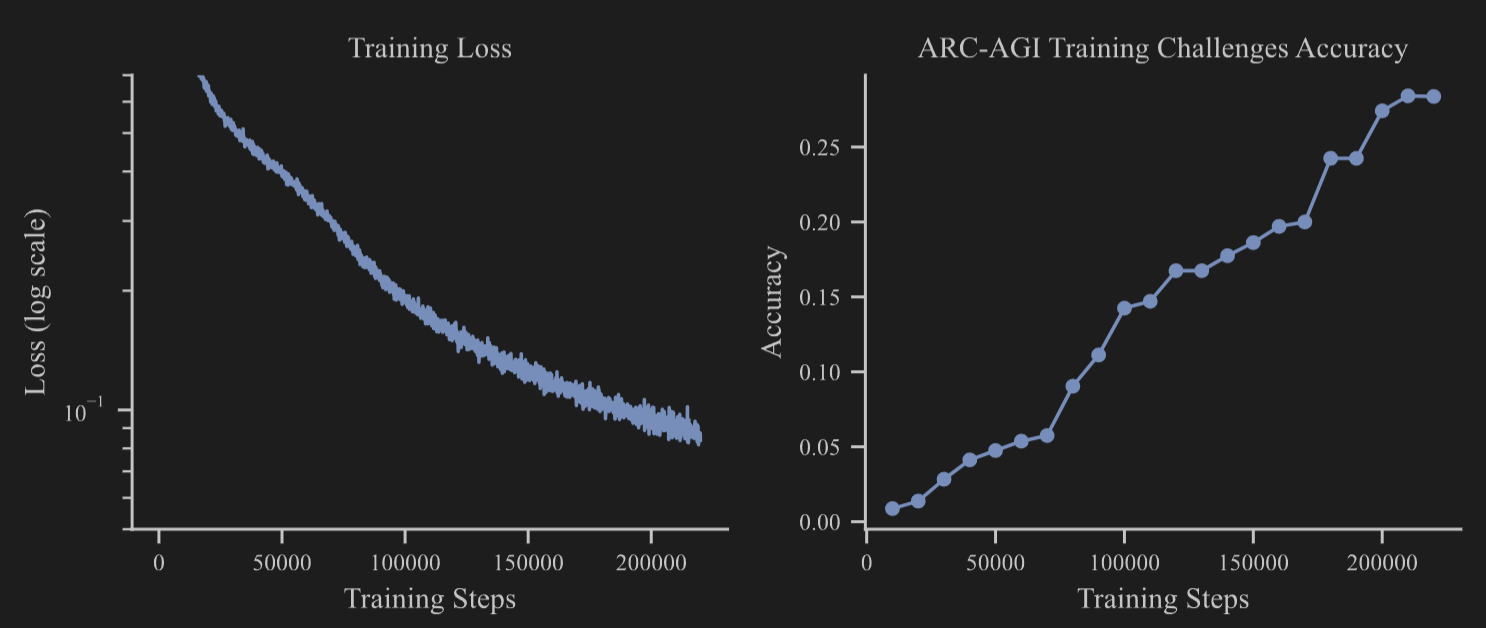

Training with gradient-ascent to optimize the latent is significantly more expensive. So authors use only 0-5 steps of gradient ascent steps while training, but during test-time we can use increased number of gradient ascent steps as needed.

ARC-AGI Experiments

The Architecture

They use standard encoder-only transformer for encoder and decoder-only transformer for auto-regressive decoding. Authors don’t use any pre-trained models and train only on the ARC-AGI train dataset.

If you are keen on minute implementation details, authors have open-sourced their code. They also provide extensive details in the paper.

Although the performance is very bad on the evaluation/validation set. They used GA200 while testing on the private test-set and it scored 3% only. Authors claim that the loss of the model has not converged yet and the model can further be improved but they were lacking the compute needed.

LPN fails to generalise to new tasks as we've seen from the ARC-AGI scores, but it atleast learns the tasks that it was trained on. It manages to learn and execute 180 programs of the ARC-AGI training set.

Thoughts

I really liked the searching in the latent space. But in this setting, we need a loss function that we can optimise during test-time. This is perfect for ARC-AGI, but I don’t know how we can extend this set-up towards broad tasks that we expect AGI to do. We can simply scale up test-time compute using LLMs as done in OpenAI-o1 like models to carry out the search process in general domains without being restricted to narrow tasks.

Clément Bonnet currently works in Ndea, a company founded by François Chollet to research Deep Learning guided program synthesis. I’m looking forward to research in this direction. It looks very promising nonetheless.