Paper: arXiv

Authors: Nikunj Saunshi, Nishanth Dikkala, Zhiyuan Li, Sanjiv Kumar, Sashank J. Reddi

Date of Publication: 24th February 2025

Overview

In this paper the authors perform an extensive comparison between looped and non-looped models. In a looped model, a small model with $k$ layers is looped $L$ times to reach an effective depth of $kL$ layers. A non-looped model has $kL$ distinct layers without any looping.

The authors find that looping a model improves its performance on downstream reasoning tasks such as math. On math, a looped model performs comparably to a non-looped model of the same effective depth while having fewer parameters. However, looping does not bring the same gains on memorisation-based tasks, and the perplexity of looped models is noticeably worse than that of non-looped models. To address these issues, they introduce a new regularisation strategy that aims to offer better reasoning while maintaining good perplexity.

They also present extensive theoretical results establishing an equivalence between chain of thought and model looping.

Looped Model

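The authors use the basic looped-model setup. Let $f$ be a model with $k$ layers, and let $f^{(L)}$ be $f$ looped $L$ times, i.e. $f^{(L)} = f \circ f \circ \cdots \circ f$ ($L$ copies). Let $(k \otimes L)$ denote the model with $k$ layers looped $L$ times. Its effective depth is therefore $kL$.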
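
The parameter/depth trade-off can be made concrete with a minimal NumPy sketch of $(k \otimes L)$, i.e. a block of $k$ layers applied $L$ times. The sketch assumes a toy residual tanh layer as a stand-in for a transformer layer. The names `make_layer`, `apply_block`, `looped_forward`, and `num_params` are hypothetical and do not come from the paper. It compares 2 layers looped 6 times with a non-looped 12-layer model.

```python
import numpy as np

rng = np.random.default_rng(0)
D_MODEL = 16  # hidden size; illustrative only

def make_layer():
    # Toy stand-in for a transformer layer (an assumption, not the paper's layer).
    return rng.standard_normal((D_MODEL, D_MODEL)) * 0.1

def apply_block(block, x):
    # One pass through the k layers of the block, each with a residual connection.
    for w in block:
        x = x + np.tanh(x @ w)
    return x

def looped_forward(block, x, num_loops):
    # (k ⊗ L): reuse the same k layers num_loops times -> effective depth k * L.
    for _ in range(num_loops):
        x = apply_block(block, x)
    return x

def num_params(block):
    # Count the weights actually stored (shared weights are counted once).
    return sum(w.size for w in block)

k, L = 2, 6
looped = [make_layer() for _ in range(k)]        # (2 ⊗ 6): 2 unique layers
baseline = [make_layer() for _ in range(k * L)]  # (12 ⊗ 1): 12 unique layers

x = rng.standard_normal((4, D_MODEL))
y_looped = looped_forward(looped, x, L)
y_base = looped_forward(baseline, x, 1)
print(num_params(looped), num_params(baseline))  # 512 3072
```

Both models apply 12 layers per forward pass, so their compute is the same. The looped model, however, stores 6 times fewer layer parameters.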

Experiments with Looped Models on Simple Reasoning Tasks

n-ary addition

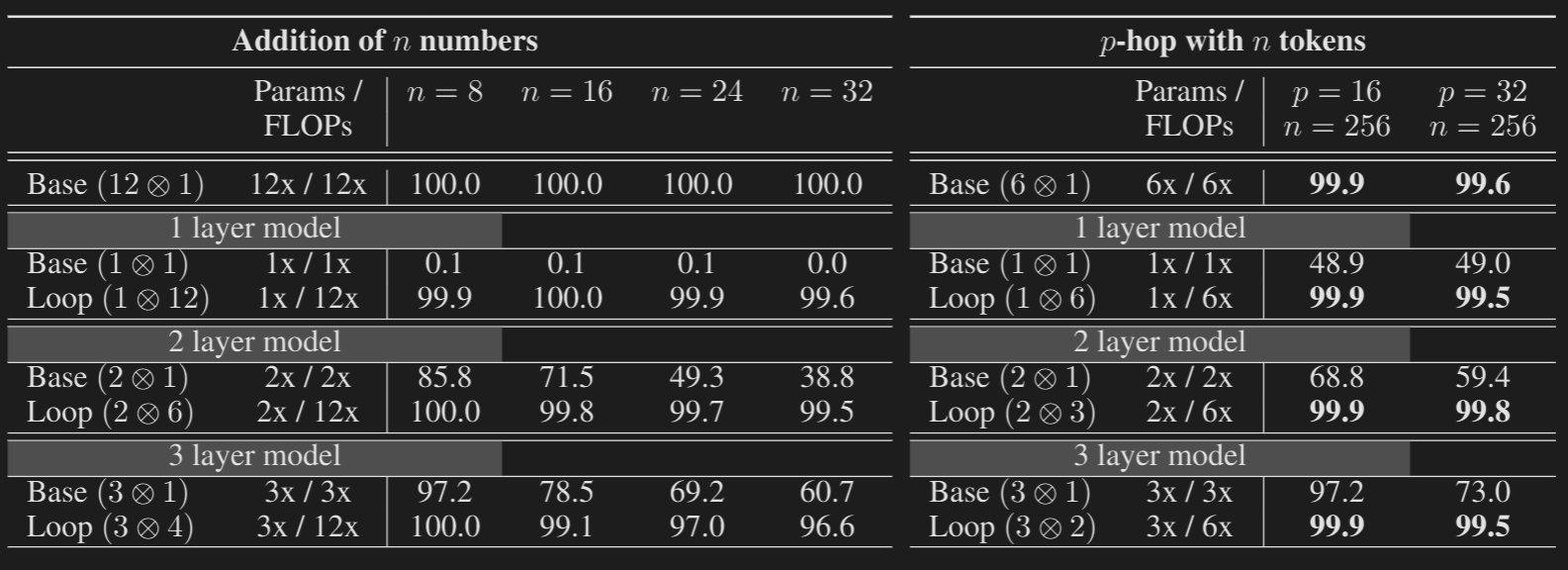

The authors consider the problem of adding $n$ 3-digit numbers. The results are presented in the table below:

The baseline is a model with 12 unique layers and no looping. A model with 1 layer looped 12 times matches the 12-layer baseline, reaching 99.9% accuracy, while the same 1-layer model without looping reaches only 0.1%. The other configurations follow the same trend.

The authors claim that for reasoning tasks, effective depth matters much more than parameter count. n-ary addition is a popular task for testing this, and the results support their claim.
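
For concreteness, here is a hypothetical generator for n-ary addition examples. It assumes operands drawn uniformly from 100 to 999 and a plain `a + b + ... =` prompt. The paper's exact input format and tokenisation are not reproduced here.

```python
import random

def make_nary_addition_example(n, rng):
    # Sample n 3-digit operands (assumed range 100..999, inclusive).
    operands = [rng.randint(100, 999) for _ in range(n)]
    # Hypothetical prompt format: "a + b + ... =" with the sum as the target.
    prompt = " + ".join(str(x) for x in operands) + " ="
    return prompt, str(sum(operands))

rng = random.Random(0)
prompt, answer = make_nary_addition_example(4, rng)
print(prompt, answer)
```

Increasing `n` makes the task harder without changing the kind of computation. This is why it is a convenient probe for how much sequential depth a model needs.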

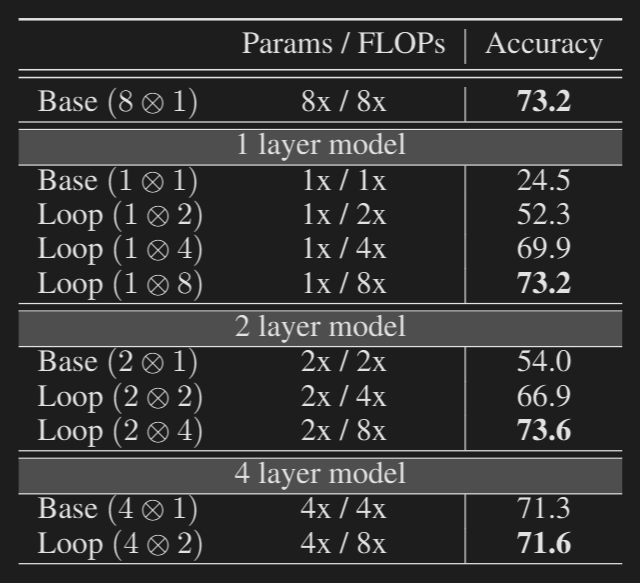

i-GSM (Synthetic Grade School Math Problems)

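The authors constructed this synthetic task to test their claims. Each problem is a list of variable assignments, and the model must compute the value of the queried variable. Judging from the worked answer, all arithmetic is done modulo 7 (for example, H#J = 4 + 4 = 8 ≡ 1). Below is an example question: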

Question:

E#I := 4. E#J := E#I. K#N := I#N + J#O + F#K. F#K := E#J. J#O := F#K + K#O + E#J. H#J := E#J + F#K. I#P := L#M + I#N + K#O. I#M := J#O + J#P + F#K. J#P := H#J - F#K. L#M := I#N + J#P + F#K. I#N := 2 * J#P + 2 * H#J + 2 * E#I. K#O := J#P + I#N + E#J. I#P?

Answer with CoT:

E#I = 4. E#I = 4. E#J = E#I. E#J = 4. F#K = E#J. F#K = 4. H#J = E#J+F#K. H#J = 1. J#P = H#J-F#K. J#P = 4. I#N = 2J#P+2H#J+2E#I. I#N = 4. L#M = I#N+J#P+F#K. L#M = 5. K#O = J#P+I#N+E#J. K#O = 5. I#P = L#M+I#N+K#O. I#P = 0.
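
As a sanity check on the worked answer, here is a small Python evaluator for this exact problem. It makes two assumptions:

- Arithmetic is modulo 7, inferred from the example itself, since 4 + 4 gives 1.
- I#N uses the CoT answer's expression (2J#P + 2H#J + 2E#I), the reading under which every intermediate value in the answer is consistent.

`PROBLEM`, `MOD`, and `value` are illustrative names, not part of any i-GSM tooling.

```python
from functools import lru_cache

MOD = 7  # assumption: inferred from the worked answer (4 + 4 -> 1)

# Each variable maps to (coefficient, operand) terms; an int operand is a constant.
PROBLEM = {
    "E#I": [(1, 4)],
    "E#J": [(1, "E#I")],
    "K#N": [(1, "I#N"), (1, "J#O"), (1, "F#K")],
    "F#K": [(1, "E#J")],
    "J#O": [(1, "F#K"), (1, "K#O"), (1, "E#J")],
    "H#J": [(1, "E#J"), (1, "F#K")],
    "I#P": [(1, "L#M"), (1, "I#N"), (1, "K#O")],
    "I#M": [(1, "J#O"), (1, "J#P"), (1, "F#K")],
    "J#P": [(1, "H#J"), (-1, "F#K")],
    "L#M": [(1, "I#N"), (1, "J#P"), (1, "F#K")],
    "I#N": [(2, "J#P"), (2, "H#J"), (2, "E#I")],  # expression taken from the CoT answer
    "K#O": [(1, "J#P"), (1, "I#N"), (1, "E#J")],
}

@lru_cache(maxsize=None)
def value(var: str) -> int:
    # Recursively evaluate a variable's dependencies, memoising each result.
    total = 0
    for coef, operand in PROBLEM[var]:
        x = operand if isinstance(operand, int) else value(operand)
        total += coef * x
    return total % MOD  # Python's % maps negatives into 0..MOD-1

print(value("I#P"))  # 0
```

Only the variables that I#P depends on are evaluated. K#N, J#O, and I#M are never needed, and the CoT answer skips them too.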

The trend continues: looped models match the performance of non-looped models with the same effective depth while using fewer parameters (the FLOPs per forward pass are the same).

The authors also run experiments on the ‘p-hop induction’ task, and the same trend holds there.

Language Modelling with Looped Models

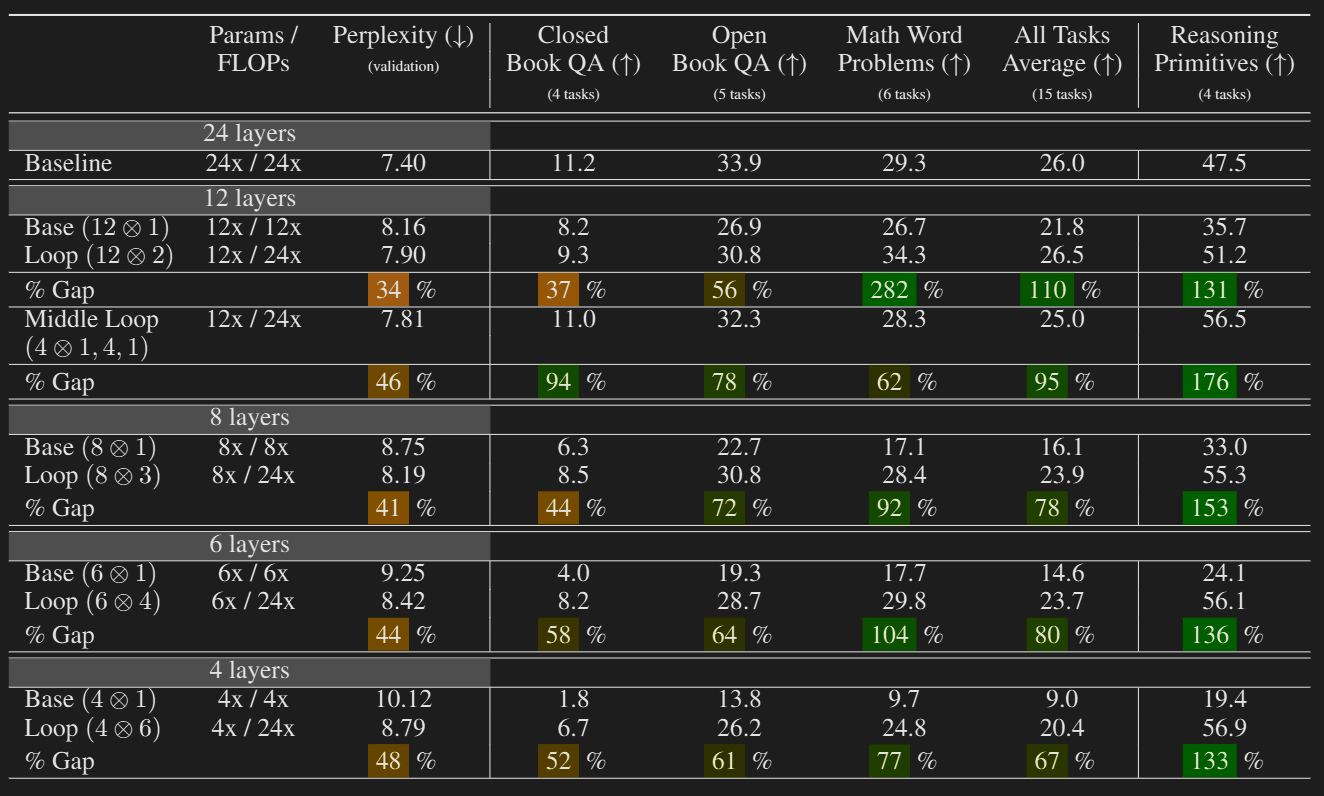

The authors pre-train a 24-layer model as the baseline. They compare it with:

(1) stand-alone models with 12, 8, 6, and 4 layers; and
(2) the same 12-, 8-, 6-, and 4-layer models looped 2, 3, 4, and 6 times respectively, matching the baseline's 24-layer depth.

All of these models are pre-trained.
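
The looped configurations follow from simple arithmetic. A $k$-layer block must be looped $24/k$ times to reach depth 24, and it holds $k/24$ of the baseline's transformer-layer parameters. This ignores embedding parameters, which all models share. Below is a small Python sketch; `looped_configs` is a hypothetical helper.

```python
from fractions import Fraction

BASELINE_DEPTH = 24

def looped_configs(block_sizes, target_depth=BASELINE_DEPTH):
    # For each block size k, loop L = target_depth / k times so that k * L == target_depth.
    configs = []
    for k in block_sizes:
        if target_depth % k != 0:
            raise ValueError(f"block size {k} does not divide depth {target_depth}")
        L = target_depth // k
        # Fraction of the baseline's layer parameters (embeddings ignored).
        configs.append((k, L, Fraction(k, target_depth)))
    return configs

for k, L, frac in looped_configs([12, 8, 6, 4]):
    print(f"({k} x {L}): effective depth {k * L}, layer params = {frac} of baseline")
```

Even the largest looped model (12 layers looped twice) has only half of the baseline's layer parameters.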

They find that looped models do not match the baseline's perplexity. They argue that parameter count matters for next-token prediction because it requires some memorisation: fewer parameters mean less memorisation capacity, so the looped models generally do worse than the baseline. They also evaluate these models on various downstream tasks. Looped models often match the baseline on reasoning tasks (tasks that need little memorisation), but they fall short on tasks that involve memorisation.

Closed-book and open-book QA tasks require memorising facts. On these, the looped models do about as well as their non-looped counterparts, nowhere close to the 24-layer baseline. On reasoning tasks, however, they are comparable to the baseline while having at most half its parameter count.

They also try middle looping: the first and last layers are kept as they are, and only the middle layers are looped. The results are in the table above. Middle looping does better than regular looping on QA tasks but falls short on math. The authors do not explore this further and leave it for future work.
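
The difference between regular and middle looping is easiest to see as the order in which the unique layers are applied. The Python sketch below assumes exactly one un-looped layer at each end, as described above. The helper names are hypothetical, and the layer counts are illustrative rather than the paper's configurations.

```python
def regular_loop_schedule(k, num_loops):
    # (k ⊗ L): unique layers 0..k-1 applied in order, repeated num_loops times.
    return list(range(k)) * num_loops

def middle_loop_schedule(num_unique_layers, num_loops):
    # First and last layers run once; only the layers in between are looped.
    if num_unique_layers < 3:
        raise ValueError("need at least one middle layer")
    middle = list(range(1, num_unique_layers - 1))
    return [0] + middle * num_loops + [num_unique_layers - 1]

print(regular_loop_schedule(2, 3))  # [0, 1, 0, 1, 0, 1]
print(middle_loop_schedule(4, 3))   # [0, 1, 2, 1, 2, 1, 2, 3]
```

In the middle-looped schedule, the input and output layers keep dedicated weights. This is one plausible reason the variant behaves differently from plain looping, though the authors leave the analysis open.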

Scaling Behavior of looping

They find that task performance increases with effective depth, regardless of whether that depth comes from looping or from distinct layers. The improvement is logarithmic in effective depth.

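They model accuracy on a downstream task as:

$$\text{acc} \approx \alpha \log D + \beta$$

where $D$ is the effective depth, $\alpha$ is a scalar that measures the impact of depth on the downstream task accuracy, and $\beta$ is an intercept.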

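To assess the relative impact of looping on a task, they define $\rho = \alpha_{\text{loop}} / \alpha_{\text{base}}$, the ratio of the depth coefficient fitted on looped models to the one fitted on non-looped models. They find that for reasoning tasks, depth obtained through looping has an equal or greater impact than raw depth ($\rho \geq 1$).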
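
The fit itself is ordinary least squares on $\log D$. The sketch below does three things:

- recovers $\alpha$ (the slope on $\log D$) for a looped and a non-looped series;
- takes the ratio $\alpha_{\text{loop}} / \alpha_{\text{base}}$ as $\rho$;
- uses `fit_depth_scaling`, a hypothetical helper.

The accuracies are synthetic, generated from made-up coefficients purely to exercise the code. They are not the paper's measurements.

```python
import numpy as np

def fit_depth_scaling(depths, accuracies):
    # Least-squares fit of acc ≈ alpha * log(D) + beta; returns (alpha, beta).
    alpha, beta = np.polyfit(np.log(depths), accuracies, deg=1)
    return float(alpha), float(beta)

# Synthetic, noise-free accuracies from made-up coefficients (NOT the paper's data).
depths = np.array([4, 6, 8, 12, 24])
acc_base = 0.05 * np.log(depths) + 0.10  # hypothetical non-looped models
acc_loop = 0.06 * np.log(depths) + 0.05  # hypothetical looped models of the same effective depth

alpha_base, _ = fit_depth_scaling(depths, acc_base)
alpha_loop, _ = fit_depth_scaling(depths, acc_loop)
rho = alpha_loop / alpha_base  # rho >= 1: looping's depth is at least as useful as raw depth
print(round(alpha_base, 3), round(alpha_loop, 3), round(rho, 2))  # 0.05 0.06 1.2
```

The choice of logarithm base rescales both slopes by the same factor, so it cancels in $\rho$.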

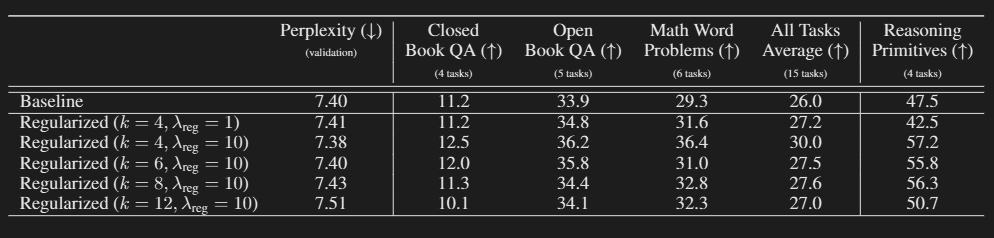

Looping inspired regularization

Looping improves performance on reasoning tasks but hurts perplexity and memorisation-based tasks. How can we get the benefits of both in a single model?

Suppose the model we want has $kL$ layers, written as $f = f_L \circ \cdots \circ f_1$, where each block $f_i$ has $k$ layers. So there are $L$ blocks of $k$ layers each, for $kL$ layers in total. Since we want the model to behave like a looped model, we add a regularization term to the loss. This term pushes the parameters of all blocks to be close to each other, but not exactly equal. The model therefore keeps a high parameter count and does well on perplexity and on tasks requiring memorisation.

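Let $G$ denote a group of parameters; $G$ may be, for example, all query matrices or all feed-forward matrices. Let $\theta_G^{(i)}$ denote the weights of group $G$ in the $i$-th block, for $i = 1, \ldots, L$. The regularization term for group $G$ is:

$$R_G(k) = \frac{1}{L-1} \sum_{i=1}^{L-1} \cos\left(\theta_G^{(i)}, \theta_G^{(i+1)}\right)$$

This term encourages the weights of each group $G$ to have high cosine similarity with the corresponding weights in the neighbouring block. In this way we mimic a looped model without actually looping, while every block keeps its own unique parameters.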

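Thus, the final loss is:

$$\mathcal{L} = \mathcal{L}_{\text{xent}} - \lambda_{\text{reg}} \sum_{G} R_G(k)$$

Here $R_G(k)$ is the cosine-similarity regulariser for parameter group $G$, and we add it for every group. The final loss combines the next-token prediction (cross-entropy) loss $\mathcal{L}_{\text{xent}}$ with the regularisers, weighted by a constant $\lambda_{\text{reg}} \geq 0$. The minus sign means that minimising the loss maximises the similarity between neighbouring blocks.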
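
A forward-only NumPy sketch of the regulariser $R_G$ follows, i.e. the average cosine similarity between group $G$'s weights in neighbouring blocks, together with the combined loss. It makes the following assumptions:

- Each group's weights for block $i$ are stored as one array stacking that group's matrices from the block's $k$ layers, so layer $j$ of block $i$ lines up with layer $j$ of block $i+1$.
- The group names `"query"` and `"ffn"` and all function names are hypothetical.
- In real training, the same computation would live in an autodiff framework so that gradients flow through the cosine similarities.

```python
import numpy as np

def cosine(a, b):
    # Cosine similarity between two weight tensors, flattened to vectors.
    a, b = a.ravel(), b.ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def group_regularizer(block_weights):
    # R_G(k): mean cosine similarity between group-G weights of neighbouring blocks.
    # block_weights[i] stacks group G's matrices from the k layers of block i.
    L = len(block_weights)
    sims = [cosine(block_weights[i], block_weights[i + 1]) for i in range(L - 1)]
    return sum(sims) / (L - 1)

def regularized_loss(xent_loss, groups, lam):
    # Total loss = xent - lam * sum_G R_G(k): higher block similarity lowers the loss.
    return xent_loss - lam * sum(group_regularizer(w) for w in groups.values())

rng = np.random.default_rng(0)
k, L, d = 2, 3, 8
shared = rng.standard_normal((k, d, d))
groups = {
    "query": [shared.copy() for _ in range(L)],                 # identical blocks
    "ffn": [rng.standard_normal((k, d, d)) for _ in range(L)],  # independent blocks
}
print(group_regularizer(groups["query"]), group_regularizer(groups["ffn"]))
```

Identical blocks give $R_G = 1$ (up to floating-point rounding), which is the fully looped limit. Independently initialised blocks give a value near 0, so a larger $\lambda_{\text{reg}}$ pulls training toward the looped end.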

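Setting the regularisation strength $\lambda_{\text{reg}} = 0$ recovers standard training. Letting $\lambda_{\text{reg}} \to \infty$ pushes the model toward a fully looped model in which the weights repeat every $k$ layers.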

The results show that the regulariser gives a slight improvement on reasoning tasks without compromising perplexity.

The authors also provide extensive theoretical results on looped models:

- Looping is equivalent to scaling chain of thought.
- A looped model can solve addition of arbitrary length, which non-looped models can also solve.
- Looped models can simulate non-looped models.

Breaking down the proofs and theoretical results is impractical and out of scope for this post.