Paper: arXiv

Authors: It’s a paper from Anthropic

Date of Publication: 4th November 2022

Overview

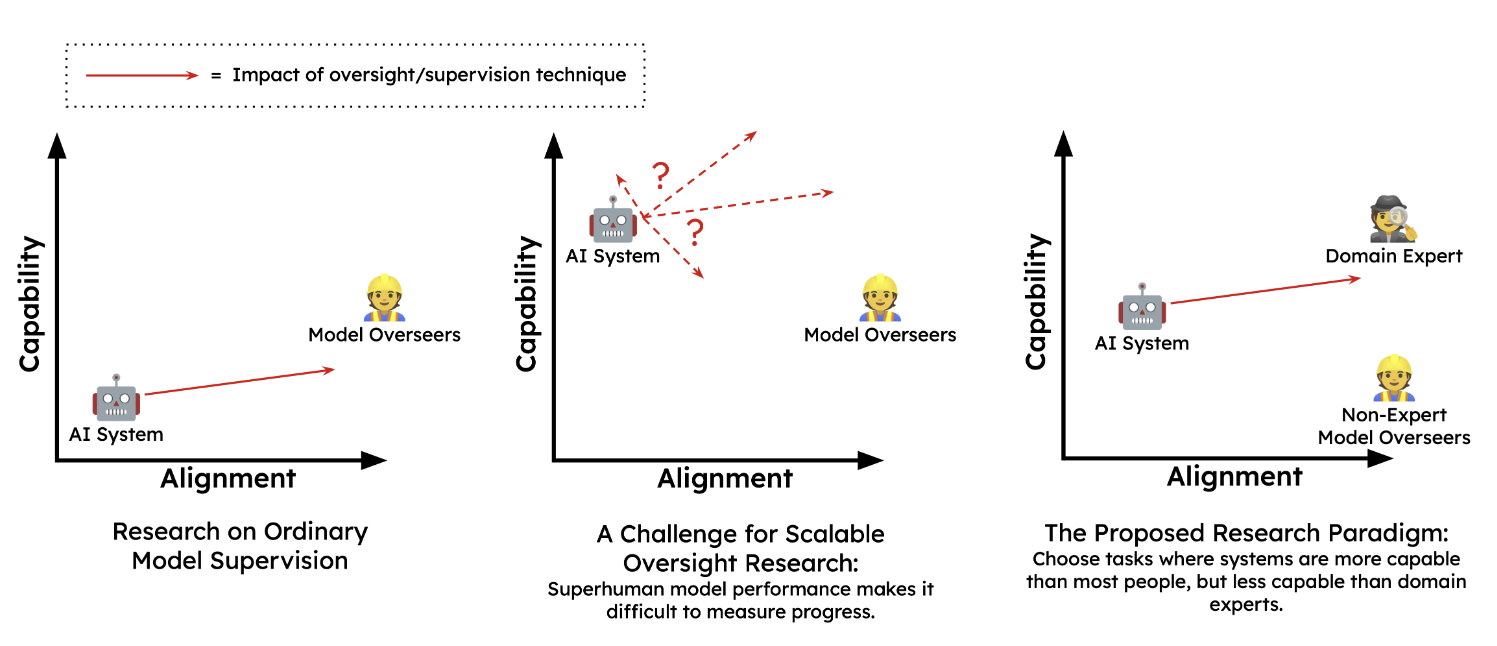

Scalable oversight research looks for techniques to align superhuman models. Since we do not yet have a generally superhuman model, the authors use the sandwiching paradigm. Under this paradigm, researchers choose problem settings where a model is already more capable than a typical human but less capable than an expert. Non-expert participants try to train and align the model to perform the task reliably without any assistance from the experts. At the end, the experts evaluate the degree to which the non-experts succeeded. This gives a way to test how well our current methods might work for aligning superintelligence.

In this paper, the authors call a model misaligned if its performance on a task improves when it is finetuned or few-shot prompted. Such a model already had the prerequisite knowledge to solve the task and only needed finetuning or few-shot prompting to bring it out. The authors use the MMLU and QuALITY benchmarks to measure the misalignment of the models.

The authors relax the constraints of the sandwiching paradigm. Instead of using human experts, they simply use the ground-truth labels of MMLU and QuALITY. Non-expert participants are also prohibited from modifying the model’s weights in any way and can only interact with the model as a chat assistant. The authors find that even in this simple setting, humans are able to get considerably better performance out of the model.

Sandwiching - A Research Paradigm for Scalable Oversight

The goal of scalable oversight is difficult to pursue experimentally because current systems differ in many important ways from the future systems that we are most concerned with being able to align, so scalable oversight techniques are often both unnecessary and cumbersome today. Still, there is some empirical work we can do now to prepare for aligning superhuman AI.

Under the sandwiching experimental paradigm, researchers choose a problem setting where a model is already better than a typical human but is not better than the experts. Nonexpert human research participants then attempt to train or align the model to perform the task reliably with an artificial constraint: They may not use any help or input (including preexisting written materials) from the experts. The experts participate only at the end of each experimental cycle, when their role is to evaluate the degree to which the non-expert participants succeeded.

In the future, we can expect to find ourselves in the position of these non-expert participants: tasked with aligning models that are better than us at a given task.

The authors call a model misaligned if its performance on a task improves after a small intervention such as fine-tuning on a high-quality dataset or few-shot prompting. The improvement shows that the model already had most of the skills needed to succeed at the task but was not applying them until it was aligned.
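One way to make this definition concrete is as a gap between accuracy before and after a light intervention. The sketch below is only an illustration: the function names, the `min_gap` threshold, and the toy accuracies are my own assumptions, not something the paper specifies.

```python
def alignment_gap(baseline_acc: float, intervened_acc: float) -> float:
    """Accuracy gained from a light intervention (few-shot prompting or fine-tuning)."""
    return intervened_acc - baseline_acc


def looks_misaligned(baseline_acc: float, intervened_acc: float, min_gap: float = 0.05) -> bool:
    # min_gap is a hypothetical threshold, chosen here only for illustration.
    return alignment_gap(baseline_acc, intervened_acc) >= min_gap


# Toy numbers, not results from the paper.
zero_shot_acc = 0.40
few_shot_acc = 0.60
print(f"gap: {alignment_gap(zero_shot_acc, few_shot_acc):.2f}")
print(f"misaligned under this definition: {looks_misaligned(zero_shot_acc, few_shot_acc)}")
```

A large gap suggests the capability was already there and the intervention only surfaced it; a gap near zero suggests the model was either already doing its best or lacks the skill.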

The authors use the MMLU and QuALITY benchmarks for this experiment. Both consist of multiple-choice questions with four options. QuALITY questions are answered under a per-question time limit.

Non-expert participants are tasked with aligning the model, i.e. improving its performance on the benchmarks, and experts would later evaluate how well they did.

The authors relax the sandwiching paradigm for this experiment in two ways.

Relaxation: Static Model: Sandwiching places no limits on how non-expert participants may intervene on the model, including changing its weights. In this experiment, however, the authors fix a static model, and participants can only interact with it through a simple chat interface. The participants’ goal in this case is to reach the highest level of performance on the task that is achievable through direct interaction with the model, rather than fine-tuning or otherwise modifying the model to cause it to perform well on its own as in the original paradigm.

This relaxation rules out many potential scalable oversight strategies. But if participants succeed in getting the desired behaviour from the model in this setting, the result serves as a lower bound on how well they could have done had they been allowed to modify the model’s weights.

Relaxation: Labels in Place of Experts: Instead of using human experts, the authors simply use the ground-truth labels of MMLU and QuALITY to evaluate how well the participants have ‘aligned’ the model.
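With labels standing in for experts, evaluation reduces to scoring each condition's answers against the ground truth. Here is a minimal sketch of that scoring step, assuming answers are stored as option letters; the `accuracy` helper, the condition names, and the five toy questions are all made up for illustration and do not come from the paper.

```python
from typing import Sequence


def accuracy(answers: Sequence[str], labels: Sequence[str]) -> float:
    """Fraction of answers that match the ground-truth labels."""
    if len(answers) != len(labels):
        raise ValueError("answers and labels must have the same length")
    if not labels:
        raise ValueError("need at least one question")
    correct = sum(a == b for a, b in zip(answers, labels))
    return correct / len(labels)


# Toy data for five 4-choice questions; none of this comes from the paper.
labels = ["A", "C", "B", "D", "A"]
human_alone = ["A", "B", "B", "C", "D"]       # unassisted participant
model_alone = ["A", "C", "D", "C", "A"]       # model answering on its own
human_with_model = ["A", "C", "B", "C", "A"]  # participant after chatting with the model

scores = {
    "human_alone": accuracy(human_alone, labels),
    "model_alone": accuracy(model_alone, labels),
    "human_with_model": accuracy(human_with_model, labels),
}
for condition, score in scores.items():
    print(f"{condition}: {score:.0%}")
```

In this toy data the assisted participant scores highest, which is the pattern the experiment is looking for: the human and model together should beat either one alone.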

Experiment

The authors use a 52B-parameter model finetuned to act as a helpful dialogue assistant.

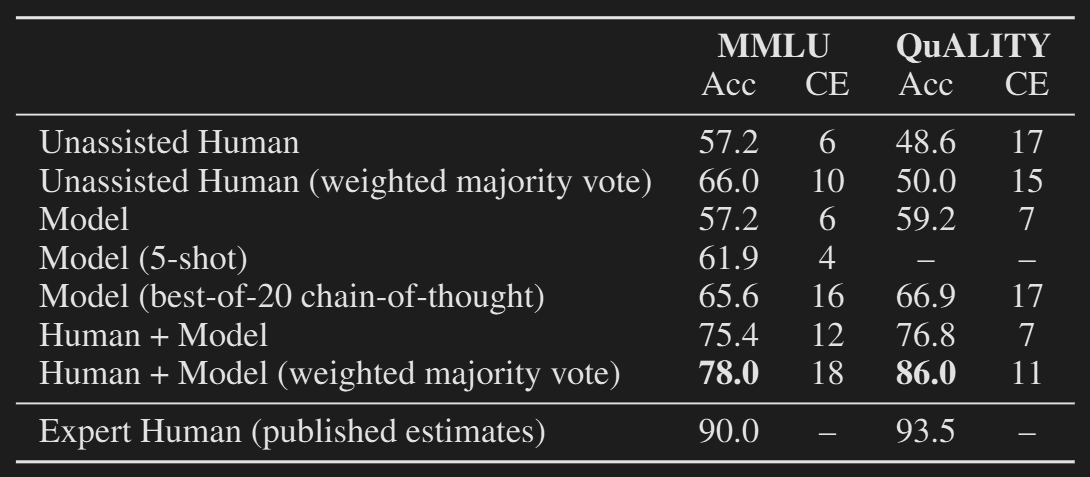

Unsurprisingly, giving humans access to the model increases performance (from 54% to 80% on MMLU; 38% to 75% on QuALITY).
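To put those figures in perspective, the short sketch below works out the gain in percentage points and how far each figure sits above random guessing on a 4-choice question (25%). The accuracies are the ones quoted in the sentence above; the `before`/`after` labels and the helper name `gain_points` are my own.

```python
# Accuracies (in percent) as quoted in the sentence above.
reported = {
    "MMLU": {"before": 54, "after": 80},
    "QuALITY": {"before": 38, "after": 75},
}
CHANCE = 25  # random guessing among 4 options


def gain_points(before: int, after: int) -> int:
    """Absolute improvement in percentage points."""
    return after - before


for benchmark, acc in reported.items():
    gain = gain_points(acc["before"], acc["after"])
    above_before = acc["before"] - CHANCE
    above_after = acc["after"] - CHANCE
    print(
        f"{benchmark}: +{gain} points "
        f"({above_before} -> {above_after} points above chance)"
    )
```

That is a gain of 26 points on MMLU and 37 points on QuALITY. The QuALITY jump is the larger one, starting from only 13 points above chance.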

Human–machine dialogues averaged 10.9 turns and 2.0 conversation resets on MMLU and 6.3 turns and 0.1 conversation resets on QuALITY, with the lower figures for QuALITY likely explained in substantial part by the use of a time limit.

Limitations

The authors admit that this approach has many limitations and that we can’t just align a superintelligent AI using a simple chat interface. “Our results are simply not strong enough to validate our simple human–model interaction protocol for use in high-stakes situations: Human participants in this protocol sometimes give highly confident judgments that turn out to be wrong. We sometimes see participants accepting false claims from the model that sound confident and plausible, since they have limited domain knowledge and limited ability to fact-check the model.”

This experiment was carried out on multiple-choice questions, and many similar questions can be found on the internet. The authors expect scalable alignment to matter most in settings where a model is asked to help us do something more substantially different from what it was trained to do.

Thoughts

This paper seems almost trivial, but it is actually quite important to formalize things and test the most obvious methods first. Honestly, I do think this will always remain a very effective method. If LLMs ever reach superintelligence, then monitoring their chain of thought and just talking with them should do the job. But it is not always feasible to have a human constantly checking the model.

This works really well for simple Q/A-style scenarios, but LLMs are becoming increasingly agentic, and in those settings we can’t have a human monitoring the LLM all the time.

PS: They could have titled the paper: “Talking with LLMs is All You Need”